API Testing—Strategy and Tools

If you’re not careful, unit testing your Web Service API can take over all your testing. Here’s a unit testing strategy that shows what it means to “unit test” a Web Service API.

Your API testing can quickly grow out of control if you don’t distinguish between unit testing your API and integration/end-to-end testing. Without that distinction, your API tests will start taking over tests that don’t belong to your API and duplicating tests you already have.

Rather than just handwave about an API testing strategy, I’m going to walk through a typical strategy using an API testing tool so that you can see what that strategy looks like in real-life terms. I’m sure we’re all shocked and amazed that I’ll be using Telerik Test Studio for APIs for those demos. I know that I am.

What Is API Testing?

In the end, an API has to be implemented with code and, like any other set of code, you need to test it. “Testing code” is normally organized into a hierarchy of unit testing, integration testing and end-to-end testing. At the start of that hierarchy, unit testing confirms both that your code does the right thing when fed its inputs and that your code passes the right data to whatever comes next in the processing chain (integration testing is where you confirm that the handoff itself works correctly).

With APIs, though, unit testing can be where the wheels fall off. Because APIs are an interface to a processing chain, it’s easy to confuse unit testing an API with integration testing of that processing chain. An effective API testing strategy identifies what “unit testing an API” means (and what it doesn’t) and where testing an API fits into the hierarchy of integration and end-to-end testing.

API Unit Tests

Like any other set of tests, API testing follows the Arrange-Act-Assert pattern. Because an API begins its life as a set of URLs (with Azure’s API Management, you can define your API without writing any code at all), you begin your API testing with your URLs, not with your code.

Arrange

Typically, for RESTful services, your URLs all share a base URL and all your requests are just variations on that URL. For example, a customer service might have just two URLs to support all of its basic CRUD operations:

- http://customers: Retrieve all customers or add a customer with the system supplying the customer id

- http://customers/A123: Retrieve/update/delete/add a customer specifying the customer id (“A123” in this case)

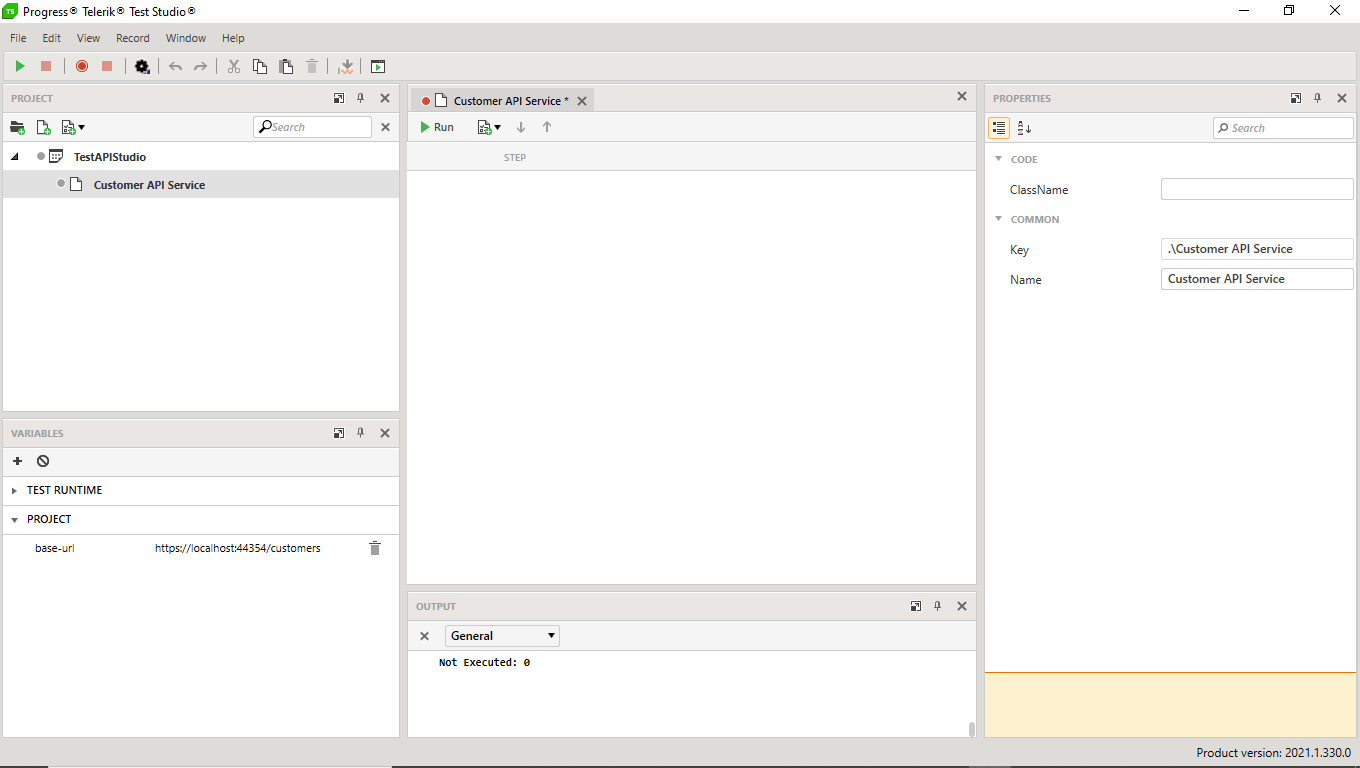

For my customer service, then, my base URL would be http://customers. In Test Studio for APIs, that’s supported by creating a project-level variable (called, by convention, base-url, though you can call the variable anything you want). Leveraging that variable, the two URLs for my basic CRUD operations would look like this (Test Studio for APIs uses double curly braces to mark variables in a test):

- {{base-url}}: Retrieve all customers or add a customer with the system supplying the customer id

- {{base-url}}/A123: Retrieve, update, delete or add a customer specifying the customer id
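Test Studio for APIs does this substitution for you. To make the mechanics concrete, here’s a minimal Python sketch of expanding `{{name}}` placeholders from a dictionary of project variables. The function name `expand_variables` and the choice to raise an error on an undefined variable (for example, a test that refers to `{{base-uri}}` when the variable is named `base-url`) are my own assumptions, not a description of how Test Studio for APIs works internally.

```python
import re

# Matches {{name}} placeholders; spaces just inside the braces are allowed.
_PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z0-9_-]+)\s*\}\}")


def expand_variables(template, variables):
    """Replace every {{name}} in template with variables[name].

    An undefined variable raises KeyError, so a typo in a test fails loudly
    instead of sending a request to a malformed URL.
    """
    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"undefined variable: {name}")
        return variables[name]

    return _PLACEHOLDER.sub(substitute, template)


project_variables = {"base-url": "http://customers"}
print(expand_variables("{{base-url}}", project_variables))       # http://customers
print(expand_variables("{{base-url}}/A123", project_variables))  # http://customers/A123
```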

Setting up my project and creating my first test with its base URL takes about two or three minutes in Test Studio for APIs and looks something like this:

Act

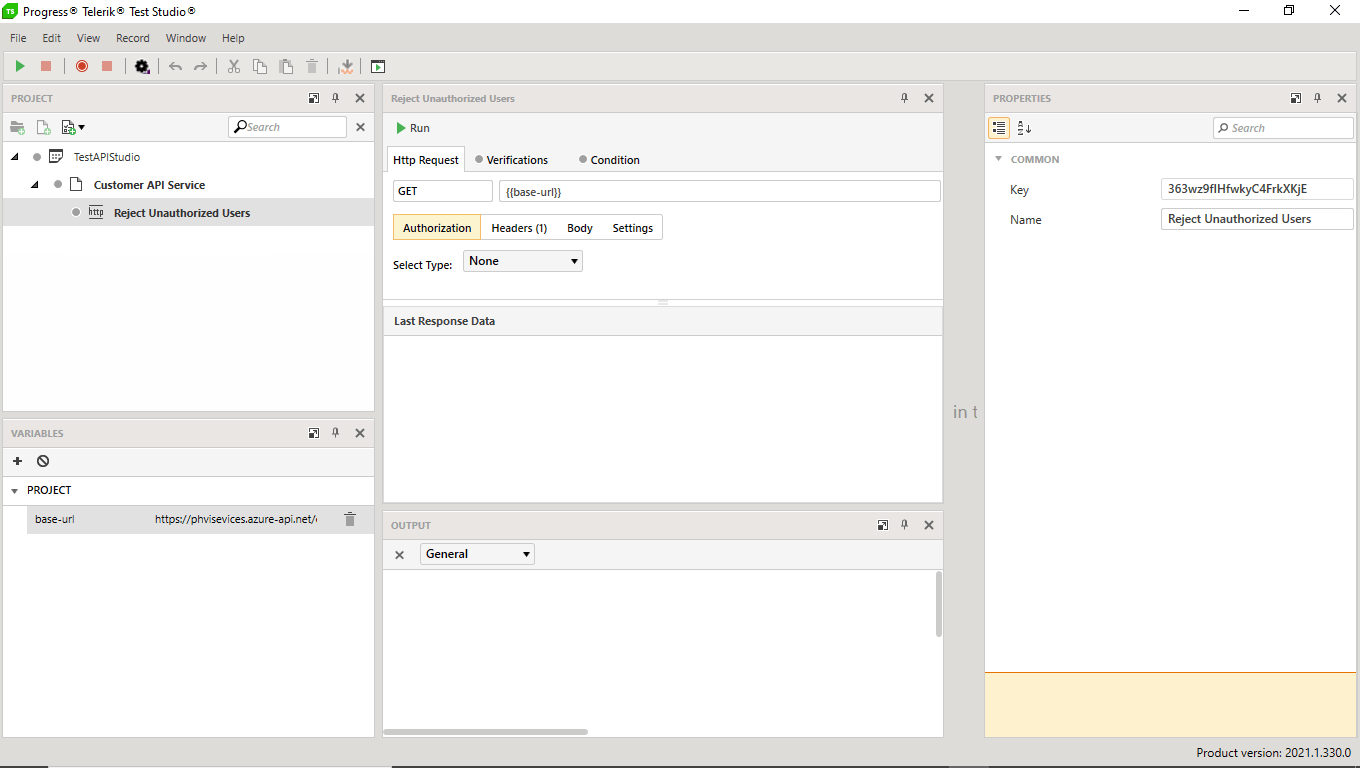

For services, a test’s Act phase consists of issuing an HTTP request using the base URL, an HTTP verb (GET, POST, etc.) and, potentially, some values in the request body and/or header.

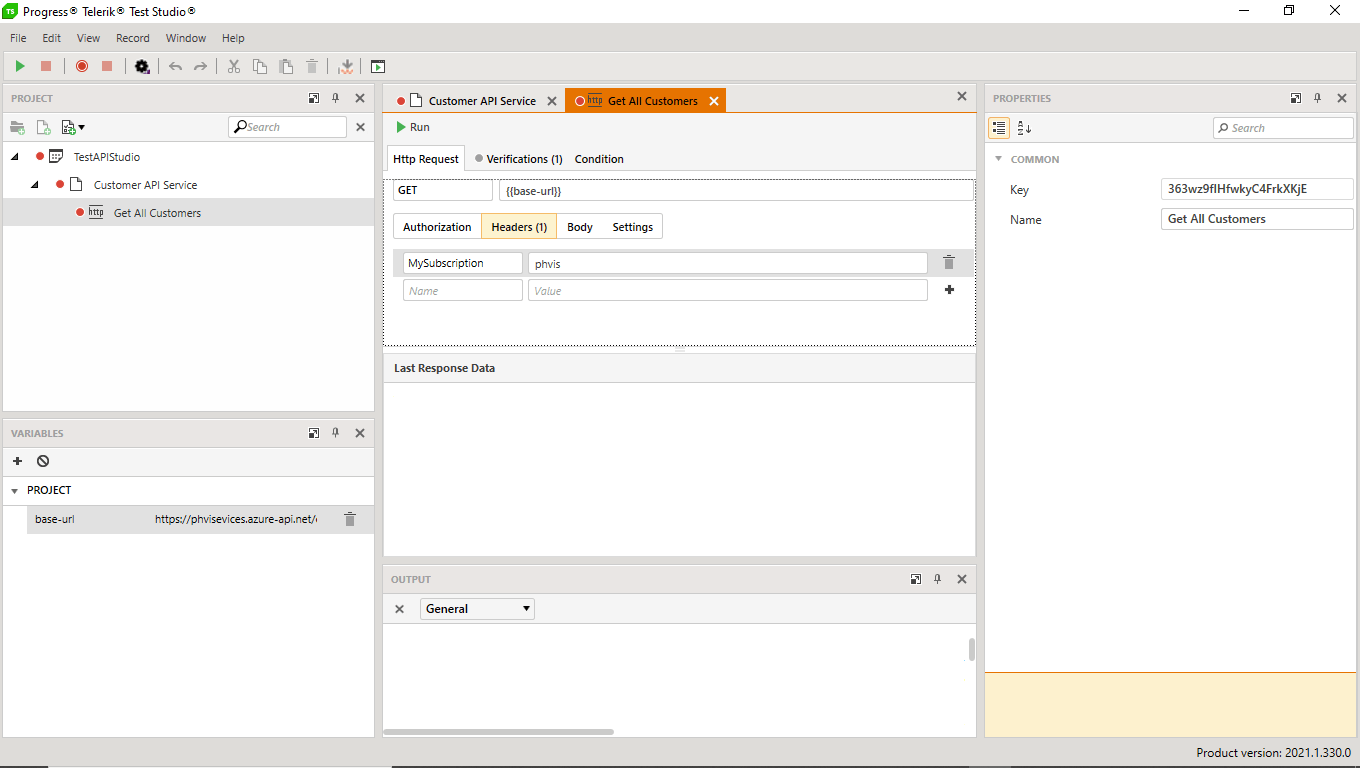

Generally, my first test is a simple GET request using the base URL (for my customer service, this would correspond to a “get all customers” request). In Test Studio for APIs, tests consist of one or more steps, including HTTP requests. So, to implement my first test in Test Studio for APIs, I would add an HTTP Request step to my project with its verb set to GET and its URL set to just my base-url variable. You can see that here (and, again, this takes about a minute to set up in Test Studio for APIs):
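Outside of Test Studio for APIs, the Act phase boils down to building and sending one HTTP request. This Python sketch, using only the standard library, sends a GET to the base URL of a throwaway local stub that stands in for the customer service; the stub, its data and the `send_request` helper are all hypothetical. One wrinkle worth handling: Python’s `urllib` raises an exception for 4xx and 5xx responses, so the helper returns those status codes instead, because an API test often expects exactly those responses.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# An opener with no proxies, so requests to the local stub go straight to it.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def send_request(method, url, headers=None, body=None, timeout=5.0):
    """Act phase: issue one HTTP request and return (status, headers, body_text).

    urllib raises HTTPError for 4xx/5xx responses; API tests usually want to
    inspect those responses, so they're returned instead of raised.
    """
    data = body.encode("utf-8") if body is not None else None
    request = urllib.request.Request(url, data=data, headers=headers or {}, method=method)
    try:
        with _OPENER.open(request, timeout=timeout) as response:
            return response.status, dict(response.headers), response.read().decode("utf-8")
    except urllib.error.HTTPError as error:
        return error.code, dict(error.headers), error.read().decode("utf-8")


class StubCustomerService(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the customer service: GET / lists all customers."""

    def do_GET(self):
        if self.path == "/":
            status, payload = 200, json.dumps([{"id": "A123", "name": "Acme"}]).encode("utf-8")
        else:
            status, payload = 404, b""
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep the demo's output quiet


# Port 0 lets the OS pick a free port for the stub.
server = HTTPServer(("127.0.0.1", 0), StubCustomerService)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_port}"
try:
    # "Get all customers": a GET against the base URL with no body.
    status, headers, body = send_request("GET", base_url + "/")
finally:
    server.shutdown()
    server.server_close()

print(status, json.loads(body))
```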

Assert

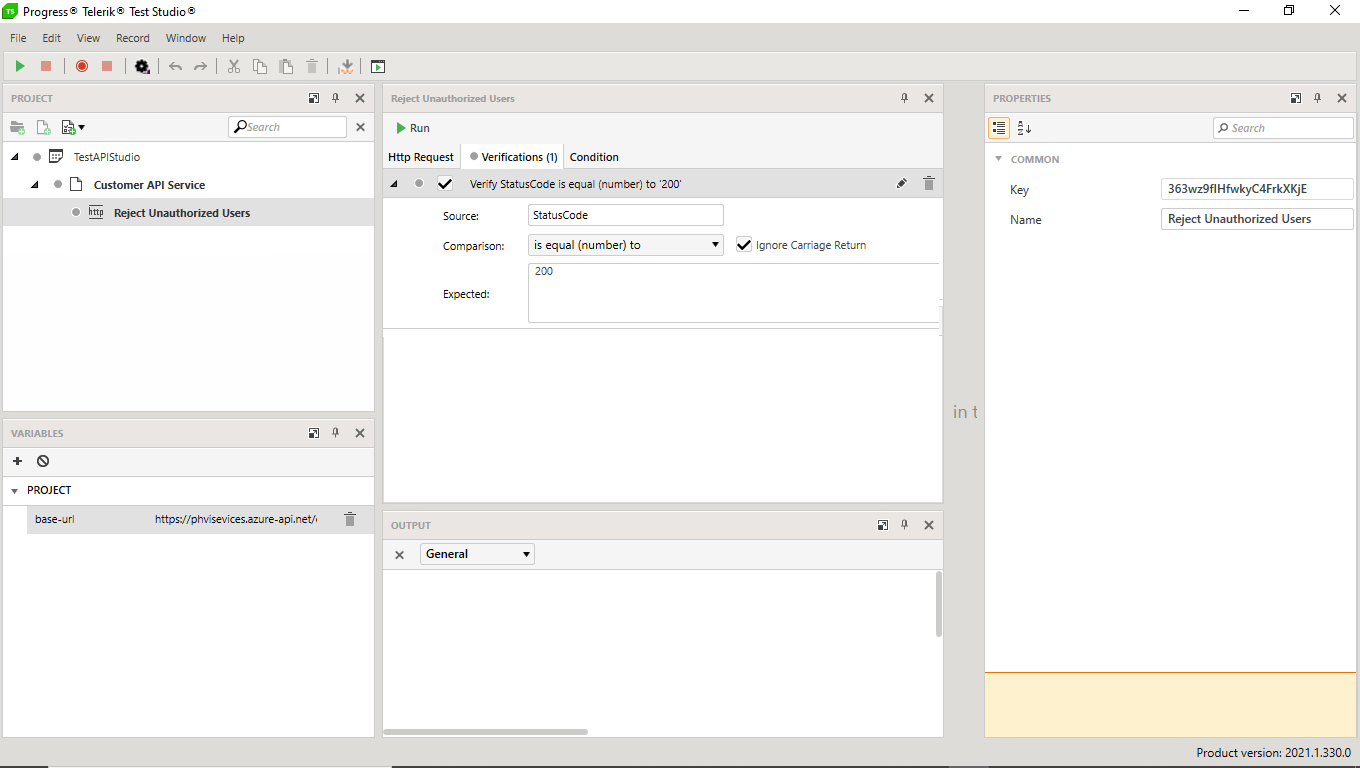

In an API test, the Assert phase consists of checking the HTTP response’s status code and, potentially, the values in the response’s body or headers. Test Studio for APIs supports this through settings in the verification tab for an HTTP request. For my initial test, I just want to check that the status code is equal to 200:
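The Assert phase can be sketched as a function that compares a response with what the test expects and collects every mismatch rather than stopping at the first one. `verify_response` and its `expected_fields` parameter (which checks top-level JSON properties only) are hypothetical; in Test Studio for APIs, the verification tab covers this ground.

```python
import json


def verify_response(status, body_text, expected_status, expected_fields=None):
    """Assert phase: return a list of failure messages (empty means the test passed).

    expected_fields maps top-level JSON property names to expected values.
    """
    failures = []
    if status != expected_status:
        failures.append(f"status: expected {expected_status}, got {status}")
    if expected_fields:
        try:
            body = json.loads(body_text)
        except json.JSONDecodeError as error:
            return failures + [f"body is not JSON: {error}"]
        for name, expected in expected_fields.items():
            if not isinstance(body, dict) or name not in body:
                failures.append(f"body: missing {name!r}")
            elif body[name] != expected:
                failures.append(f"body.{name}: expected {expected!r}, got {body[name]!r}")
    return failures


print(verify_response(200, '{"id": "A123"}', 200, {"id": "A123"}))  # []
print(verify_response(404, "", 200))  # ['status: expected 200, got 404']
```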

It might seem pointless at this point to run my test—after all, the service isn’t even created yet. However, running the tests before the service is created is the easiest way to determine that my test can detect failure (and a test that can’t detect failure is useless).

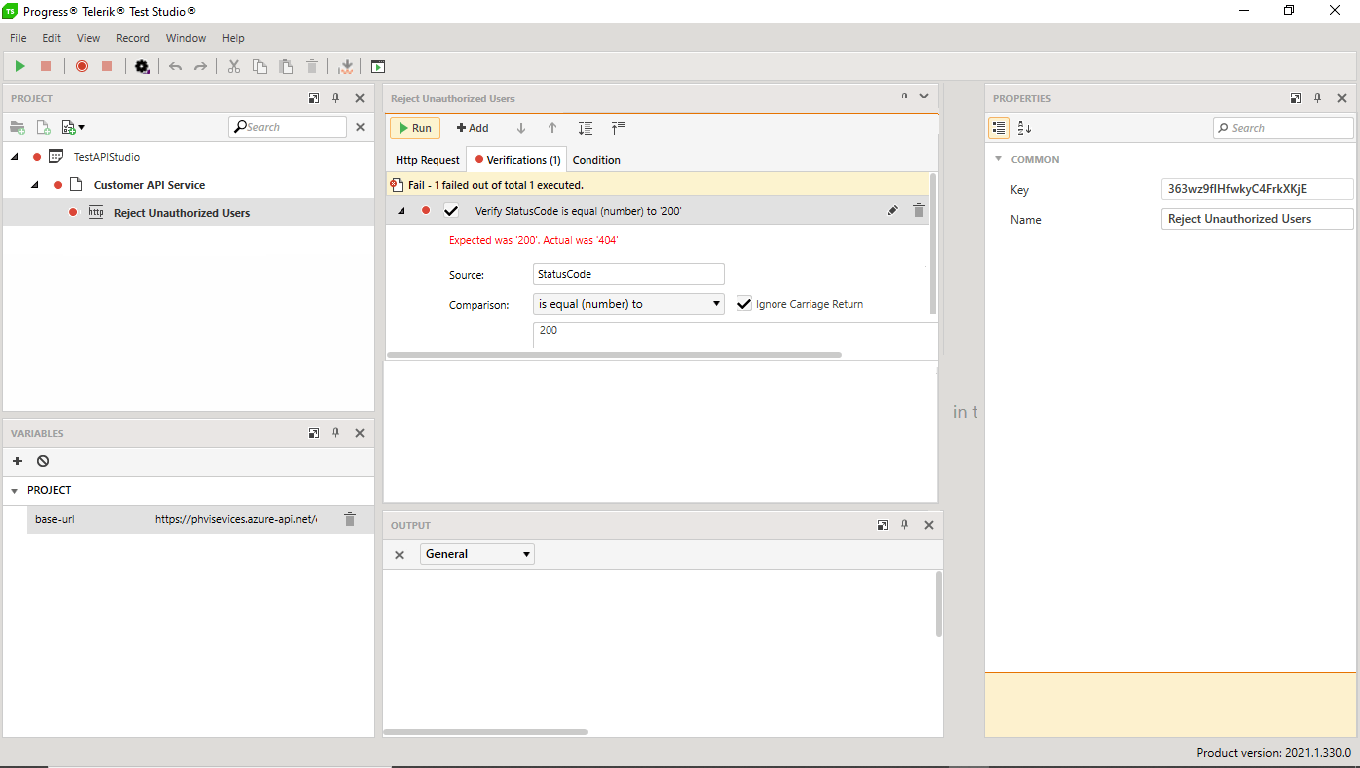

In Test Studio for APIs, I just click the Run button. Not surprisingly, my test fails with the traditional red stoplight dots beside the failing step, test and project:

I will, eventually, morph this test into something more useful (which is why I’ve given it the name “Reject Unauthorized Users”) but, for now, I’ve set up my test project and proved that I can tell the difference between success and failure. Ideally, this should take a few minutes at most.
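The point of running the test before the service exists is that “no service” must register as a failure. Here’s a minimal Python sketch of a status check that treats a connection error as a failed test rather than crashing or quietly passing; it targets a local port where nothing is listening to stand in for the not-yet-created service. `check_status` and `unused_local_url` are hypothetical helpers.

```python
import socket
import urllib.error
import urllib.request

# No proxies: the request should succeed or fail on its own merits.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def check_status(url, expected_status, timeout=2.0):
    """Return (passed, detail). No response at all counts as a failure, never a pass."""
    try:
        with _OPENER.open(url, timeout=timeout) as response:
            actual = response.status
    except urllib.error.HTTPError as error:
        actual = error.code
    except OSError as error:  # connection refused, DNS failure, timeout
        return False, f"no response: {error}"
    if actual != expected_status:
        return False, f"expected {expected_status}, got {actual}"
    return True, f"got {actual}"


def unused_local_url():
    """Hypothetical helper: a URL on a local port where nothing is listening."""
    with socket.socket() as sock:
        sock.bind(("127.0.0.1", 0))  # the OS picks a free port...
        port = sock.getsockname()[1]
    return f"http://127.0.0.1:{port}/"  # ...which is released again on close


passed, detail = check_status(unused_local_url(), 200)
print(passed, detail)  # passed is False: the missing service is detected
```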

Testing Authentication

I’m now ready to start defining my API. That will vary depending on what tool you’re using to create your service: If I’m working with ASP.NET, I’ll create my controllers (though without any code in my methods) and start running my service; if I’m working with Azure’s API Management, I’ll define the API and its initial operations.

At this point, what I’m testing for is whether I can reach my API at all. Assuming that I haven’t mistyped my base URL, this is also the test where I make sure that I’m handling authentication correctly: if I have authentication/authorization in place, I expect this unauthenticated request to get back a 401 status code.

I’ll change my Assert to check for a 401 status code so that the rejection shows up as “passing the test” (in Test Studio for APIs, I update the status code on the verification tab to 401). Now, when I run my test, I should get my first successful test and prove that my service locks out unauthorized users.

For my second test, I want to confirm that authorized users can access the service. Test Studio for APIs will generate authentication headers for me given a username and password (for Basic authentication) or a client id and secret (for OAuth). My second test just adds a simple subscription-type header to the request.
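Both kinds of header are simple to generate. This Python sketch builds an HTTP Basic `Authorization` header (Base64 of `username:password`, as RFC 7617 describes) and, assuming the service sits behind Azure API Management, a header using API Management’s default subscription-key header name, `Ocp-Apim-Subscription-Key`. The function names are hypothetical.

```python
import base64


def basic_auth_header(username, password):
    """Build an HTTP Basic Authorization header: 'Basic ' + base64('username:password')."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def subscription_key_header(key):
    """Header for a subscription key, using API Management's default header name."""
    return {"Ocp-Apim-Subscription-Key": key}


print(basic_auth_header("Aladdin", "open sesame"))
# {'Authorization': 'Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ=='}
```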

In the verification step for this test, I’ll check for a status code that indicates that I’ve finally reached my service. Again, this will vary depending on what tool you’re using to create your Web Service: With an ASP.NET Web Service, I’ll get a return code of 200; with Azure’s API Management, I’ll get a 500 status code (Internal Server Error).

At this point, I have tests that demonstrate that, without authentication, clients can’t access my service and, with authentication, they can.
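The pair of authentication tests can be sketched in Python against a hypothetical local stub that only admits requests carrying the expected subscription key. The stub, the key value `test-key` and the `status_of` helper are made up for illustration. The first check passes when it gets a 401; the second passes when it gets through with a 200 (the ASP.NET-style result; adjust the expected code to whatever your tool returns).

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

EXPECTED_KEY = "test-key"  # hypothetical credential for the stub service
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # no proxies


class StubSecuredService(BaseHTTPRequestHandler):
    """Hypothetical service that rejects requests without the expected key."""

    def do_GET(self):
        authorized = self.headers.get("Ocp-Apim-Subscription-Key") == EXPECTED_KEY
        self.send_response(200 if authorized else 401)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the demo's output quiet


def status_of(url, headers=None):
    """Return the response status, including 4xx/5xx codes that urllib raises."""
    request = urllib.request.Request(url, headers=headers or {})
    try:
        with _OPENER.open(request, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


server = HTTPServer(("127.0.0.1", 0), StubSecuredService)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_port}/"
try:
    # "Reject Unauthorized Users": here a 401 is the passing result.
    anonymous_status = status_of(url)
    # Second test: with credentials, the request reaches the service.
    authorized_status = status_of(url, {"Ocp-Apim-Subscription-Key": EXPECTED_KEY})
finally:
    server.shutdown()
    server.server_close()

print(anonymous_status, authorized_status)  # 401 200
```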

I’ll also add some tests for requests that aren’t supported by the API to prove that, for example, a read-only service won’t accept update requests and that the service rejects badly formatted requests. These tests will also check that I’m producing the right response when returning results for valid and invalid requests (in Test Studio for APIs, I can use JSONPath and XPath expressions to check my responses).
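Test Studio for APIs accepts JSONPath expressions for checking response bodies. To show the idea, this Python sketch resolves a deliberately tiny subset of JSONPath: the root `$`, `.name` steps and `[index]` steps. It isn’t a JSONPath implementation (no wildcards, filters or recursive descent), and `json_path_get` is a hypothetical name.

```python
import json
import re

# One path step: ".name" or "[index]".
_STEP = re.compile(r"\.([A-Za-z_][A-Za-z0-9_]*)|\[(\d+)\]")


def json_path_get(document, path):
    """Resolve a tiny JSONPath subset such as '$.customers[0].id'."""
    if not path.startswith("$"):
        raise ValueError("path must start with '$'")
    position, current = 1, document
    while position < len(path):
        match = _STEP.match(path, position)
        if match is None:
            raise ValueError(f"unsupported syntax at {path[position:]!r}")
        name, index = match.groups()
        current = current[name] if name is not None else current[int(index)]
        position = match.end()
    return current


body = json.loads('{"customers": [{"id": "A123", "name": "Acme"}, {"id": "B456", "name": "Bolt"}]}')
print(json_path_get(body, "$.customers[1].name"))  # Bolt
```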

As I build out my tests, an OpenAPI tool like Swagger can be helpful in generating the format for the body of my requests. An OpenAPI definition also includes JSON Schema-style descriptions of my requests and responses that I can use to confirm that they’re in the correct format (in Test Studio for APIs, I would add a coded step to validate my requests and responses against those schemas).
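In Test Studio for APIs, this would be a coded step; in Python, I’d normally reach for a full validator such as the `jsonschema` package. To keep the example self-contained, here’s a sketch that checks only four JSON Schema keywords (`type`, `required`, `properties` and `items`) against a hypothetical customer schema. Treat it as an illustration of what schema validation catches, not as a validator.

```python
# JSON Schema type names mapped to the Python types json.loads produces.
_JSON_TYPES = {
    "object": dict,
    "array": list,
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "null": type(None),
}


def schema_errors(instance, schema, where="$"):
    """Check instance against a small JSON Schema subset; return error messages."""
    expected = schema.get("type")
    if expected is not None:
        matches = isinstance(instance, _JSON_TYPES[expected])
        # In Python, bool is a subclass of int, but JSON true/false are not numbers.
        if isinstance(instance, bool) and expected in ("integer", "number"):
            matches = False
        if not matches:
            return [f"{where}: expected {expected}"]
    errors = []
    if isinstance(instance, dict):
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{where}: missing required property {name!r}")
        for name, subschema in schema.get("properties", {}).items():
            if name in instance:
                errors.extend(schema_errors(instance[name], subschema, f"{where}.{name}"))
    if isinstance(instance, list) and "items" in schema:
        for index, item in enumerate(instance):
            errors.extend(schema_errors(item, schema["items"], f"{where}[{index}]"))
    return errors


customer_schema = {
    "type": "object",
    "required": ["id", "name"],
    "properties": {"id": {"type": "string"}, "name": {"type": "string"}},
}
print(schema_errors({"id": "A123", "name": "Acme"}, customer_schema))  # []
print(schema_errors({"id": 123}, customer_schema))
# ["$: missing required property 'name'", '$.id: expected string']
```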

Testing Interfaces

While the obvious next step is to test the API’s actual functionality, at this point, I’ll probably move away from API testing, at least for a while. That may sound perverse, but my API generally exists for one of four purposes:

- Call methods on some objects

- Write an item to a queue

- Raise an event

- Call another API

In unit testing my API, I’m only responsible for confirming that I’m doing the right things when interfacing with those objects/queues/events/services. For example, where my API code calls objects, I’ll create mock objects (in the Telerik world, I would use JustMock) to confirm that:

- My API makes the right calls in the right order

- Parameters received by the API are correctly passed to those objects

- Outputs from the objects are converted into properly formatted responses

- Exceptions raised by the objects are properly formatted in responses and/or in logs
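JustMock is a .NET library, so to keep one language across these sketches I’m using Python’s standard `unittest.mock`, which plays the same role. The handler `get_customer` and its `repository` collaborator (with `open` and `find` methods) are hypothetical; the assertions map onto the bullets above: call order, parameters passed through, output formatting and exception handling.

```python
import json
import logging
from unittest import mock

logger = logging.getLogger("customer-api")  # hypothetical logger name


def get_customer(customer_id, repository):
    """Hypothetical API handler: opens the repository, looks up one customer,
    and returns (status, json_body)."""
    try:
        repository.open()
        customer = repository.find(customer_id)
    except LookupError:
        return 404, json.dumps({"error": f"customer {customer_id} not found"})
    except Exception:
        logger.exception("repository failure for customer %s", customer_id)
        return 500, json.dumps({"error": "internal error"})
    # Only the public fields go into the response.
    return 200, json.dumps({"id": customer["id"], "name": customer["name"]})


# Right calls, right order, right parameters, properly formatted output:
repository = mock.Mock()
repository.find.return_value = {"id": "A123", "name": "Acme", "credit_limit": 5000}
status, body = get_customer("A123", repository)
assert repository.mock_calls == [mock.call.open(), mock.call.find("A123")]
assert status == 200 and json.loads(body) == {"id": "A123", "name": "Acme"}

# Exceptions from the object become a formatted response and a log entry:
failing = mock.Mock()
failing.find.side_effect = RuntimeError("database unavailable")
with mock.patch.object(logger, "exception") as log_exception:
    status, body = get_customer("A123", failing)
assert status == 500 and json.loads(body) == {"error": "internal error"}
log_exception.assert_called_once()
```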

Where my service writes to a queue or raises an event, I’ll confirm that:

- The right event is raised

- Data is written to the right queue

- Errors in raising the event or writing to the queue are correctly reported in responses or logs

- The data passed to the event or written to the queue is backward-compatible with what my API has done in the past
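The same mocking approach works for queues. This Python sketch assumes a hypothetical queue client with a `send(queue_name, message)` method and a hypothetical `create_customer` handler; the mock confirms that the message went to the right queue with the right data and that a failed write shows up in the response (the 503 status is my choice, not a rule).

```python
import json
from unittest import mock

CUSTOMER_CREATED_QUEUE = "customer-created"  # hypothetical queue name


def create_customer(payload, queue_client):
    """Hypothetical handler: announces a new customer on a queue; returns (status, json_body)."""
    message = json.dumps({"customerId": payload["id"], "name": payload["name"]})
    try:
        queue_client.send(CUSTOMER_CREATED_QUEUE, message)
    except ConnectionError as error:
        # A failed write must show up in the response, not vanish.
        return 503, json.dumps({"error": f"could not queue customer: {error}"})
    return 202, json.dumps({"id": payload["id"]})


# The right queue gets the right data:
queue = mock.Mock()
status, _ = create_customer({"id": "A123", "name": "Acme"}, queue)
queue.send.assert_called_once()
queue_name, raw_message = queue.send.call_args.args
assert status == 202 and queue_name == CUSTOMER_CREATED_QUEUE
assert json.loads(raw_message) == {"customerId": "A123", "name": "Acme"}

# Errors writing to the queue are reported in the response:
broken = mock.Mock()
broken.send.side_effect = ConnectionError("queue unreachable")
status, body = create_customer({"id": "A123", "name": "Acme"}, broken)
assert status == 503 and "queue unreachable" in json.loads(body)["error"]
```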

In Test Studio for APIs, I would use coded steps to check for those conditions.

But that’s all my API unit testing is responsible for. It isn’t responsible for confirming that the objects or Web Services my API calls, or the processes that respond to my events or read from my queues, are doing the right things. Where I am calling another service, someone should be doing the API unit testing on that other Web Service using the same kind of strategy I’ve outlined here. Someone should also be unit testing the objects my API calls and the processes that read from my queue or respond to my event. Those tests may even be run with a different tool (in the Telerik world, that would be Test Studio).

And, in unit testing my API, I’m also limited in what results I can check for. Where my service is raising an event or writing to a queue, my API could have a potentially infinite number of processes reading from those queues or responding to those events, and I may not even be aware of many of those processes. It’s impossible for me to ensure that my service is doing the “right” thing by checking that every follow-on process works. In these scenarios, my standard for “my API is doing the right thing” is “whatever my API did before.”
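One way to turn “whatever my API did before” into an automated check is to save a message produced by the previous version and compare the shape of each new message against it. This Python sketch (a hypothetical `compatibility_problems` function, not a feature of Test Studio for APIs) allows added fields but flags removed fields and changed JSON types. It compares only the first element of arrays, which assumes that arrays hold items of one shape.

```python
def _json_type(value):
    """Name the JSON type of a value produced by json.loads."""
    if isinstance(value, bool):  # check before int: bool is an int subclass
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        return "string"
    if value is None:
        return "null"
    if isinstance(value, list):
        return "array"
    if isinstance(value, dict):
        return "object"
    raise TypeError(f"not a JSON value: {value!r}")


def compatibility_problems(previous, current, where="$"):
    """List ways `current` could break consumers that relied on `previous`."""
    old_type, new_type = _json_type(previous), _json_type(current)
    if old_type != new_type:
        return [f"{where}: type changed from {old_type} to {new_type}"]
    problems = []
    if old_type == "object":
        for key, old_value in previous.items():
            if key not in current:
                problems.append(f"{where}.{key}: removed")
            else:
                problems.extend(compatibility_problems(old_value, current[key], f"{where}.{key}"))
    elif old_type == "array" and previous and current:
        problems.extend(compatibility_problems(previous[0], current[0], f"{where}[0]"))
    return problems


recorded = {"customerId": "A123", "name": "Acme"}  # saved from the previous version
print(compatibility_problems(recorded, {"customerId": "A124", "name": "Bolt", "email": "b@example.com"}))  # []
```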

Integration Testing

Don’t get me wrong: Eventually, I do have to prove that my API works with those other processes. But that’s the responsibility of integration testing (where I’m manipulating objects or calling a Web Service) or end-to-end testing (where I’m raising an event or writing to a queue).

I’ll return to API testing for integration testing but, because I’m looking further down the processing chain, I’m going to need more complicated tests: for example, tests that wait for the whole process to complete and then check the results. In Test Studio for APIs, I’d create multistep tests that include a Wait step to pause my test for some period of time before checking to see if my expected result has wound its way through the system.
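A fixed Wait step has to be long enough for the slowest run. A common alternative, swapped in here and not something the Wait step itself does, is to poll: check for the expected result repeatedly and give up after a timeout. Here’s a minimal Python sketch, with `wait_until` as a hypothetical helper and a timer standing in for a result that arrives later.

```python
import time


def wait_until(condition, timeout=30.0, interval=0.5):
    """Poll condition() until it returns a truthy value or timeout seconds pass.

    Returns the truthy value, or raises TimeoutError so the test fails with a
    clear message when the expected result never arrives.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Stand-in for "the result has wound its way through the system" after ~0.2 seconds.
start = time.monotonic()
print(wait_until(lambda: time.monotonic() - start > 0.2 and "arrived", timeout=5, interval=0.05))
```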

So I really have three sets of API tests: My initial unit tests, my integration tests and my end-to-end tests. As a result, I want my testing package to let me organize those tests into separate groups (in Test Studio for APIs, I can use folders to organize my tests within my projects or create separate projects for the different kinds of tests).

You can keep your API testing under control by recognizing when you’re unit testing your API and when you’re using your API for integration or end-to-end testing. And, when you are unit testing your API, you’re just testing your API and not the processes behind it.

Peter Vogel

Peter Vogel is both the author of the Coding Azure series and the instructor for Coding Azure in the Classroom. Peter’s company provides full-stack development from UX design through object modeling to database design. Peter holds multiple certifications in Azure administration, architecture, development and security and is a Microsoft Certified Trainer.