What Is DeepSeek? Dive in Using DeepSeek, .NET Aspire and Blazor


A new AI model has taken the tech world, and the actual world, by storm.

It performs close to, or better than, the GPT-4o, Claude and Llama models. It was developed at a cost of $1.3 billion (rather than the originally reported $6 million), using clever engineering instead of top-tier GPUs. Even better, it was shipped as open source, allowing anyone in the world to understand it, download it and modify it.

Have we achieved the democratization of AI, where the power of AI can be in the hands of many and not the few big tech companies who can afford billions of dollars in investment?

Of course, it’s not that simple. Since Chinese startup DeepSeek released its latest model, it has disrupted stock markets, scared America’s Big Tech giants and incited TMZ-level drama across the tech space. To wit: Are American AI companies overvalued? Can competitive models truly be built at a fraction of the cost? Is this our Sputnik moment in the AI arms race? (I don’t think NASA was able to fork the Sputnik project on GitHub.)

In a future article, I’ll take a deeper dive into DeepSeek itself and its programming-focused model, DeepSeek Coder. For now, let’s get our feet wet with DeepSeek. Because DeepSeek is built on open source, we can download the models locally and work with them.

Recently, Progress’ own Ed Charbeneau led a live stream on running DeepSeek AI with .NET Aspire. In this post, I’ll take a similar approach and walk you through how to get DeepSeek AI working as he did in the stream.

Note: This post gets us started; make sure to watch Ed’s stream for a deeper dive.

Our Tech Stack

For our tech stack, we’ll be using .NET Aspire. .NET Aspire is an opinionated, cloud-ready stack built for distributed .NET applications. For our purposes today, we’ll be using it to get up and running quickly and to easily manage our containers. I’m not doing .NET Aspire justice, with all its power and capabilities: Check out the Microsoft documentation to learn more.

Before we get started, make sure you have the following:

- Docker (to get up and running on Docker quickly, Docker Desktop is a great option)

- Visual Studio 2022

- .NET 8 or later

- A basic knowledge of C#, ASP.NET Core and containers

Picking a Model

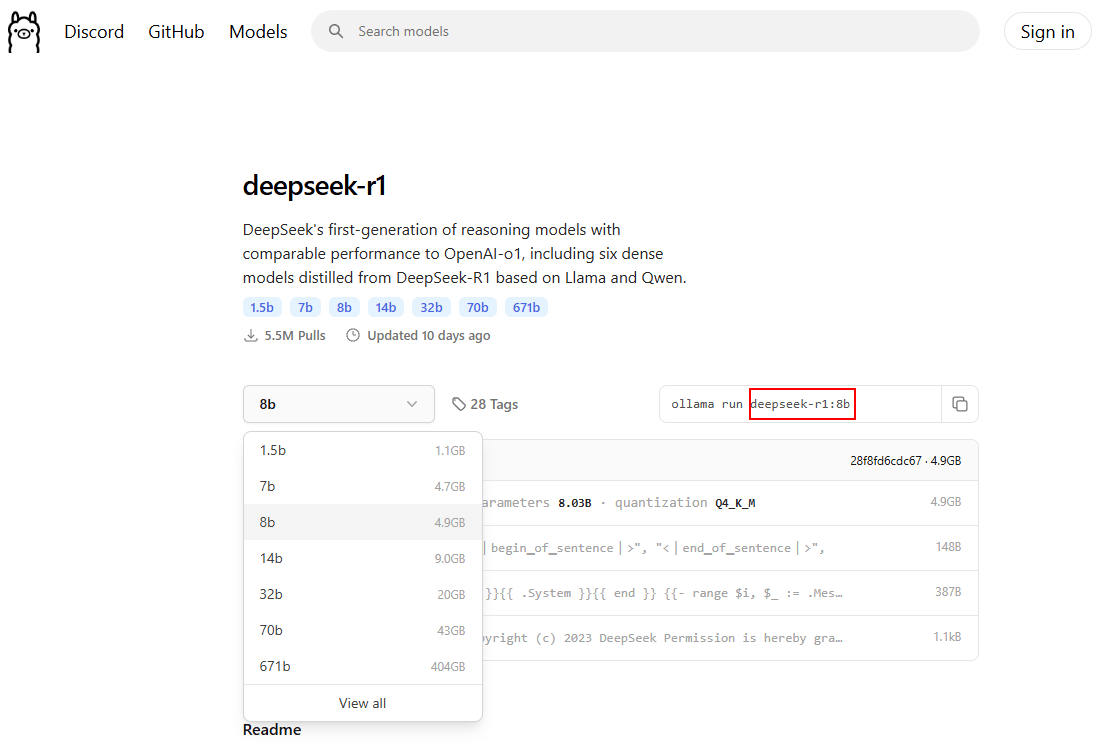

To run models locally on our system, we’ll be using Ollama, an open-source tool that allows us to run large language models (LLMs) on our local system. Head over to ollama.com and search for deepseek.

You might be compelled to install deepseek-v3, the new hotness, but it also has a 404 GB download size. Instead, we’ll be using the deepseek-r1 model. It’s less advanced but good enough for testing—it also uses less space, so you don’t need to rent a data center to use it.

It’s a tradeoff between parameter size and download size. Pick the one that both you and your machine are comfortable with. In this demo, I’ll be using 8b, with a manageable 4.9 GB download size. Take note of the flavor you are using, as we’ll need to put it in our Program.cs soon.

Set Up the Aspire Project

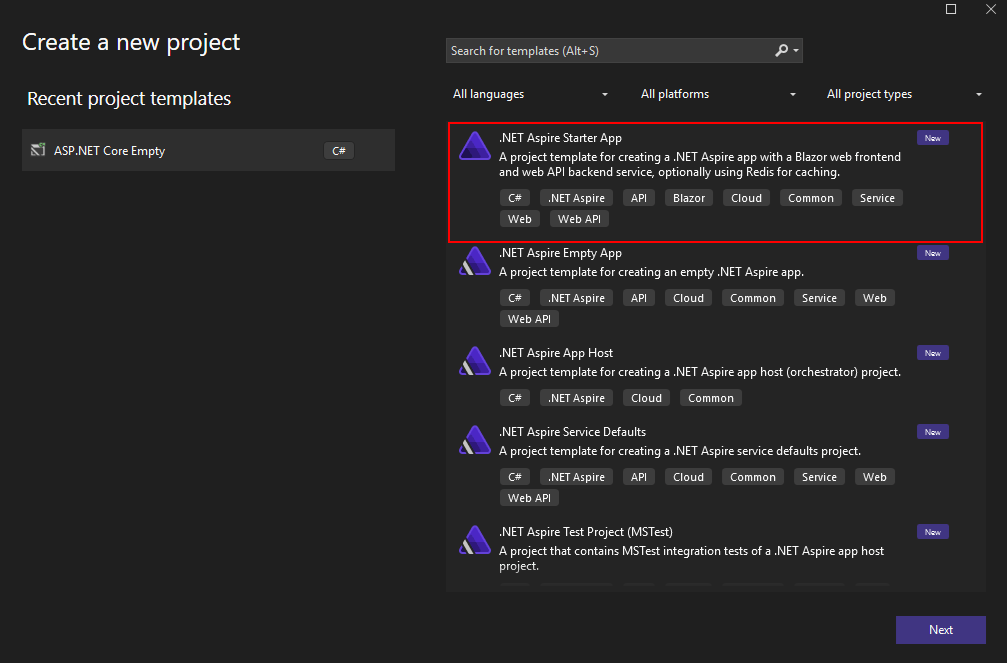

Now, we can create a new Aspire project in Visual Studio.

Launch Visual Studio 2022 and select the Create a new project option.

Once the project templates display, search for aspire.

Select the .NET Aspire Starter App template, and click Next.

Then, click through the prompts to create a project. If you want to follow along, we are using .NET 9.0 and have named the project DeepSeekDemo.

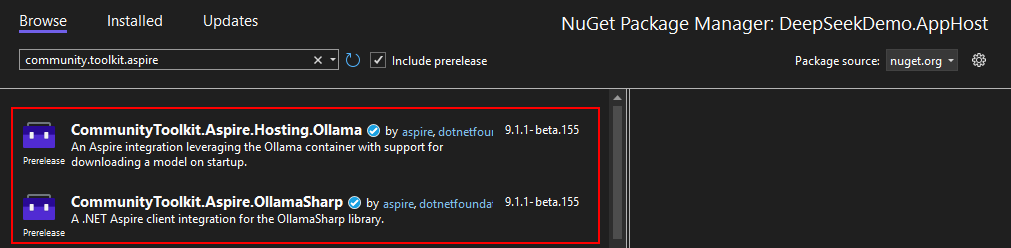

Right-click the DeepSeekDemo.AppHost project and click Manage NuGet Packages….

Search for and install the following NuGet packages. (If you prefer, you can also do it from the .NET CLI or the project file.)

- CommunityToolkit.Aspire.Hosting.Ollama

- CommunityToolkit.Aspire.OllamaSharp

We’ll be using the .NET Aspire Community Toolkit Ollama integration, which allows us to easily add Ollama models to our Aspire application.

Now that everything is installed, you can navigate to the Program.cs file in that same project and replace it with the following.

```csharp
var builder = DistributedApplication.CreateBuilder(args);

var ollama = builder.AddOllama("ollama")
    .WithDataVolume()
    .WithGPUSupport()
    .WithOpenWebUI();

builder.Build().Run();
```

Here’s a breakdown of what the AddOllama extension method does:

- AddOllama adds an Ollama container to the application builder. With that in place, we can add models to the container. These models download and run when the container starts.

- WithDataVolume allows us to store the model in a Docker volume, so we don’t have to continually download it every time.

- If you are lucky enough to have GPUs locally, the WithGPUSupport call uses those.

- The WithOpenWebUI call allows us to talk to our chatbot using the Open WebUI project. This is served by a Blazor front end.

Finally, let’s add a reference to our DeepSeek model so we can download and use it. We can also choose to host multiple models down the line.

```csharp
var builder = DistributedApplication.CreateBuilder(args);

var ollama = builder.AddOllama("ollama")
    .WithDataVolume()
    .WithGPUSupport()
    .WithOpenWebUI();

var deepseek = ollama.AddModel("deepseek-r1:8b");

builder.Build().Run();
```
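If you do decide to host more than one model, or to hand the model to another project in the solution, the AppHost could look roughly like the sketch below. Treat it as an illustration rather than the setup from Ed’s stream: the llama3.2 model is only an example of a second model, and the Projects.DeepSeekDemo_Web reference assumes you kept the web project that the Starter App template generates and that the AppHost still references it.

```csharp
var builder = DistributedApplication.CreateBuilder(args);

var ollama = builder.AddOllama("ollama")
    .WithDataVolume()
    .WithGPUSupport()
    .WithOpenWebUI();

// The model used in this walkthrough.
var deepseek = ollama.AddModel("deepseek-r1:8b");

// Assumption: a second model, added purely for illustration.
// Both models then show up in the Open WebUI model picker.
var llama = ollama.AddModel("llama3.2");

// Assumption: the template's web project is still part of the solution.
// WithReference passes the model's connection details to that project.
builder.AddProject<Projects.DeepSeekDemo_Web>("webfrontend")
    .WithReference(deepseek);

builder.Build().Run();
```

Each extra model is another download on first run, so the WithDataVolume call matters even more here.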

Explore the Application

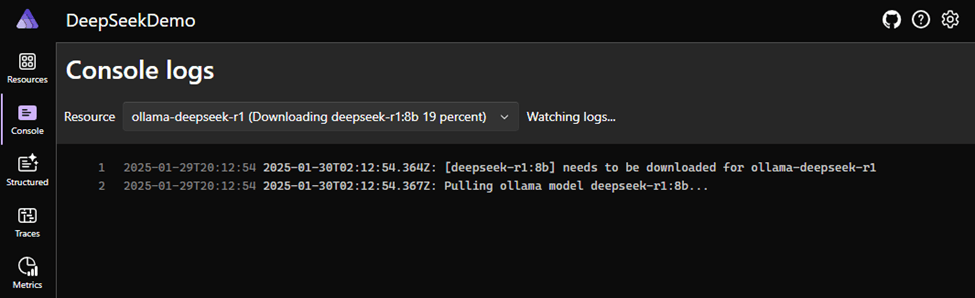

Let’s run the application! It’ll take a few minutes for all the containers to spin up. While you’re waiting, you can click over to the logs.

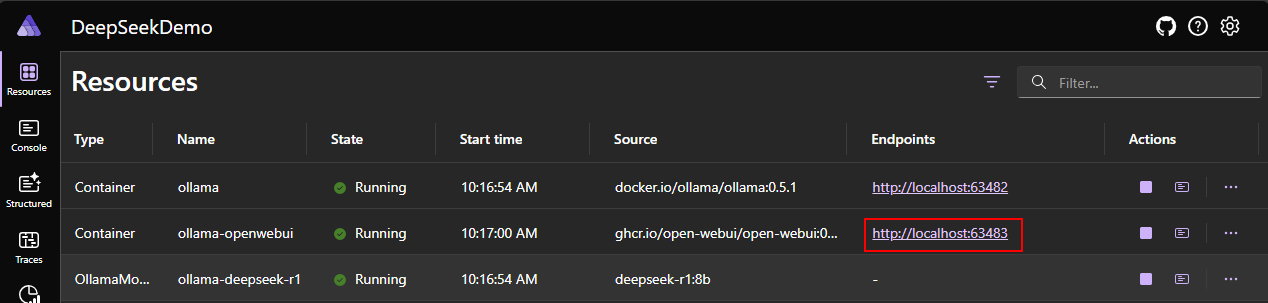

Once all three containers have a state of Running, click into the endpoint for the ollama-openweb-ui container.

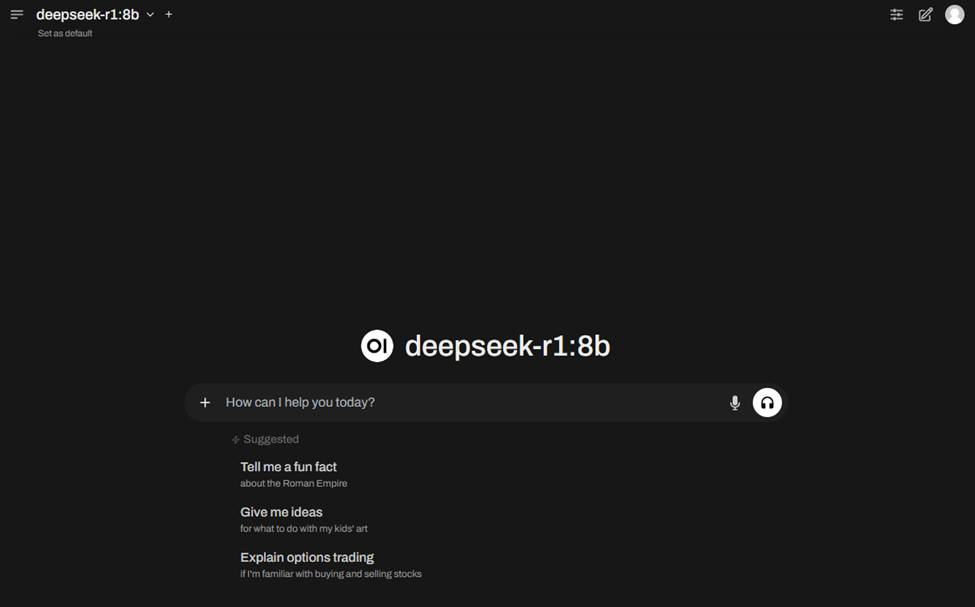

Once there, select the DeepSeek model and you’ll be ready to go.

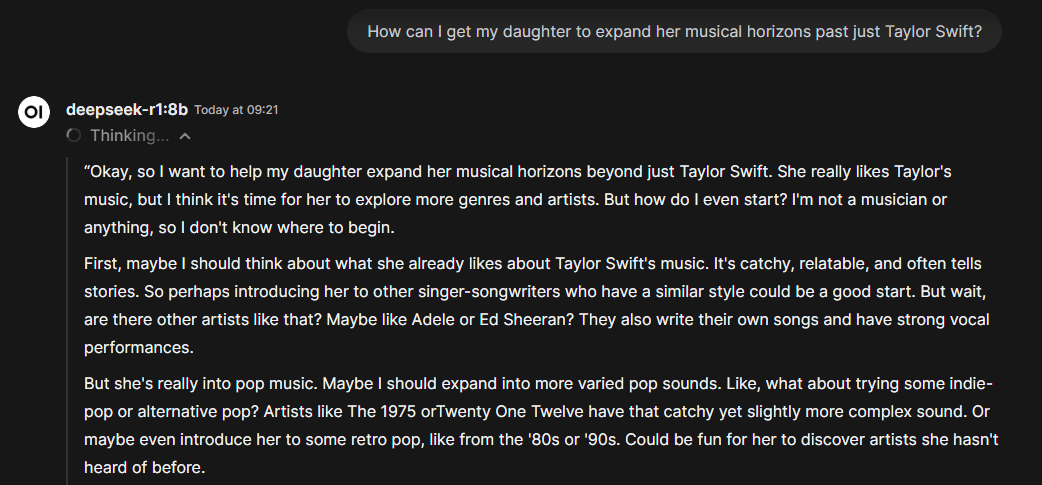

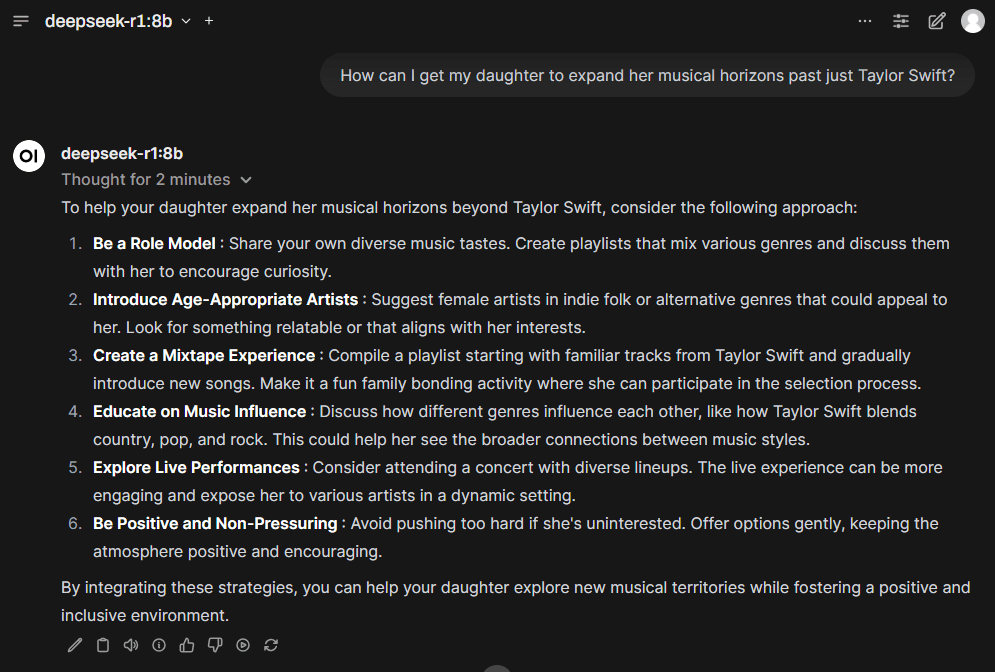

Let’s try it out with a query. I entered an oddly specific and purely hypothetical query: how can a tired parent persuade his daughter to expand her musical tastes beyond just Taylor Swift? (Just saying: the inevitable Kelce/Swift wedding will probably be financed by all my Spotify listens.)

You’ll notice right away something you don’t see with many other models: It’s walking you through its thought process before sending an answer. Look for this feature to be quickly “borrowed” by its competitors.
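If you later read the model’s raw output in your own code instead of through Open WebUI, you will likely see that thought process as text wrapped in `<think>` and `</think>` tags ahead of the answer. The sketch below shows one way to separate the two. SplitReasoning is a hypothetical helper name, the sample reply is made up, and it assumes at most one think block at the start of the reply.

```csharp
using System;

// Hypothetical helper: splits a raw deepseek-r1 reply into its
// reasoning (the text inside <think>...</think>) and the final answer.
static (string Reasoning, string Answer) SplitReasoning(string reply)
{
    const string open = "<think>";
    const string close = "</think>";

    int start = reply.IndexOf(open, StringComparison.Ordinal);
    int end = reply.IndexOf(close, StringComparison.Ordinal);

    // No complete think block: treat the whole reply as the answer.
    if (start < 0 || end < start)
        return (string.Empty, reply.Trim());

    int reasoningStart = start + open.Length;
    string reasoning = reply.Substring(reasoningStart, end - reasoningStart).Trim();
    string answer = reply.Substring(end + close.Length).Trim();
    return (reasoning, answer);
}

// Made-up sample reply, for illustration only.
var (reasoning, answer) = SplitReasoning(
    "<think>She likes pop hooks.</think>Try a shared playlist.");

Console.WriteLine($"Reasoning: {reasoning}"); // Reasoning: She likes pop hooks.
Console.WriteLine($"Answer: {answer}");       // Answer: Try a shared playlist.
```

Keeping the reasoning separate lets you log or hide it while showing users only the answer.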

After a minute or two, I have an answer from DeepSeek.

Next Steps

With DeepSeek set up in your local environment, the world is yours. Check out Ed’s DeepSeek AI with .NET Aspire demo to learn more about integrating it and any potential drawbacks.

See also:

- Running DeepSeek locally with Just a Browser live stream with Ed Charbeneau

- Local GenAI Processing: WebLLM with Blazor WebAssembly blog post by Ed

Any thoughts on DeepSeek, AI or this article? Feel free to leave a comment. Happy coding!

Dave Brock

Dave Brock is a software engineer, writer, speaker, open-source contributor and former Microsoft MVP. With a focus on Microsoft technologies, Dave enjoys advocating for modern and sustainable cloud-based solutions.