Best Practices for Data Retrieval in Telerik Reporting

Every report needs data to go live. See how to configure the data sources in your report to ensure the best performance and learn some tricks that will help with report authoring.

Creating a report basically means obtaining a chunk of data, organizing it according to a given set of rules and presenting it in a visual manner. Reports are already an integral part of current business processes: whether it is a simple invoice or an elaborate sales chart, virtually every collection of facts and figures can be represented in a visually appealing way. But before getting to the visual part of things, we need to make sure the basis of our reports, the data, is retrieved and retained in the best possible way.

We on the Telerik Reporting team understand the need for a reliable and easy way to access the data your report needs. That’s why I will explain below the different approaches for data retrieval and their variety, from database connections through collections of business objects up to JSON-formatted entities delivered from a web service.

The content below assumes you’re already familiar with Telerik Reporting. If you’re not, please consider checking our Getting Started articles first. I will go a bit more technical and provide under-the-hood details and examples, because I believe that a better understanding of how the data gets obtained and processed by the reporting engine will help you build your reports faster and use them effortlessly in various applications.

Connecting to Data

Database Connections

Telerik Reports can connect to data from various sources, but the most commonly used is the database. The component that performs the actual connection between the report and the database is named SqlDataSource. Its wizard provides a simple and intuitive interface that helps to create and test a connection to the selected database in a matter of seconds.

The SqlDataSource is database-agnostic, i.e. it does not have dedicated routines for MSSQL, Oracle or MySQL databases. Instead it relays the commands and parameters through a common interface, provided by the DbProviderFactory class. While configuring the SqlDataSource, you select a provider name, which is used by the DbProviderFactories class to create the corresponding DbProviderFactory. The available DbProviders are listed in a configuration file, which can be the common machine.config file or just the configuration file of the .NET application. Here’s how the list with the DbProviderFactory entries in the machine.config looks:

<DbProviderFactories>
  <add name="Oracle Data Provider for .NET" invariant="Oracle.DataAccess.Client"
       description="Oracle Data Provider for .NET" type="Oracle.DataAccess.Client.OracleClientFactory, Oracle.DataAccess, Version=2.112.3.0, Culture=neutral, PublicKeyToken=89b483f429c47342" />
  <add name="Odbc Data Provider" invariant="System.Data.Odbc"
       description=".Net Framework Data Provider for Odbc" type="System.Data.Odbc.OdbcFactory, System.Data, Version=2.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" />
  <add name="OleDb Data Provider" invariant="System.Data.OleDb"
       description=".Net Framework Data Provider for OleDb" type="System.Data.OleDb.OleDbFactory, System.Data, Version=2.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" />
  <add name="SqlClient Data Provider" invariant="System.Data.SqlClient"
       description=".Net Framework Data Provider for SqlServer" type="System.Data.SqlClient.SqlClientFactory, System.Data, Version=2.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" />
  <add name="MySQL Data Provider" invariant="MySql.Data.MySqlClient"
       description=".Net Framework Data Provider for MySQL" type="MySql.Data.MySqlClient.MySqlClientFactory, MySql.Data, Version=8.0.13.0, Culture=neutral, PublicKeyToken=c5687fc88969c44d" />
</DbProviderFactories>

Usually every database provider or driver installed on the machine adds its entry here. This way the provider is visible to applications that need to access a database through a more generic approach. So when you pick the MySQL Data Provider from the combo-box in the SqlDataSource wizard, the wizard stores the invariant name of that provider and uses it for creating the specific implementations of DbConnection, DbCommand and the rest of the classes needed to access that database.

Using such an approach allows us to connect to virtually any database that has an ODBC, OleDB or ADO.NET data provider. It is not even necessary to install it on the machine—it just needs to be registered in the <DbProviderFactories> section of the configuration file for applications that use .NET Framework. However, in .NET Standard the DbProviderFactories class does not exist and its entries need to be manually registered, as explained in this KB article.
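The lookup from an invariant name to a concrete factory can be sketched in a language-neutral way. The Python snippet below only illustrates the pattern and is not the .NET API: `ProviderRegistry` and the invariant name `Example.Sqlite` are hypothetical, and the standard-library `sqlite3` module stands in for a real database provider.

```python
import sqlite3
from typing import Callable, Dict

ConnectionFactory = Callable[[str], sqlite3.Connection]


class ProviderRegistry:
    """Hypothetical provider table keyed by invariant name."""

    def __init__(self) -> None:
        self._factories: Dict[str, ConnectionFactory] = {}

    def register(self, invariant: str, factory: ConnectionFactory) -> None:
        # Mirrors adding an entry to the provider list in a config file.
        self._factories[invariant] = factory

    def get_factory(self, invariant: str) -> ConnectionFactory:
        # Mirrors resolving the provider from the stored invariant name.
        try:
            return self._factories[invariant]
        except KeyError:
            raise LookupError(f"No provider registered as '{invariant}'") from None


registry = ProviderRegistry()
registry.register("Example.Sqlite", sqlite3.connect)

# The caller only knows the invariant name, not the concrete provider.
connection = registry.get_factory("Example.Sqlite")(":memory:")
answer = connection.execute("SELECT 1 + 1").fetchone()[0]
connection.close()
```

The point of the design is that the calling code never names the concrete provider, so a provider that is registered but missing from the table fails early with a clear lookup error.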

Often different data providers for the same database coexist on the same machine. You can have both an ODBC driver and an ADO.NET provider for MSSQL Server. There is no general rule that states which one you should prefer. For example, the System.Data.SqlClient provider is designed to provide optimal performance when connecting to SQL Server 2000 and later, but the ODBC driver can work with older databases. In some cases the newer driver versions lack functionality that our application needs. Such is the case with the MySql.Net Connector provider, which for a while did not implement a method named CreateCommandBuilder that our engine uses to obtain the database schema. In the versions before 6.4.6 the method was present, then it got deprecated, and from version 8.0 it got reintroduced.

The best advice in such a situation would be to measure the capabilities and performance of each available database provider to make sure it suits your needs. Of course, it’s always better to have options to choose from and that’s why our product ships with more than 20 ODBC drivers that can be used to connect to the most popular databases.

Telerik Reporting offers a few other components that allow connecting to a database, but they target a specific database or technology and are not as popular as the SqlDataSource. These components include:

- CubeDataSource: used to connect to an OLAP cube data model through an MDX query;
- OpenClientDataSource: specifically designed to be used with OpenEdge AppServer ABL procedures;
- OpenAccessDataSource: provides data through an OpenAccess data model;
- EntityDataSource: allows connecting to an existing ObjectContext or DbContext and accessing its data model. A real database is not mandatory as the data storage for this component. The current implementation works with .NET Framework and cannot be used in projects that employ EntityFrameworkCore.

Although these components meet particular business needs, they share common logic with the SqlDataSource and most of the tips and suggestions provided here are valid for them as well.

Web Service Connections

Although the scenarios where the data is obtained from a database are still the most common ones, the cases where the database is not directly accessible or is even missing are getting more and more traction. In these setups a web service accepts the requests and returns data objects, formatted as JSON, XML or text. This approach has significant benefits in terms of security and configuration, and using a standardized interface to access the data provides for easier adoption. Telerik Reporting has a dedicated component to serve that purpose—the WebServiceDataSource component.

Similarly to the other data sources, it can be configured via its wizard to connect to a web service, authenticate if needed, request the necessary data and parse the response to a set of data objects. The WebServiceDataSource configuration allows you to fine-tune the URL and the request body using tokens that will be substituted at runtime with actual values from report parameters or static strings.

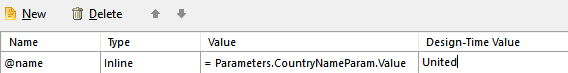

As a real-life example, let’s take a look at the website restcountries.eu that provides statistics for all the countries in the world via a RESTful API. The endpoint that returns all the countries is https://restcountries.eu/rest/v2/all, but if our report needs to show a subset of countries by given name, the endpoint we need to use is https://restcountries.eu/rest/v2/name/{name}. In this case the WebServiceDataSource’s ServiceUrl would look like this: https://restcountries.eu/rest/v2/name/@name, where the “@” character denotes a token that will be substituted. The actual token substitution is configured in the WebServiceDataSource parameters collection, and in the current case we’ll have an inline parameter that obtains its value from a report parameter.

As you can see, it’s important to pick the right endpoint and request just the necessary info rather than fetch data for all the countries and filter it later on the client side.
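To make the token idea concrete, here is a minimal Python sketch of substituting an `@name` token in a service URL. It is not the WebServiceDataSource implementation: the `substitute_tokens` helper is hypothetical, and percent-encoding the value is my own assumption about what a sensible substitution should do.

```python
import re
from urllib.parse import quote


def substitute_tokens(service_url: str, values: dict) -> str:
    """Replace every @token in the URL with its percent-encoded value."""

    def replace(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"No value supplied for token '@{name}'")
        # Encode the value so spaces and slashes cannot break the URL path.
        return quote(str(values[name]), safe="")

    return re.sub(r"@(\w+)", replace, service_url)


# The value would normally come from a report parameter.
url = substitute_tokens(
    "https://restcountries.eu/rest/v2/name/@name",
    {"name": "united kingdom"},
)
print(url)  # https://restcountries.eu/rest/v2/name/united%20kingdom
```

Because the token is resolved before the request is sent, the service returns only the matching countries and nothing has to be filtered afterwards.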

The component also provides enough authentication options to cover simple and more complicated scenarios for granting access to the web service. The type and encoding of the response are also configurable. The DataSelector property is very useful when the returned data needs to be filtered by specific criteria, but I’ll cover it in detail in the “Organizing the Data” section below.

Static Data Connections

In scenarios where the actual data is not available or is impractical to use due to a slow connection or metered access, the CSVDataSource and JSONDataSource components prove to be very effective. They provide a simple interface to data that can be stored in a file (local or remote) or inline in the report definition. As the names suggest, the input of these data sources is textual data, organized in comma-separated values or in JSON format. These components are great for quick proof-of-concept report designs and even high-load testing.

Our team uses them extensively in support communication where the users send us reports that we need to test without having access to the actual database. Given the report definition and a few minutes with Excel, we can produce a mock-up data set with thousands of records. Therefore I strongly suggest using these data sources in the earlier stages of the report design process instead of using real data from a production database server or a web service.

Business Objects Connections

If none of the listed data access approaches fits the current scenario, you can always resort to creating the data set programmatically and passing it to the report through the ObjectDataSource component. It accepts a class that implements the IEnumerable, IListSource or IDbDataAdapter interfaces, or a method that returns an instance of these interfaces, making it applicable to virtually any object collection. Configuring the ObjectDataSource is easy, since it needs only two arguments: the name of the collection class and the name of the method that returns the data. The corresponding wizard takes care of guiding the user through all the necessary steps, including the configuration of the parameters of the data retrieval method, if there are any. At runtime, when a data item like Table or Graph needs to obtain its data from the ObjectDataSource, the engine will instantiate the collection class and call the data retrieval method, providing values for its parameters.
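The runtime sequence (resolve the class by name, instantiate it, call the named method with parameter values) can be sketched in a few lines of Python. This is only an illustration of the idea and not the reporting engine's code: `Car`, `CarRepository`, `fetch_data` and the `known_types` lookup table are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, List


@dataclass
class Car:
    model: str
    year: int


class CarRepository:
    """Hypothetical business-object class with a data retrieval method."""

    def get_cars(self, min_year: int) -> List[Car]:
        cars = [Car("Roadster", 2018), Car("Sedan", 2020), Car("Wagon", 2021)]
        return [car for car in cars if car.year >= min_year]


def fetch_data(
    known_types: Dict[str, type],
    class_name: str,
    method_name: str,
    parameters: Dict[str, Any],
) -> Iterable[Any]:
    """Resolve the class and method by name, then call it with the parameters."""
    collection_class = known_types[class_name]   # look the class up by name
    instance = collection_class()                # instantiate it
    method = getattr(instance, method_name)      # find the retrieval method
    return method(**parameters)                  # pass the parameter values


rows = fetch_data(
    {"CarRepository": CarRepository},
    "CarRepository",
    "get_cars",
    {"min_year": 2020},
)
```

Since everything is resolved by name at runtime, a class that cannot be loaded only fails at this point, which is why the dependency requirements matter.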

Although very powerful, this component has some requirements that need to be considered before using it in a report:

The first one concerns the dependencies that the collection class has. Ideally the business objects would be declared in a separate assembly that needs to be registered in the application configuration file under the <AssemblyReferences> section. In case the business class assembly references other assemblies that are not part of the .NET Framework, these assemblies need to be copied to the application directory or registered in the GAC in order to be resolved by the runtime when it loads the business object classes. These problems are not that easy to resolve, because the .NET runtime does not provide enough information about what went wrong while trying to load the assembly. The tool that we use in these scenarios is Microsoft’s Assembly Binding Log Viewer, named Fuslogvw. As its description suggests, it logs the assembly bindings from the running .NET applications. Inspecting the bind failures allows us to determine which assemblies fail to load in a particular application, i.e. our reports project.

The second thing to consider while working with the ObjectDataSource component is related to the .NET architecture. By design, assemblies targeting the .NET Framework cannot reference assemblies that target .NET Core. So if your business objects reside in a .NET Core assembly, they cannot be referenced by a reports project that targets .NET Framework. In such a scenario there are two options: change the target framework of the reports project from .NET Framework to .NET Core, or change the target framework of the business objects assembly from .NET Core to .NET Standard 2.0. If for some reason neither option is feasible, the business objects should be extracted into another .NET Standard assembly that is referenced by both projects.

As you can see, our product offers a lot of options for connecting to a data source and fetching data from it. Choosing and configuring the report data source for your report might seem an easy task but it is an important factor that may affect the report performance and degrade the user experience of the reporting application.

Organizing the Data

Once we’ve established a connection to the database, we need to request the data and shape it in a way that will be most convenient to use in a report. The process of data organization is vital for both the report design process and report performance and can be divided into three parts.

Trimming

Regardless of how carefully we construct our database query, occasionally we may end up with a SqlDataSource that fetches a result set with 30 columns to a Table item which uses only 5 of them. The general recommendation applicable to all the data sources is “only what’s necessary,” i.e. data sources should retrieve the minimum of the data fields needed for the report, especially when the result set contains lots of records. Let’s illustrate it with an example: our report needs to show the actual price of the items we sold last year. The actual price equals the base price minus the discount. Our query might look like this:

Select base_price, discount, base_price - discount as actual_price from Sales

Assuming that the Money field type in MSSQL takes 8 bytes, for one million records in our Sales table we’ll have ~8MB of overhead from just this single column in our dataset. Of course, in a real-life scenario we’ll have traffic compression and other optimizations (and also 8MB is not 8 million bytes, right), but still this column is redundant. In this scenario I would use the SqlDataSource’s CalculatedFields property and write a simple expression that will subtract the prices for me.
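The arithmetic behind that estimate, together with the idea of computing the derived value after the data has arrived, can be checked with a short Python sketch. The sample rows are made up, and `with_actual_price` is a hypothetical stand-in for a calculated field expression, not the CalculatedFields API.

```python
BYTES_PER_MONEY_VALUE = 8     # size assumed in the text for a Money column
ROW_COUNT = 1_000_000

# Cost of transferring the redundant actual_price column.
overhead_bytes = BYTES_PER_MONEY_VALUE * ROW_COUNT
overhead_mib = overhead_bytes / (1024 * 1024)
print(f"{overhead_bytes:,} bytes = {overhead_mib:.2f} MiB")  # 8,000,000 bytes = 7.63 MiB

# Made-up rows as they would arrive without the redundant column.
rows = [
    {"base_price": 100.0, "discount": 15.0},
    {"base_price": 250.0, "discount": 0.0},
]


def with_actual_price(row: dict) -> dict:
    """Derive actual_price locally instead of fetching it from the database."""
    return {**row, "actual_price": row["base_price"] - row["discount"]}


report_rows = [with_actual_price(row) for row in rows]
```

The derived value costs one subtraction per row on the reporting side, while the query only has to return the two source columns.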

The same considerations are valid for the other data sources. For example, the ObjectDataSource uses reflection to iterate through the business objects and find the properties used in the report by their name. If the models we use have lots of properties, their traversal would take more time, resulting in poor performance. In this case it’s usually a good idea to have a mid-tier set of classes that contain only the properties used in the report.

Filtering

Every data item or group in the report can set rules for filtering its data before it’s processed. This is useful in a few scenarios, but usually the data can and should be filtered server-side. As explained before, the less data is fetched from the data source, the better the performance. Almost all data source components provide a way to filter their data before it’s sent to the data items. The recommended way to apply filtering to the data source is through report parameters.

The report parameters are expressions, evaluated before processing the report. Depending on their configuration, they might be displayed in the report viewer, providing a UI to select or enter a value. Regardless of their visibility, they must have a valid value prior to report processing. This value might be passed to the WHERE clause in the SqlDataSource’s query, used in the DataSelector’s JSONPath expression in WebServiceDataSource, or sent as a parameter to the ObjectDataSource’s data retrieval method. In any case they should be used to decrease the amount of data fetched from the data source when possible. In the case of the WebServiceDataSource component the DataSelector is applied client-side once the data is fetched from the server. Therefore, if the web service provides an endpoint that could return only the necessary data, it’s always better to use it rather than applying a DataSelector afterwards.
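The difference between filtering on the server and filtering after the fetch is easy to see with a small, self-contained example. The sketch below uses Python's built-in `sqlite3` module with a made-up `Sales` table; the variable `report_parameter_year` merely stands in for a report parameter value and is not part of any Telerik API.

```python
import sqlite3

connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE Sales (id INTEGER PRIMARY KEY, year INTEGER, amount REAL)"
)
connection.executemany(
    "INSERT INTO Sales (year, amount) VALUES (?, ?)",
    [(2019, 120.0), (2020, 80.0), (2020, 45.5), (2021, 300.0)],
)

report_parameter_year = 2020  # stands in for the report parameter value

# Client-side filtering: every row crosses the wire, most are thrown away.
all_rows = connection.execute(
    "SELECT year, amount FROM Sales ORDER BY id"
).fetchall()
client_filtered = [row for row in all_rows if row[0] == report_parameter_year]

# Server-side filtering: the parameter goes into the WHERE clause,
# so only the matching rows are returned.
server_filtered = connection.execute(
    "SELECT year, amount FROM Sales WHERE year = ? ORDER BY id",
    (report_parameter_year,),
).fetchall()

connection.close()
print(len(all_rows), len(server_filtered))  # 4 2
```

Both approaches end up with the same two rows, but only the second one avoids transferring the rows the report never shows.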

A nice example of the importance of filtering is a scenario with a client who used our product to create accounting reports. His report had two modes, detailed and summary, selected through a report parameter. In “detailed” mode it printed every record from the result set (hundreds of thousands of records), which would take a reasonable amount of time. In the “summary” mode the report was expected to print only a few rows with the summary figures. Our client used the same query for both modes and, understandably, was surprised that the performance in “summary” mode was not that good for just a few lines in the resulting document. We explained to him that the second scenario would save only the time needed to render the pages, but the data retrieval, processing and grouping are the same in both cases. In such cases the recommended solution would be to modify the query executed by the SqlDataSource, moving the data grouping to the database server. This can be done either through Bindings or by creating a new report with data source and layout specifically designed for “summary” mode.

Our client went with the solution using a SubReport item with a different report for each mode and was happy with the performance gain.
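The gain from moving the grouping to the database can be illustrated with a small Python sketch that uses the built-in `sqlite3` module. The `Ledger` table, its rows and the `fetch_rows` helper are made up for the illustration and do not come from the client's report.

```python
import sqlite3

connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE Ledger (account TEXT, amount REAL)")
connection.executemany(
    "INSERT INTO Ledger (account, amount) VALUES (?, ?)",
    [
        ("Travel", 100.0),
        ("Travel", 50.0),
        ("Office", 20.0),
        ("Office", 30.0),
        ("Office", 10.0),
    ],
)


def fetch_rows(mode: str) -> list:
    """Pick the query by report mode so summary mode never fetches details."""
    if mode == "summary":
        # The database groups the data and returns one row per account.
        query = (
            "SELECT account, SUM(amount) FROM Ledger "
            "GROUP BY account ORDER BY account"
        )
    else:
        # Detailed mode really needs every record.
        query = "SELECT account, amount FROM Ledger ORDER BY account, amount"
    return connection.execute(query).fetchall()


detailed = fetch_rows("detailed")
summary = fetch_rows("summary")
print(len(detailed), len(summary))  # 5 2
```

With hundreds of thousands of detail records the summary query still returns only a handful of rows, so both the transfer and the processing on the reporting side shrink accordingly.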

Ordering

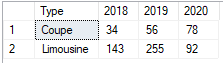

By “ordering” I do not mean sorting the data by its values, but customizing the layout of the columns and rows in the result set returned by the data source. In general, it should be as similar to the layout that will be used in the report as possible. But on the other hand, if the same data source will be reused by another data item, the same layout may be impractical. Consider having the following result set that represents the car sales per year:

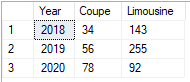

Such a layout makes perfect sense if we have to show it in a table, preserving the same look—the columns represent the years, the rows—the car types. But if we want to reuse the same dataset in a Graph item and show a line chart that has the year on its X axis and sales on its Y axis, it would be a real challenge. In this case we should reorganize the query so the layout would look like this:

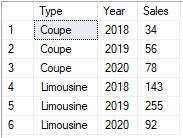

Now the data is nicely ordered and can be shown in Table and Graph items, but still doesn’t fit all the possible scenarios—for example, what if we expect to have more car types? In this scenario the most universal layout would be this one:

This data representation can be easily grouped and displayed in a Table or a Graph item, regardless of the car types and years. The only problem here is that the result set is now much bigger than the previous ones and would impact the performance for larger data sets. As you can see, there is no universal solution: a scenario that is well suited for one data item might be inapplicable for another. Ideally each data source should be configured specifically for its corresponding data item, but as a downside the data will not be reused between the items and this would also result in a performance drawback. Still, I’d recommend starting with a more generic data set layout and fine-tuning it at a later stage if necessary.
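As a rough illustration of the trade-off, the Python sketch below reshapes a wide result set (one column per year) into the generic long layout (one row per car type and year) and then groups it by year, as a Graph item would need. The original tables are not reproduced here, so the column names `car_type`, `year` and `sales` and all the figures are made up.

```python
# Wide layout: one row per car type, one column per year (made-up figures).
wide_rows = [
    {"car_type": "Sedan", "2019": 120, "2020": 135},
    {"car_type": "SUV", "2019": 90, "2020": 110},
]


def to_long(rows: list, key: str = "car_type") -> list:
    """Turn every year column into its own (car_type, year, sales) row."""
    long_rows = []
    for row in rows:
        for column, value in row.items():
            if column == key:
                continue
            long_rows.append({key: row[key], "year": int(column), "sales": value})
    return long_rows


long_rows = to_long(wide_rows)

# The long layout groups easily by any field, for example sales per year.
sales_per_year = {}
for row in long_rows:
    sales_per_year[row["year"]] = sales_per_year.get(row["year"], 0) + row["sales"]

print(len(wide_rows), len(long_rows))  # 2 4
print(sales_per_year)                  # {2019: 210, 2020: 245}
```

The long layout holds the same figures in twice as many rows here, and the ratio grows with every additional year, which is the size cost mentioned above.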

Conclusion

On these few pages I explained at length how the database connection mechanism works and what the correct ways to fetch the report data from various data sources are. Although the report authoring is aided by wizards, live preview items and context Ribbon tabs, the initial stage that configures the data sources is the base that provides for solid performance and an effortless design process. Given that the examples above are based on the problems some of our users have had while setting up the data retrieval in their reports, I hope that knowing the details about the process will help you select the option that provides the best performance in any scenario.

Ivan Hristov

Ivan Hristov has been a software developer in the Telerik Reporting division since 2013.

When not at work, he might be seen biking/skiing with his daughter, reading or discussing influential movies with friends. Feel free to reach out to him through LinkedIn.