The Promise of Model Context Protocol

There is a lot of excitement around Model Context Protocol (MCP)—let’s understand its value and explore potential solutions it enables.

It is the age of AI, and there is a huge opportunity for developers to infuse apps with solutions powered by generative AI and large/small language models. Modern AI is also an opportunity to streamline and automate developer workflows for better productivity. However, look beyond the shiny surface and cracks start to show with today’s generative AI: context and expertise matter.

The Model Context Protocol (MCP) promises standardized communications to provide AI models/agents with deep contextual knowledge and bolster confidence in performing tasks with customized tools. Let’s explore.

AI Model Shortcomings

Today’s generative AI is powered by large/small language models. These are extremely powerful systems capable of understanding human natural language to hold conversations and respond to prompts with answers from a seemingly unlimited wealth of knowledge.

Powered by unsupervised learning and deep neural networks, today’s AI models can be expensive to host, but they support huge numbers of parameters and process massive volumes of tokens every second. The popularity and sheer power of today’s AI models are a testament to how far AI has come.

However, despite all the smartness, AI models struggle with a few foundational problems that are baked in by design:

- AI model knowledge bases are timestamped. There was a moment in time when a given AI model ingested data crawled from the internet. Beyond that point, the AI model is not trained on anything new. Sure, there are techniques like Retrieval Augmented Generation (RAG) to ground AI models, but RAG can be expensive and hard to scale.

- AI models do not do anything. AI models are simply not designed to perform computations or carry out actions. They are powerful probabilistic pattern matching/recognition systems, but they are not meant to perform tasks. To really boost our productivity, we need AI to do concrete things for us, some through automation and others with human supervision.

AI Agents

The next big milestone in the evolution of AI is already here: say hello to AI agents. An AI agent is essentially a system that is capable of performing tasks in response to human prompts. Actions can be autonomous if allowed, or they can require human permission.

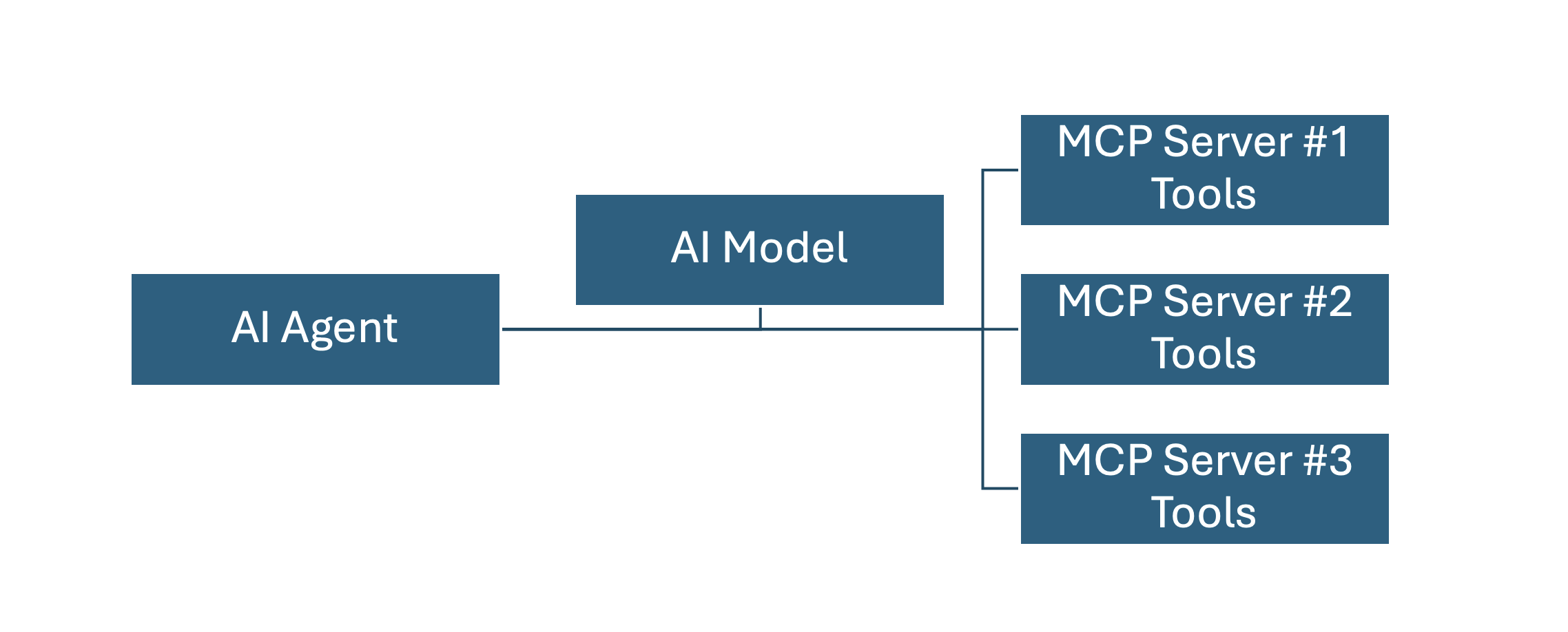

AI agents do leverage the natural language processing power and wealth of knowledge of AI large language models. But in addition to knowledge, AI agents have access to specialized tools to perform tasks. AI agents often act like a supervisor—tapping into AI models for knowledge, designing a workflow and leveraging available tools to carry out an action.
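The supervisor pattern described above can be sketched as a small loop. This is only an illustration under stated assumptions: `fake_model` is a hypothetical stand-in for a real language model, and `get_weather` is a made-up tool. Neither comes from any particular agent framework.

```python
from typing import Callable

# Hypothetical tool the agent is allowed to call (illustrative only).
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}

def fake_model(prompt: str, observations: list[str]) -> dict:
    """Stand-in for an LLM: requests a tool first, then answers from its result."""
    if not observations:
        return {"action": "tool", "name": "get_weather", "args": {"city": "Boston"}}
    return {"action": "final", "text": f"Forecast: {observations[-1]}"}

def run_agent(prompt: str, max_steps: int = 5) -> str:
    """Alternate between asking the model what to do and running the chosen tool."""
    observations: list[str] = []
    for _ in range(max_steps):
        decision = fake_model(prompt, observations)
        if decision["action"] == "final":
            return decision["text"]
        tool = TOOLS[decision["name"]]          # look up the requested tool
        observations.append(tool(**decision["args"]))  # feed the result back
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("What is the weather in Boston?"))
```

A real agent would replace `fake_model` with a call to a language model and would typically ask for human approval before running tools that have side effects.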

AI agentic workflows do change the game big time. No longer are we constrained by suggested responses; we can actually have AI do concrete things for us. However, context still matters, and for us to trust automation, tasks are best carried out by systems that are experts in their respective domains. There needs to be a way for disparate systems to speak a common language to provide context and tools to AI agents. The popular answer is MCP.

What’s MCP?

The Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to LLMs. Developers can think of it as a common language for information exchange between AI models/agents and the tools and data sources they rely on.

Developed by Anthropic, MCP aims to provide a standardized way to connect AI models to different data sources, tools and non-public information. The point is to provide deep contextual information/APIs/data to AI models/agents. MCP services also support robust authentication/authorization toward executing specific tasks on behalf of users.

While it is still early days, there is plenty of excitement in big tech around MCP. With a flexible yet stable specification, MCP can play a big role in standardizing information exchange between AI models and various data sources for grounded AI responses/tooling usage.

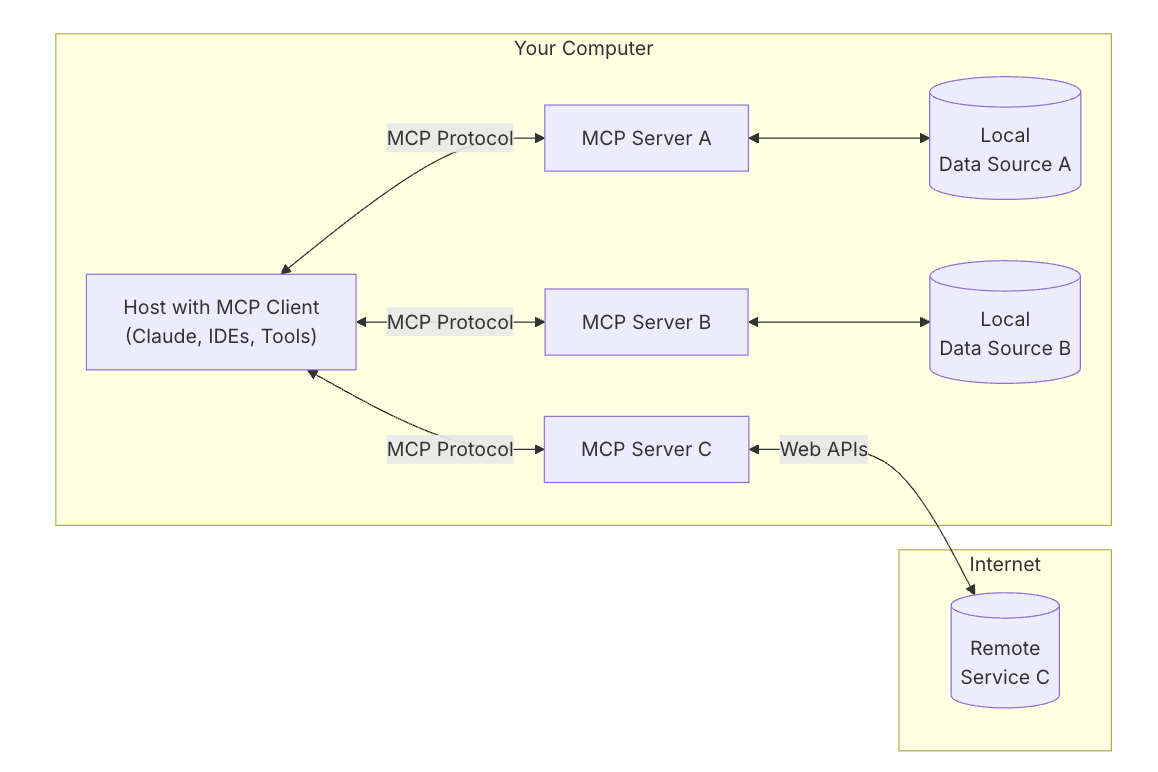

MCP follows a simple client-server architecture, where a host application can connect to multiple servers, each bringing its own tools with specific domain expertise. With MCP standardized protocols, developers have the opportunity to expose deeply contextual APIs, documentation, database records and any other type of customizable domain information, all surfaced through MCP servers for clients to consume/leverage.
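On the wire, MCP messages are JSON-RPC 2.0 objects, and tool discovery and invocation use the `tools/list` and `tools/call` methods. The sketch below only builds the request payloads to show their shape. The tool name `search_docs` and its `query` argument are hypothetical, and no transport or real server is involved.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each JSON-RPC request carries an id so the reply can be matched

def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object for the given method."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg

# Ask a server which tools it offers.
list_tools = make_request("tools/list")

# Invoke one tool by name; "search_docs" is a made-up example tool.
call_tool = make_request(
    "tools/call",
    {"name": "search_docs", "arguments": {"query": "grid paging"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice the official SDKs build and send these messages for you, so this level of detail matters mainly when debugging traffic between a client and a server.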

Image courtesy MCP Docs

Why MCP?

As we graduate to using more AI agentic workflows, it is easy to see how MCP can help get around two of the shortcomings of traditional AI models:

- With standardized protocols, AI agents do not need to depend on timestamped knowledge. Looking up pertinent information is as easy as a web search in the moment.

- With specialized tools available, AI agents can take concrete actions in response to user prompts. MCP provides the bridge to bring in domain knowledge and task know-how.

In MCP land, there are some common terms that are often thrown around:

- A host is an app that wants to leverage MCP for access to specialized data/tools. Examples—VS Code, Visual Studio, Claude Desktop, Cursor AI and other coding editors/IDEs.

- An MCP client is essentially the workhorse that communicates 1:1 with MCP Servers. Examples—GitHub Copilot, ChatGPT, Tabnine and more.

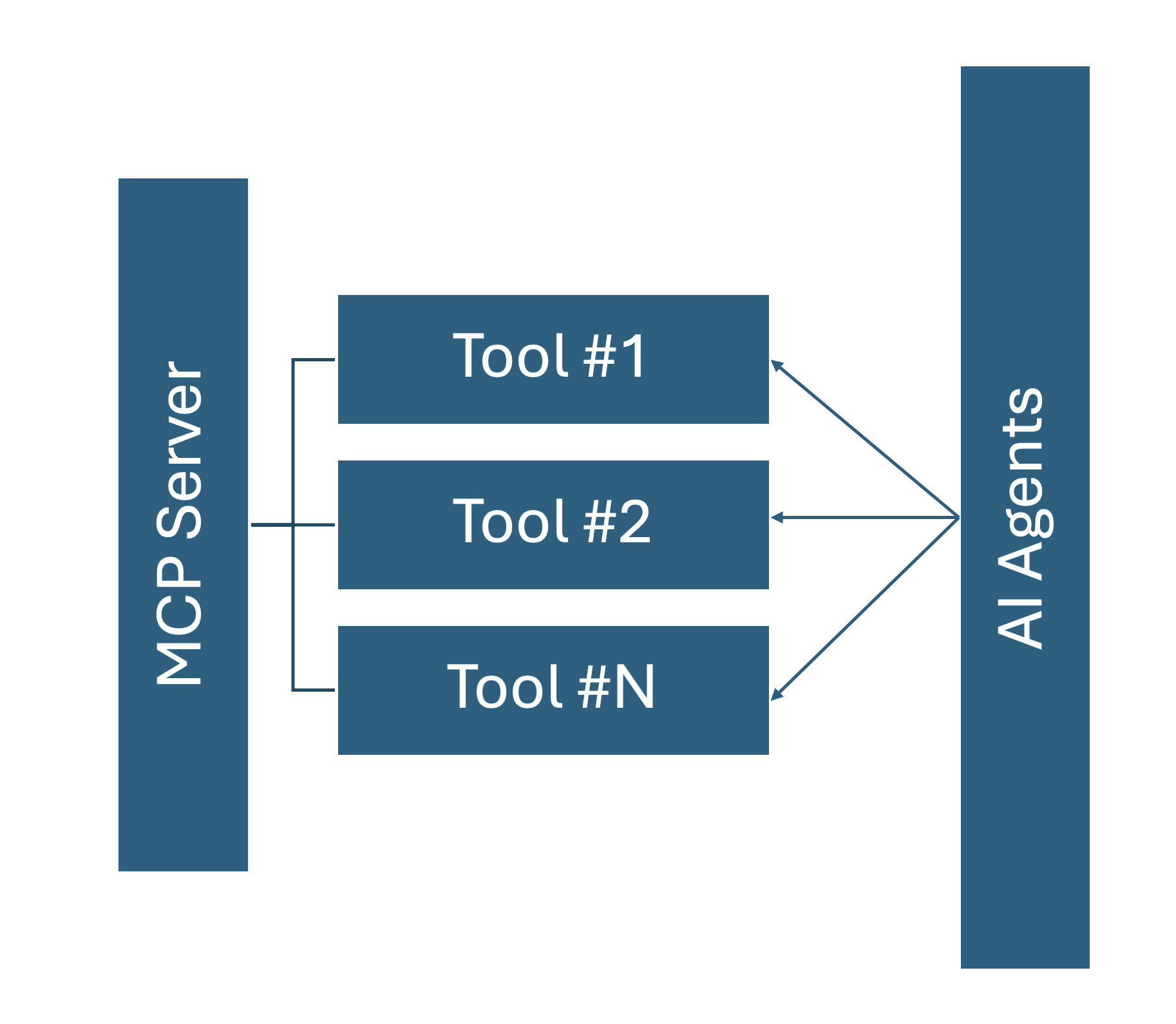

- An MCP server is a hosted program/endpoint that exposes specific capabilities through the standardized MCP communications.
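To make the three roles concrete, here is a toy in-memory model of them. It is a sketch under stated assumptions, not the MCP SDK: the `ToyServer`, `ToyClient` and `ToyHost` classes and the two example tools are invented for illustration, and only the method names `tools/list` and `tools/call` and the general result shapes mirror the protocol.

```python
class ToyServer:
    """In-memory stand-in for an MCP server exposing named tools."""
    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # tool name -> callable returning text

    def handle(self, method, params=None):
        if method == "tools/list":
            return {"tools": [{"name": n} for n in self._tools]}
        if method == "tools/call":
            text = self._tools[params["name"]](**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": text}]}
        raise ValueError(f"unsupported method: {method}")

class ToyClient:
    """Talks to exactly one server (the 1:1 relationship)."""
    def __init__(self, server):
        self._server = server

    def list_tools(self):
        return [t["name"] for t in self._server.handle("tools/list")["tools"]]

    def call_tool(self, name, **arguments):
        result = self._server.handle("tools/call", {"name": name, "arguments": arguments})
        return result["content"][0]["text"]

class ToyHost:
    """Creates one client per server and routes each tool call to its owner."""
    def __init__(self, servers):
        self._clients = [ToyClient(s) for s in servers]
        self._routes = {tool: c for c in self._clients for tool in c.list_tools()}

    def tools(self):
        return sorted(self._routes)

    def call(self, tool, **arguments):
        return self._routes[tool].call_tool(tool, **arguments)

# Two hypothetical servers, each bringing its own domain tool.
docs = ToyServer("docs", {"search_docs": lambda query: f"3 results for '{query}'"})
db = ToyServer("db", {"count_rows": lambda table: f"{table}: 42 rows"})
host = ToyHost([docs, db])

print(host.tools())
print(host.call("count_rows", table="orders"))
```

The point of the design is that the host never needs to know how a tool works. It only needs to know which client can reach the server that owns it.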

Not everyone will need to make an MCP host or client. The biggest benefit of MCP standardization is democratizing access to specialized tooling through MCP servers to AI agents.

Today’s AI agents have access to the natural language processing and knowledge repository of AI models, but they are also starting to have a wealth of MCP-powered tools at their disposal. Agentic workflows do not need built-in expertise in every contextual task. Instead, companies that provide specialized services can surface their tools through MCP for AI agents to invoke.

MCP Considerations

For developers looking to build MCP servers/clients, there is plenty of help—no need to reinvent the wheel.

- There are abstractions through SDKs for popular programming languages, like C#, Python, TypeScript, Java and Kotlin.

- Security is well thought out. MCP servers will often surface domain knowledge and specific tasks that need to identify users and grant them access. Standard authentication/authorization techniques apply to MCP, and access control is done securely with tokens/keys scoped to specified services.

- Tooling to work with MCP is well established. There is an MCP Inspector to try things out locally, as well as server logging techniques to monitor performance/errors.

- Popular developer tools like VS Code/Cursor/Claude Desktop have MCP clients built-in, making integration with MCP servers easy.
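As a rough illustration of the token-based access control mentioned in the list above, the sketch below checks a bearer token's scopes before a tool is allowed to run. Everything here is an assumption made for the example: the token values, the scope names and the `authorize` helper are hypothetical and are not part of the MCP specification or any SDK.

```python
import hmac

# Hypothetical token store: token -> scopes granted to the caller.
TOKEN_SCOPES = {
    "token-abc": {"docs:read"},
    "token-xyz": {"docs:read", "orders:write"},
}

# Hypothetical mapping of each tool to the scope it requires.
TOOL_REQUIRED_SCOPE = {"search_docs": "docs:read", "create_order": "orders:write"}

def scopes_for(token: str) -> set:
    """Return the scopes for a known token, or an empty set for unknown ones."""
    for known, scopes in TOKEN_SCOPES.items():
        # Constant-time comparison avoids leaking token contents through timing.
        if hmac.compare_digest(known.encode(), token.encode()):
            return scopes
    return set()

def authorize(authorization_header: str, tool: str) -> bool:
    """Allow the call only for a Bearer token holding the tool's required scope."""
    scheme, _, token = authorization_header.partition(" ")
    if scheme != "Bearer" or tool not in TOOL_REQUIRED_SCOPE:
        return False
    return TOOL_REQUIRED_SCOPE[tool] in scopes_for(token)

print(authorize("Bearer token-xyz", "create_order"))
print(authorize("Bearer token-abc", "create_order"))
```

A production server would validate tokens issued by a real identity provider rather than consult a hard-coded table, but the check-before-invoke flow would look similar.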

MCP Inspirations

As developers look to build more MCP servers/clients, a little inspiration never hurts—thankfully, there are lots of examples that showcase the capabilities and versatility of MCP.

- MCP references: The MCP website has long lists of open-source example servers and clients—these include reference implementations, third-party servers and community initiatives.

- A wonderful list of MCP servers/clients is mcp.so, a curated collection of high-quality MCP implementations.

- Another collection of awesome MCP servers is mcpservers.org—a reference collection of MCP implementations of various types.

MCP aims to standardize how applications provide context to AI models and tools to AI agents. The advent of MCP looks to give agentic workflows secure access to deeply contextual tasks and, in turn, make AI workflows more beneficial to everyone. More on building with MCP is coming up soon. Upwards and onwards. Cheers, developers!

Sam Basu

Sam Basu is a technologist, author, speaker, Microsoft MVP and gadget lover. With a long developer background, he has also worked as a Developer Advocacy Manager, advocating modern web/mobile/cloud development platforms on Microsoft/Telerik/Kendo UI technology stacks. His spare time calls for travel, fast cars, cricket and culinary adventures with the family.