AI Won't Replace Design Engineers, But It Will Change How We Work

The robots are coming for our jobs. Or are they?

Every few years, something comes along that promises to fundamentally change how we build products. This time feels different, though. Not because AI is going to eliminate design engineering roles, but because it is shifting what these roles focus on day to day.

AI

If you’re a designer, an engineer or a design engineer of any kind, AI tools have most likely become unavoidable in your work. Gemini can now edit images while maintaining consistent likenesses. Claude writes code, GitHub Copilot autocompletes entire functions, and tools like Google Stitch can transform sketches into UI components.

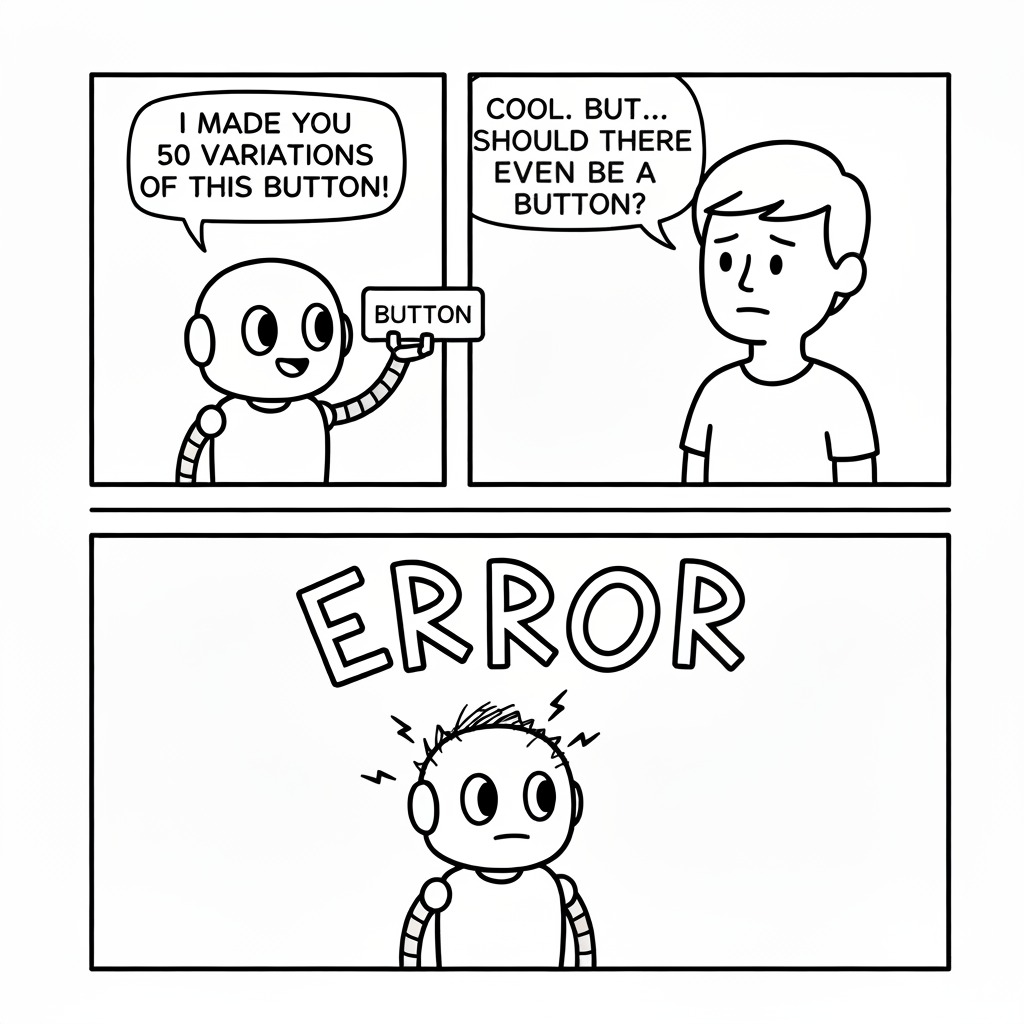

That said, a pattern has emerged after months of industry adoption: these tools excel at execution but struggle with intention. They can generate 17 variations of a button component but can’t determine whether that button should exist at all. They’ll produce well-formatted BEM class structures while missing that your CSS information architecture needs a complete rethink.

This gap between execution and strategic thinking is where design engineers can continue to create their value.

Comic generated with the help of AI (Gemini 2.5 Flash Image)

Design & Design Engineering

The nature of design engineering work is shifting. Certain tasks that once consumed hours can now be handled in seconds. Component scaffolding, design token conversion and responsive breakpoints can all be automated (to a certain extent).
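To make "design token conversion" concrete, here is a minimal sketch of the kind of mechanical task that is easy to hand off. It assumes a made-up nested token object and flattens it into CSS custom properties. The `TokenGroup` shape, the function names and the sample values are all hypothetical, not taken from any particular design system or tool.

```typescript
// Hypothetical token shape: nested groups whose leaves are strings or numbers.
type TokenValue = string | number | TokenGroup;
interface TokenGroup {
  [key: string]: TokenValue;
}

// Flatten nested tokens into CSS custom property declarations, joining the
// path with hyphens (color.brand.primary becomes --color-brand-primary).
function tokensToCssVars(tokens: TokenGroup, prefix: string[] = []): string[] {
  const lines: string[] = [];
  for (const [key, value] of Object.entries(tokens)) {
    const path = [...prefix, key];
    if (typeof value === "object") {
      // Nested group: recurse with the extended path.
      lines.push(...tokensToCssVars(value, path));
    } else {
      // Leaf value: emit one declaration.
      lines.push(`--${path.join("-")}: ${value};`);
    }
  }
  return lines;
}

// Wrap the declarations in a :root block so they apply document-wide.
function toRootBlock(tokens: TokenGroup): string {
  const body = tokensToCssVars(tokens)
    .map((line) => `  ${line}`)
    .join("\n");
  return `:root {\n${body}\n}`;
}

// Sample tokens, invented for illustration only.
const tokens: TokenGroup = {
  color: { brand: { primary: "#0055ff" }, text: "#111111" },
  space: { sm: "4px", md: "8px" },
};

console.log(toRootBlock(tokens));
```

The conversion itself is rote. Deciding that `color.brand.primary` is the right name, or that the system needs a brand tier at all, is the part that still takes judgment.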

Design engineers have always operated at the intersection of possibility and practicality. They’re the ones who know both what can be built and what should be built. AI changes the equation by handling more of the “can” so humans can focus on the “should.”

Take prototyping. What used to be a multi-day process of translating designs into functional code can now happen in real time during a meeting. You can test five different approaches in an afternoon by dropping a rough sketch into a tool like v0.app and getting working UI back in seconds.

However, someone still needs to know which five approaches are worth testing. Someone needs to understand the user journey well enough to spot when the AI-generated solution completely misses the point.

As AI tools progress, the design engineer’s role becomes less about pixel-perfect implementation and more about systems thinking. Less about writing CSS and more about understanding when that fancy animation will break the experience on older devices. Less about cranking out components and more about knowing which components will actually scale across the product.

The Path Forward

The good news is the core purpose behind design engineering remains unchanged. Someone still needs to bridge the gap between what designers envision and what engineers build. That translation layer, that understanding of both worlds, can’t be automated because it’s fundamentally about human communication and context.

The robots aren’t taking our jobs. They’re taking the repetitive parts we never loved anyway. What remains is the challenging, creative, strategic work that made us choose this path in the first place.

In a world where anyone can generate UI in seconds, the person who knows which UI to generate becomes invaluable. That’s the design engineer’s role now, and it’s only becoming more critical.

Hassan Djirdeh

Hassan is a senior frontend engineer who has helped build large production applications at scale at organizations like DoorDash, Instacart and Shopify. He is also a published author and course instructor who has helped thousands of students learn in-depth frontend engineering skills like React, Vue, TypeScript, and GraphQL.