Speed Up Your Angular Application with Code Splitting


Load speed is critical to the success of our apps. Learn how code splitting works and how it improves load time.

Code splitting is a low-hanging fruit when it comes to improving the load speed of our web applications.

(Photo credit: Maksym Diachenko)

Instead of sending one big bundle with the entire application’s JavaScript to the user when they visit our site, we split the bundle into multiple smaller bundles and only send the code for the initial route.

By removing code that is not necessary for the critical rendering path, we make our application load (download, render and become interactive) faster.

Why do we want our application to load fast?

User Experience

It is a truth universally acknowledged that a successful site must have a good user experience.

Many aspects contribute to a site’s user experience: the site’s load performance, how easy it is for the user to find what they’re looking for, whether the site is responsive, easy to use, accessible and attractive.

Studies show that mobile users value fast page load the most. What does a fast page load mean?

It means that the page is rendered (the pixels are painted on the screen) quickly and it is responsive to user interactions (users can click on buttons, select options).

When we visit a site, it doesn’t feel great if we have to wait for the content to be displayed. It doesn’t feel great either when we click on a link or button that doesn’t seem to respond.

In fact, waiting feels really stressful. We have to keep calm, take in deep breaths and meditate so we don’t start rage-clicking the non-responsive button.

The initial page load speed is especially critical as users are likely to abandon a site if the content takes too long to display or if the page takes too long to become interactive.

Please note that page load performance includes load speed as well as layout stability (measured by Cumulative Layout Shift, or CLS). This article focuses on page load speed; however, I heartily recommend watching Optimize for Core Web Vitals by Addy Osmani to learn what causes CLS and how to reduce it.

How Fast Should a Page Load?

So, what is considered to be a fast page load time?

I love this tweet from Monica, a senior engineer at Google. She says, “If you wouldn’t make eye contact with a stranger for the time it takes your web app to first paint, it is too slow.”

We can further quantify the initial load speed with the user-centric metrics provided by Google’s Core Web Vitals.

Page load speed is measured in two sets of metrics:

1. The First Set Looks at Content Load Speed

First Contentful Paint (FCP) measures when the first text content or image is displayed on the screen.

Largest Contentful Paint (LCP) measures when the main content of the page (the largest image or text) is visible to the users.

LCP is a newer metric used to estimate when the page becomes useful for the user. It replaces First Meaningful Paint (FMP). You can watch Investigating LCP, a fun and informative talk by Paul Irish, to find out more.

Rendering content fast is extremely important as the user can start engaging with the page. It creates a good first impression and perceived performance.

However, what matters even more in an interactive web application is being able to interact with the application fast.

2. So the Second Set of Metrics Measures Page Responsiveness

First Input Delay (FID), Time to Interactive (TTI) and Total Blocking Time (TBT) measure how quickly and smoothly the application responds to user interactions.

The table below gives a summary of the times to aim for on average mobile devices and 3G networks. Please refer to web.dev/vitals for detailed explanations and any updates.

| Metric | Aim |
|---|---|
| First Contentful Paint | <= 1 s |
| Largest Contentful Paint | <= 2.5 s |
| First Input Delay | < 100 ms |
| Time To Interactive | < 5 s |
| Total Blocking Time | < 300 ms |
| Cumulative Layout Shift | < 0.1 |

To put these times in context, studies show that when waiting for a response to user interactions:

- Less than 200ms feels like an instant reaction.

- Less than 1s still feels like the page is performing smoothly.

- Less than 5s feels like it is still part of user flow.

- More than 8s makes users lose attention and they are likely to abandon the task.
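The targets in the table above can be written down as a small lookup, which makes it easy to check a measured value against them. This is only an illustrative sketch: the `Metric` type, the `targets` object and the `meetsTarget` helper are names made up for this example, and the limits are simply copied from the table.

```typescript
// Targets copied from the table above.
// Times are in milliseconds; Cumulative Layout Shift is a unitless score.
type Metric = 'FCP' | 'LCP' | 'FID' | 'TTI' | 'TBT' | 'CLS';

interface Target {
  limit: number;
  inclusive: boolean; // true for "<=", false for "<"
}

const targets: Record<Metric, Target> = {
  FCP: { limit: 1000, inclusive: true },
  LCP: { limit: 2500, inclusive: true },
  FID: { limit: 100, inclusive: false },
  TTI: { limit: 5000, inclusive: false },
  TBT: { limit: 300, inclusive: false },
  CLS: { limit: 0.1, inclusive: false },
};

// Returns true when a measured value is within the target for that metric.
function meetsTarget(metric: Metric, value: number): boolean {
  const { limit, inclusive } = targets[metric];
  return inclusive ? value <= limit : value < limit;
}
```

For example, `meetsTarget('LCP', 2500)` is true because the LCP target is inclusive, while `meetsTarget('FID', 100)` is false because the FID target is a strict "less than".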

What Factors Affect Page Load Time?

We have seen that a fast page load provides a better user experience and that we can measure the load speed with user-centric metrics. We know to aim for a Largest Contentful Paint of less than 2.5s and a Time To Interactive of less than 5s.

It still raises the question: What are the factors that cause delays in page load?

When a user visits our site, the browser does quite a lot behind the scenes to load the page and make it interactive:

- Fetch the HTML document for the site

- Load the resources linked in the HTML (styles, images, web fonts and JS)

- Carry out the critical rendering path to render the content, and execute the JavaScript (which may modify content and styles and add interactivity to the page)

Let us look at what is involved in some of these steps in a bit more detail so we can understand how they can affect the page load time.

1. Network Latency

When the user enters a URL in the browser address bar, again the browser does quite a bit behind the scenes:

- Queries the DNS server to look up the IP address of the domain

- Does a three-way handshake to set up a TCP connection with the server

- Does further TLS negotiations to ensure the connection is secure

- Sends an HTTP request to the server

- Waits for the server to respond with the HTML document

Network latency is the time from when the user navigates to a site to when the browser receives the HTML for the page.

Of course, the browser uses the cache to store information so the revisits are quicker. If a service worker is registered for a domain, the browser activates the service worker which then acts as a network proxy and decides whether to load the data from cache or request it from the server.

We can measure the network latency by Round Trip Time (RTT) or Time to First Byte (TTFB).
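As a rough sketch of how TTFB can be derived, the browser's Navigation Timing entry exposes `startTime` and `responseStart` timestamps, and the difference between them approximates the time to first byte. The `NavigationTimingLike` interface and the `timeToFirstByte` helper below are names invented for this example; only the two timestamp fields mirror the real `PerformanceNavigationTiming` entry.

```typescript
// Minimal shape of the fields we need from a PerformanceNavigationTiming entry.
interface NavigationTimingLike {
  startTime: number;     // when the navigation started (ms)
  responseStart: number; // when the first byte of the response arrived (ms)
}

// Time to First Byte: how long the browser waited before the response began.
function timeToFirstByte(entry: NavigationTimingLike): number {
  return entry.responseStart - entry.startTime;
}

// In a browser, the entry would come from the Performance API:
// const [nav] = performance.getEntriesByType('navigation') as PerformanceNavigationTiming[];
// console.log(timeToFirstByte(nav));
```

Taking a structural type rather than the browser entry itself keeps the helper easy to try out with plain objects outside the browser.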

Network latency affects page load time because the browser cannot start rendering until it has the HTML document.

2. Network Connectivity

There is a huge variance in network connectivity. 4G networks in different countries have different speeds.

Even though we have 4G and 5G networks now, according to statistics, a significant percentage of users are still on 3G and 2G networks.

Besides, many other factors may affect network speed even if the user is on a fast network.

Transferring large files over a slow network connection takes a long time and delays the page load speed.

What should we do? Send fewer bytes over the network and send only what is needed for the current page (not the entire application).
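A back-of-the-envelope calculation shows why fewer bytes matter. The bundle sizes and the 1.6 Mbps link speed below are hypothetical numbers chosen for illustration, and the estimate deliberately ignores latency, compression and connection setup.

```typescript
// Naive transfer time: bytes converted to bits, divided by link throughput.
function downloadSeconds(sizeInBytes: number, bitsPerSecond: number): number {
  return (sizeInBytes * 8) / bitsPerSecond;
}

// Assumed values, not measurements.
const hypotheticalLink = 1600000; // 1.6 Mbps, a slow mobile connection
const wholeApp = downloadSeconds(1000000, hypotheticalLink);      // 5 seconds
const firstRouteOnly = downloadSeconds(200000, hypotheticalLink); // 1 second

console.log({ wholeApp, firstRouteOnly });
```

Under these assumptions, sending only the code for the first route cuts the transfer from five seconds to one.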

3. Varying User Devices

Another factor affecting page load speed is the CPU strength of a device.

The JavaScript in our application is executed on the CPU of the user’s device. It takes longer to execute JavaScript on median and low-end mobile devices with slower CPUs than it does on high-end mobile devices with fast/multi-core CPUs.

It is really important for the performance of our application that we don’t send unoptimized JavaScript that takes too long to execute.

4. Main Thread Workload

“The Browser’s Renderer Process is responsible for turning a web application’s HTML, CSS and JS code into the pages that we can see and interact with.” — Inside look at a modern web browser

It is the main thread that does most of the work. It:

- Renders the page content

- Executes the JavaScript

- Responds to user interactions

As we can imagine, while the main thread is busy doing one task, the other tasks are delayed. For instance, while the main thread is busy executing a script, it cannot respond to user interactions.

It is really important that we don’t tie up the main thread with JavaScript that takes too long to execute.

5. Cost of JavaScript

If you’re like me, you love writing JavaScript code. We need JavaScript to make our applications interactive and dynamic.

However, JavaScript is an expensive resource. The browser needs to download, parse, compile and execute the JavaScript.

In the past, parsing and compiling JavaScript added to the cost of processing JavaScript. However, as Addy Osmani explains in his article, The Cost of JavaScript in 2019, browsers have become faster at parsing and compiling JavaScript.

Now, the cost of JavaScript consists of the download and execution time:

- Downloading large JavaScript files takes a long time, especially on slow network connections.

- Executing large JavaScript files uses more CPU. This especially affects users on median and lower-end mobile devices.

What can we do to provide a better load speed across all network connections and all devices?

Network latency, network connection and user devices are all external factors that are not in a frontend developer’s control. However, what we do have control over is the JavaScript.

Here’s what we can do:

Improve the execution time of our JavaScript

Chrome DevTools refers to a script that takes longer than 50 milliseconds to run as a long task. Long tasks delay the main thread from responding to user interactions, hindering the interactivity of the page. We can use DevTools to identify long tasks and optimize.
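The 50-millisecond threshold also ties back to Total Blocking Time: each long task contributes the part of its duration beyond 50 ms. Here is a minimal sketch, assuming we already have a list of main-thread task durations observed between First Contentful Paint and Time to Interactive (the `blockingTime` helper and the sample durations are made up for this example).

```typescript
const LONG_TASK_THRESHOLD_MS = 50;

// Sums the "blocking" portion (anything beyond 50 ms) of every long task.
function blockingTime(taskDurationsMs: number[]): number {
  return taskDurationsMs
    .filter((duration) => duration > LONG_TASK_THRESHOLD_MS)
    .reduce((total, duration) => total + (duration - LONG_TASK_THRESHOLD_MS), 0);
}

// Hypothetical task durations in milliseconds.
// Only the 120 ms and 250 ms tasks are long tasks: (120 - 50) + (250 - 50) = 270.
console.log(blockingTime([30, 120, 50, 250])); // 270
```

Splitting one 250 ms task into smaller tasks of 50 ms or less would bring its contribution down to zero, which is why breaking up long-running scripts helps responsiveness.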

Reduce the size of the JavaScript bundles

Angular CLI already takes care of tree shaking, minification, uglification and differential loading (less JavaScript is shipped for modern browsers) for us.

What we can do is use code splitting to split our application code into smaller bundles.

Let us look at code splitting in more detail next.

Code Splitting

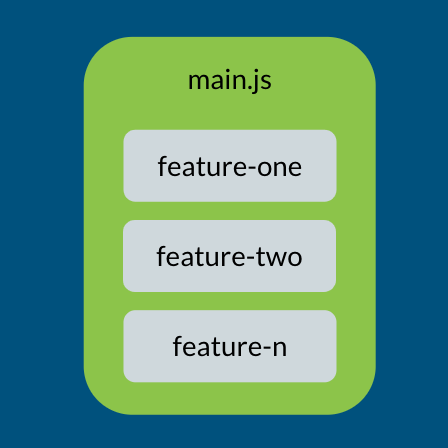

Code splitting lets us reduce the size of our application bundle (main.js) without sacrificing the features in our application. It does this simply by splitting the application’s JavaScript bundle into smaller bundles.

Bundling an Angular Application

The Angular CLI uses webpack as the bundling tool. Angular takes care of the webpack configuration for us. The configuration lets webpack know what bundles are needed to bootstrap an Angular application.

In a production build for an Angular application, webpack creates runtime.js, polyfills.js and main.js bundles.

Webpack includes the modules that we import statically (using the import statement at the top of our JS modules) in the main application bundle (main.js). By default, the entire application code is included in main.js.

main.js is a critical resource, meaning that it modifies the DOM and CSSOM and, therefore, it affects rendering. To make sure our application is loaded fast (LCP < 2.5s and TTI < 5s), main.js should only include code that is needed for the application’s first page.

We can tell webpack to split the application code into separate bundles by dynamically importing the modules that don’t need to be included in the main.js bundle.

webpack creates separate bundles for modules that are dynamically loaded (using the dynamic import() syntax).

The main.js bundle only includes code for the application landing page. The feature modules are split into separate bundles.

Note: It is important not to statically import the dynamically loaded modules as well, otherwise they will end up in the main.js bundle.

Eager Loading

As part of the bundling, webpack adds <script> tags for the JavaScript bundles needed to bootstrap our Angular application in the application’s HTML document (index.html).

These bundles are eagerly loaded, which means the browser will download and process these resources when it receives the HTML document.

    <head>
      <script src="runtime.js" defer></script>
      <script src="polyfills.js" defer></script>
      <script src="main.js" defer></script>
    </head>
    <body>
      <app-root></app-root>
    </body>

Set up Code Splitting in Angular

The modular architecture used to build Angular applications lends itself nicely to code splitting. We break our application into features and the features into components.

Components are self-contained building blocks that contain their HTML, CSS and JavaScript. Their dependencies are injected, and they define the interface for interacting with other components.

Angular Modules are used to organize the components (and directives, etc.) in the features and define what is shared with other modules. We use the Angular Router to handle navigations to our feature pages.

Code splitting can be done at component level or route level. In this article we will look at route-level code splitting.

The Angular CLI makes it really easy to set up route-level code splitting. We simply use the ng command to generate a module specifying the module name, route path and the parent module. For example:

ng generate module docs --route docs --module app

And, voila! The Angular CLI generates the module, a component, and the route configurations for us.

Of particular interest is the route configuration. The CLI adds a route in the route configuration for us. This is where the magic happens 😉.

    // Route Configuration
    const routes: Routes = [
      {
        path: 'docs',
        loadChildren: () => import('./docs/docs.module')
          .then(m => m.DocsModule)
      }
    ];

How does it work?

Lazy Loading

The route configuration is an array of Route objects. The loadChildren property of the Route object indicates to the Router that we want to dynamically load the route’s bundle at runtime.

By default, the Angular Router loads the bundle when the user first navigates to the route. This is called asynchronous, dynamic, on-demand or lazy loading.

The actual code splitting is done by webpack. The import() function tells webpack to split the requested module and its children into a separate bundle.

For our example route configuration above, webpack will create a separate bundle for DocsModule named something like: docs.module.js.
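To make the on-demand behaviour concrete, here is a framework-free model of a lazy route. It is not how the Angular Router is implemented; `createLazyRoute`, `fakeDocsBundle` and `navigateToDocs` are hypothetical names, and the fake loader stands in for `() => import('./docs/docs.module')` so that the sketch runs without a real bundle.

```typescript
type Loader<T> = () => Promise<T>;

// Wraps a loader so the bundle is requested on first use and reused afterwards.
function createLazyRoute<T>(loadChildren: Loader<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = loadChildren(); // the first navigation triggers the download
    }
    return pending; // later navigations reuse the same promise
  };
}

// Hypothetical stand-in for a dynamic import; counts how often it is called.
let downloads = 0;
const fakeDocsBundle: Loader<{ name: string }> = () => {
  downloads += 1;
  return Promise.resolve({ name: 'DocsModule' });
};

const navigateToDocs = createLazyRoute(fakeDocsBundle);
```

Nothing is requested until `navigateToDocs()` is called for the first time, and calling it again does not trigger a second download. That is the essence of lazy loading: the cost of the docs bundle is paid only by users who actually visit the docs route.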

Benefits of Code Splitting

Instead of including all the application’s JavaScript in one large bundle, code splitting lets us split our application bundle into smaller bundles. This has many benefits:

Application loads faster ⏱. The browser cannot render our application until the critical resources have downloaded. With code splitting we can make sure that our initial application bundle (main.js) only has code for the first page. The result is a small main.js that is faster to download (than a large bundle with all the application code in it). So our application is rendered faster and becomes interactive faster even on slower network connections.

Easier to optimize for execution time 🏃🏽‍♀️. It is easier to identify which bundles take too long to execute. They are shown as long tasks in Chrome DevTools, so we know which bit of code to investigate and optimize.

Does not waste users’ data 💰. Many users have limited mobile data plans. We don’t want to make our users download a large bundle that uses up their data, when it is quite likely that they only want to use part of the application. With code splitting, users only download JavaScript for the pages they visit and thus only pay for what they actually use.

Better for caching. When we change the code in one bundle, the browser will only invalidate and reload that bundle 🎁. The other bundles that don’t have updates don’t have to be reloaded, thus avoiding the network request and related latency and download costs.

What’s Next

Code splitting improves our application’s initial load speed, but we don’t want to stop there. We need to look into preloading strategies to preload the route bundles to make sure the navigations are fast too.

Use Chrome DevTools and Lighthouse to measure performance. If needed, look into inlining the critical CSS (also known as above-the-fold CSS) of your application and deferring the load of non-critical styles.

Look into optimizing images.

Use source map explorer to understand what is in your JavaScript bundles.

If you’re wondering about component-level code splitting in Angular, I recommend watching Brandon Roberts’ talk on Revising a Reactive Router with Ivy.

Conclusion

In order to provide a good user experience, it is important that our web application renders fast and becomes responsive to user interactions fast.

Google’s Core Web Vitals provide us with user-centric metrics to measure our application’s load performance. Best practice is to aim for a Largest Contentful Paint of less than 2.5 seconds and a Time to Interactive of less than 5 seconds.

Code splitting is one of the effective techniques that lets us split our application’s JavaScript bundle into smaller bundles. The initial application bundle only contains the critical JavaScript necessary for the main page, improving our application load speed.

It is super easy to set up route-level code splitting with the Angular CLI: Simply run the command to generate a lazy loaded module. Webpack splits the lazy loaded modules into separate bundles and Angular takes care of the webpack setup for us!

Ashnita Bali

Ashnita is a frontend web developer who loves JavaScript and Angular. She is an organizer at GDGReading, a WomenTechmakers Ambassador and a mentor at freeCodeCampReading. Ashnita is passionate about learning and thinks that writing and sharing ideas are great ways of learning. Besides coding, she loves the outdoors and nature.