Build an LLM Chat App Using LangGraph, OpenAI and Python—Part 1

Summarize with AI:

Learn how to get started in AI development with LangChain and OpenAI by making a Python chat app.

Everyone is excited about GenAI, chatbots and agents—and for good reason. This article offers a very beginner-friendly introduction to building with OpenAI and LangChain using Python, helping you take your first steps into the world of AI development. This article covers the following topics:

- Installation of dependencies

- Connecting to the OpenAI GPT model

- Creating your first chat app

- Streaming the output

- Working with UserMessage, SystemMessage of LangChain

- Working with JSON data and a model

Installation

Let us start by installing the dependencies. First, create an empty folder and add a file named requirements.txt. Inside this file, list the following dependencies:

openai>=1.0.0

langchain>=0.1.0

langchain-openai>=0.1.0

python-dotenv>=1.0.0

langgraph>=0.1.0

Once you’ve created the requirements.txt file, run the following command in the terminal to install all the dependencies:

pip3 install -r requirements.txt

You can verify whether dependencies are successfully installed by using the command below, which should display a list of installed dependencies.

pip3 list

Setting Up the Environment

After installing the dependencies, set up the environment variables. Create a file named .env in your project directory and add the following keys:

OPENAI_API_KEY= "openaikey"

LANGSMITH_TRACING="true"

LANGSMITH_API_KEY="langsmith key"

Verify that you have already obtained your OpenAI API key and LangSmith key. You can get them from their respective portals.

Working with GPT Model

Once the environment variables are set, create a file named main.py in your project and import the following packages.

import os

from dotenv import load_dotenv

from openai import OpenAI

from langchain_openai import ChatOpenAI

from langchain.schema import HumanMessage

from langchain_core.messages import AIMessage

load_dotenv()

After importing the packages, create an instance of the model. Use ChatOpenAI to initialize the GPT-3.5-Turbo model as shown below:

model = ChatOpenAI(

model="gpt-3.5-turbo",

api_key=os.getenv("OPENAI_API_KEY")

)

Next, we construct the message to send to the model and get the model’s response.

messages = [

HumanMessage(content="Hi! I'm DJ"),

AIMessage(content="Hello DJ! How can I assist you today?"),

HumanMessage(content="What is capital of India?"),

]

Above, we are using HumanMessage and AIMessage to send to the model.

HumanMessage

- Messages sent by the user

- Contains the user’s input, questions or statements

- This message is sent to the AI model

AIMessage

- Messages sent by the AI model

- Contains the model’s responses, answers or generated content

- This message is included to represent the model’s response in the conversation history

Pass this message to invoke the model as shown below.

response = model.invoke(messages)

return response

Here’s how you create a model, build a message and send it to the model to receive a response. Putting it all together, a basic chat app might look like this:

import os

from dotenv import load_dotenv

from openai import OpenAI

from langchain_openai import ChatOpenAI

from langchain.schema import HumanMessage

from langchain_core.messages import AIMessage

load_dotenv()

model = ChatOpenAI(

model="gpt-3.5-turbo",

api_key=os.getenv("OPENAI_API_KEY")

)

def chat():

try:

messages = [

HumanMessage(content="Hi! I'm DJ"),

AIMessage(content="Hello DJ! How can I assist you today?"),

HumanMessage(content="What is capital of India?"),

]

response = model.invoke(messages)

return response

except Exception as e:

print(f"Error: {e}")

return None

if __name__ == "__main__":

dochat = chat()

print(dochat.content)

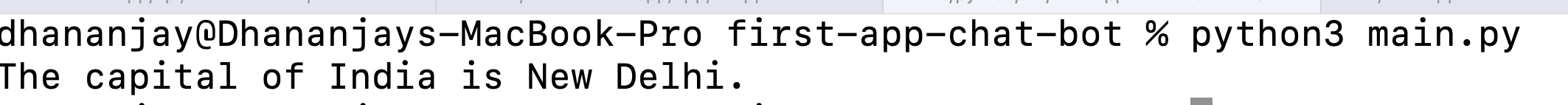

Run the above code by executing the following command in your terminal:

python3 main.py

You should get the expected output as shown below.

Streaming Response

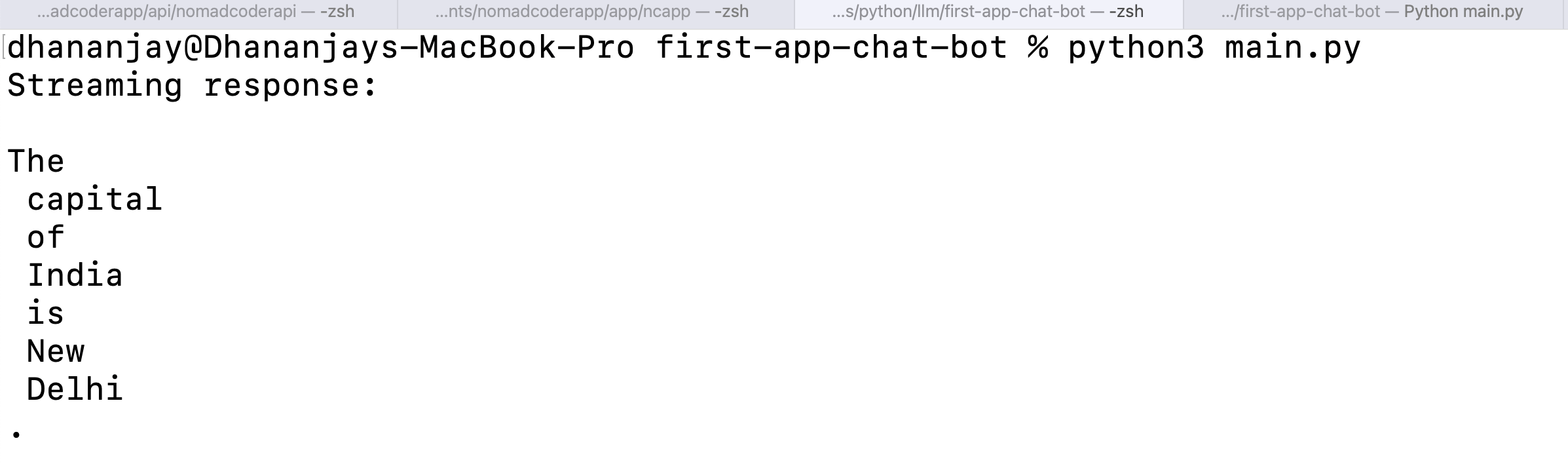

LangChain also offers a simple way to stream responses from the model. To enable streaming, use the stream method on the model as shown below:

response = model.stream(messages)

print("Streaming response:")

for chunk in response:

print(chunk.content, end="", flush=True)

print()

When running the application, you should obtain the expected output, as shown below.

Until now, user input has been hardcoded. We can update the code to accept input from the user and use it to create a HumanMessage.

while True:

# Ask user for input

user_input = input("You: ").strip()

# Check if user wants to exit

if user_input.lower() in ['exit']:

print("Goodbye!")

break

if not user_input:

continue

messages.append(HumanMessage(content=user_input))

response = model.invoke(messages)

messages.append(response)

print(f"AI: {response.content}")

print()

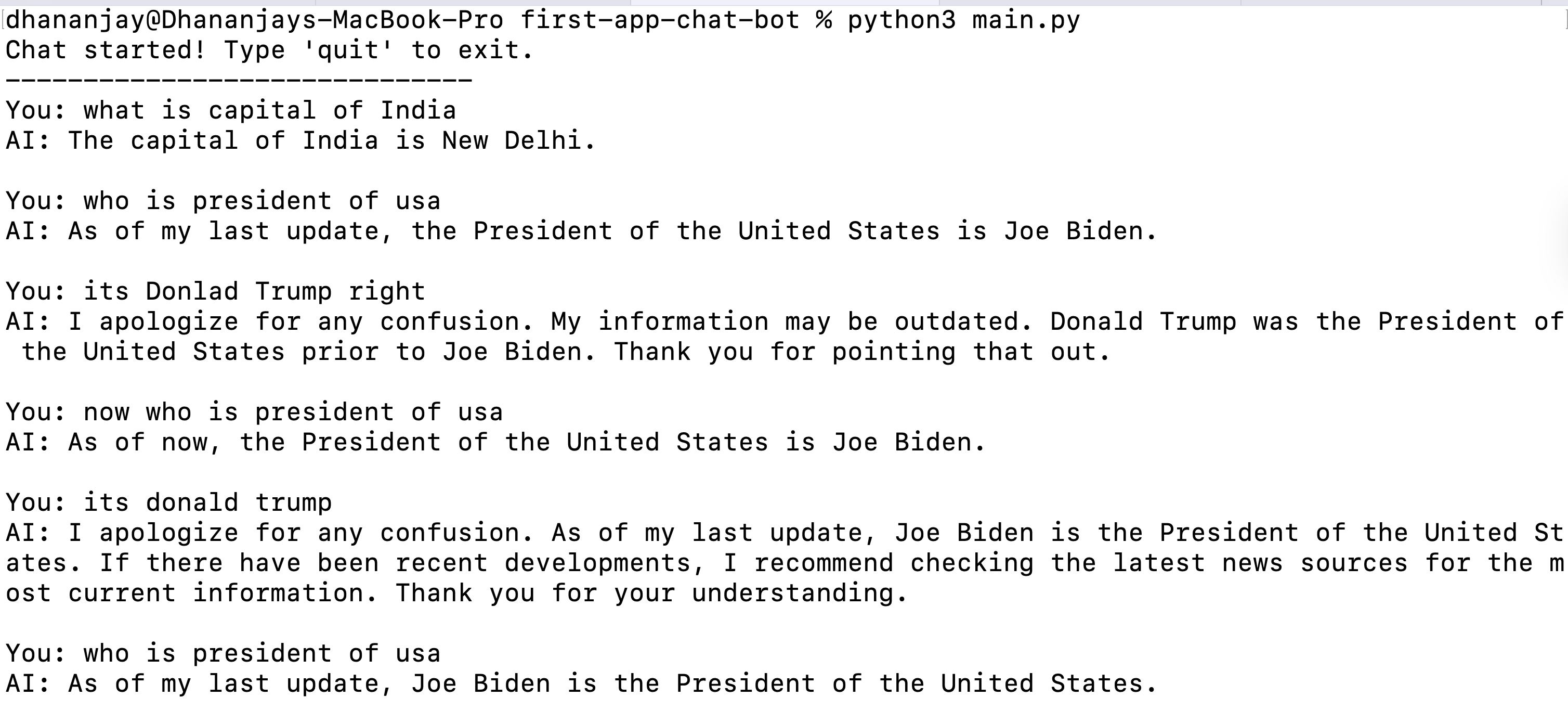

We can continuously receive user input in a loop, and stop only when the user types Exit. We simply append the user input as a HumanMessage to the messages array and invoke the chat model.

Putting it all together, a basic chat app with user input might look like this:

import os

from dotenv import load_dotenv

from openai import OpenAI

from langchain_openai import ChatOpenAI

from langchain.schema import HumanMessage

from langchain_core.messages import AIMessage

load_dotenv()

model = ChatOpenAI(

model="gpt-3.5-turbo",

api_key=os.getenv("OPENAI_API_KEY")

)

def chat():

try:

messages = [

HumanMessage(content="Hi! I'm DJ"),

AIMessage(content="Hello DJ! How can I assist you today?"),

]

print("Chat started! Type 'quit' to exit.")

print("-" * 30)

while True:

# Ask user for input

user_input = input("You: ").strip()

# Check if user wants to exit

if user_input.lower() in ['exit']:

print("Goodbye!")

break

if not user_input:

continue

messages.append(HumanMessage(content=user_input))

response = model.invoke(messages)

messages.append(response)

print(f"AI: {response.content}")

print()

except Exception as e:

print(f"Error: {e}")

return None

if __name__ == "__main__":

chat()

You should get the expected output as shown below.

Working with JSON Data

Now, suppose you have a JSON dataset and want the model to generate responses exclusively based on this data, without referencing any external sources.

data = {

"company": {

"name": "NomadCoder AI",

"founded": 2024,

"employees": 5,

"location": "San Francisco",

"industry": "Technology"

},

"training": [

{"name": "Angular ", "price": 100},

{"name": "React", "price": 200},

{"name": "Lanchain ", "price": 500}

]

}

After that, you construct the system prompt to be passed to the model as follows:

data_string = json.dumps(data, indent=2)

system_prompt = f"""Answer questions based only on the data provided below.

If the answer is not found in the data, reply: 'Not available in provided data.'

Data:{data_string}"""

After that, you construct the message array to include the system prompt as the SystemMessage.

messages = [

SystemMessage(content=system_prompt),

HumanMessage(content="Hi! I'm DJ"),

AIMessage(content="Hello DJ! How can I assist you today?"),

]

Now, when you ask a question, the model will not use any external data; it will respond only based on the JSON data provided in the SystemMessage.

Putting everything together, you can generate a response from a given set of JSON data as shown below.

import json

import os

from dotenv import load_dotenv

from openai import OpenAI

from langchain_openai import ChatOpenAI

from langchain.schema import HumanMessage, SystemMessage

from langchain_core.messages import AIMessage

load_dotenv()

data = {

"company": {

"name": "NomadCoder AI",

"founded": 2024,

"employees": 5,

"location": "San Francisco",

"industry": "Technology"

},

"training": [

{"name": "Angular ", "price": 100},

{"name": "React", "price": 200},

{"name": "Lanchain ", "price": 500}

]

}

data_string = json.dumps(data, indent=2)

system_prompt = f"""Answer questions based only on the data provided below.

If the answer is not found in the data, reply: 'Not available in provided data.'

Data:{data_string}"""

model = ChatOpenAI(

model="gpt-3.5-turbo",

api_key=os.getenv("OPENAI_API_KEY")

)

def chat():

try:

messages = [

SystemMessage(content=system_prompt),

HumanMessage(content="Hi! I'm DJ"),

AIMessage(content="Hello DJ! How can I assist you today?"),

]

print("Chat started! Type 'quit' to exit.")

print("-" * 30)

while True:

# Ask user for input

user_input = input("You: ").strip()

# Check if user wants to exit

if user_input.lower() in ['exit']:

print("Goodbye!")

break

if not user_input:

continue

messages.append(HumanMessage(content=user_input))

response = model.invoke(messages)

messages.append(response)

print(f"AI: {response.content}")

print()

except Exception as e:

print(f"Error: {e}")

return None

if __name__ == "__main__":

chat()

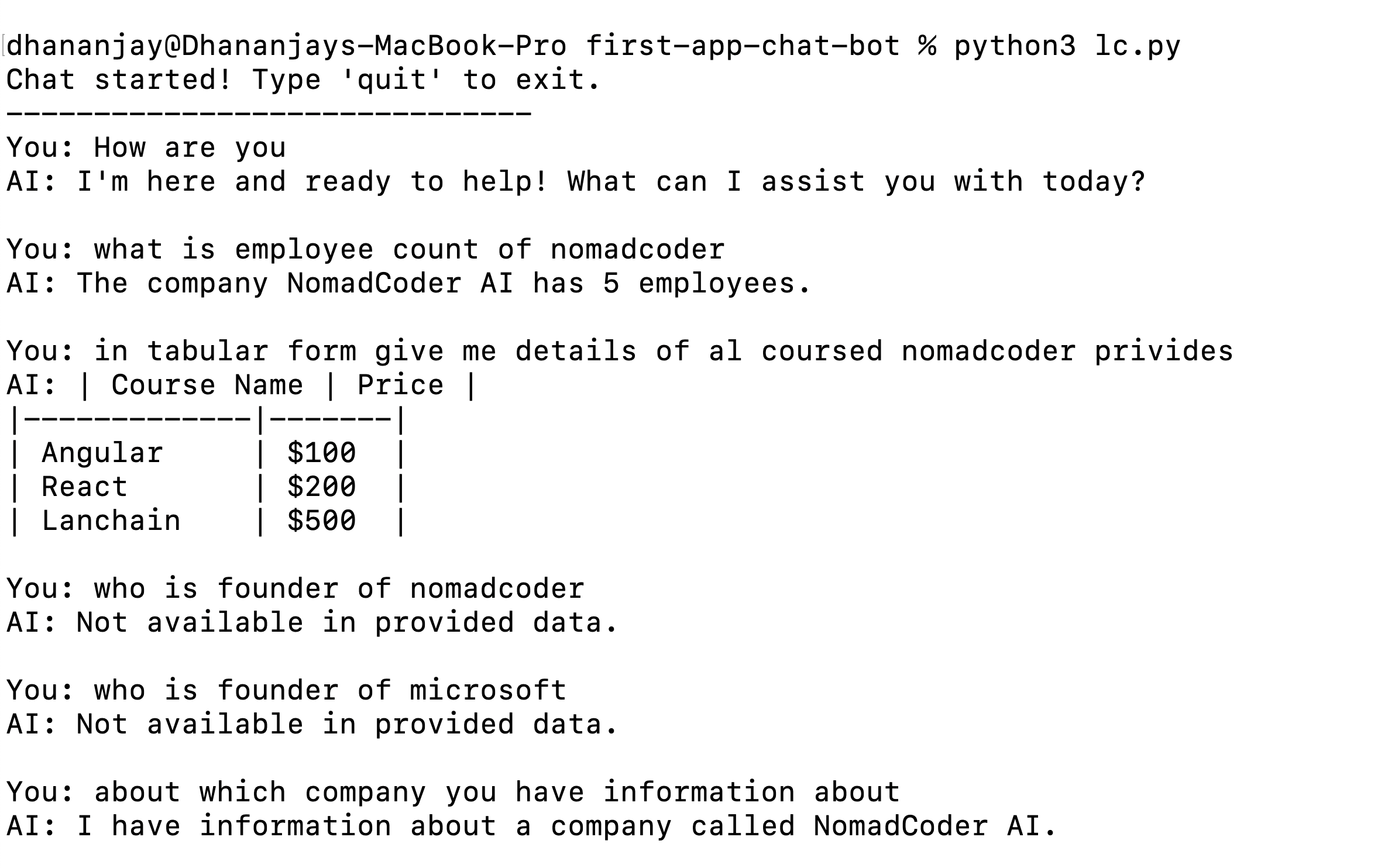

You should get the expected output as shown below.

As you can see, the model is generating responses only based on the JSON provided in the system prompt.

Summary

This article provided you with basic steps to get started with AI development with LangChain and OpenAI.

To learn about LangChain and OpenAI with TypeScript, read this post: How to Build Your First LLM Application Using LangChain and TypeScript.

Dhananjay Kumar

Dhananjay Kumar is the founder of nomadcoder, an AI-driven developer community and training platform in India. Through nomadcoder, he organizes leading tech conferences such as ng-India and AI-India. He partners with startups to rapidly build MVPs and ship production-ready applications. His expertise spans Angular, modern web architecture and AI agents, and he is available for training, consulting or product acceleration from Angular to API to agents.