See how user interfaces are benefiting from LLMs and how you can start integrating them into your Blazor, Angular, React or Vue app.

The rise of Large Language Models (LLMs) in tech is reshaping UI/UX development. LLMs are becoming key in creating user-friendly interfaces that mimic human communication, making digital experiences more intuitive and responsive. This trend points toward a future where digital interfaces are more than just tools—they’re evolving into entities that understand and interact naturally with users, thereby enhancing the digital experience.

Common Trends and Patterns in LLM and UI Integration

Throughout this blog series, we’ll highlight different ways to integrate LLMs with UIs using Telerik and Kendo UI components and share practical insights for developers and designers, showing how to build efficient, user-friendly AI application interfaces with minimal effort.

In the evolving world of AI Large Language Models (LLMs), UI design is crucial for enhancing user experience. Let’s explore some of the common UI/UX approaches that are currently shaping how we interact with AI-driven tools.

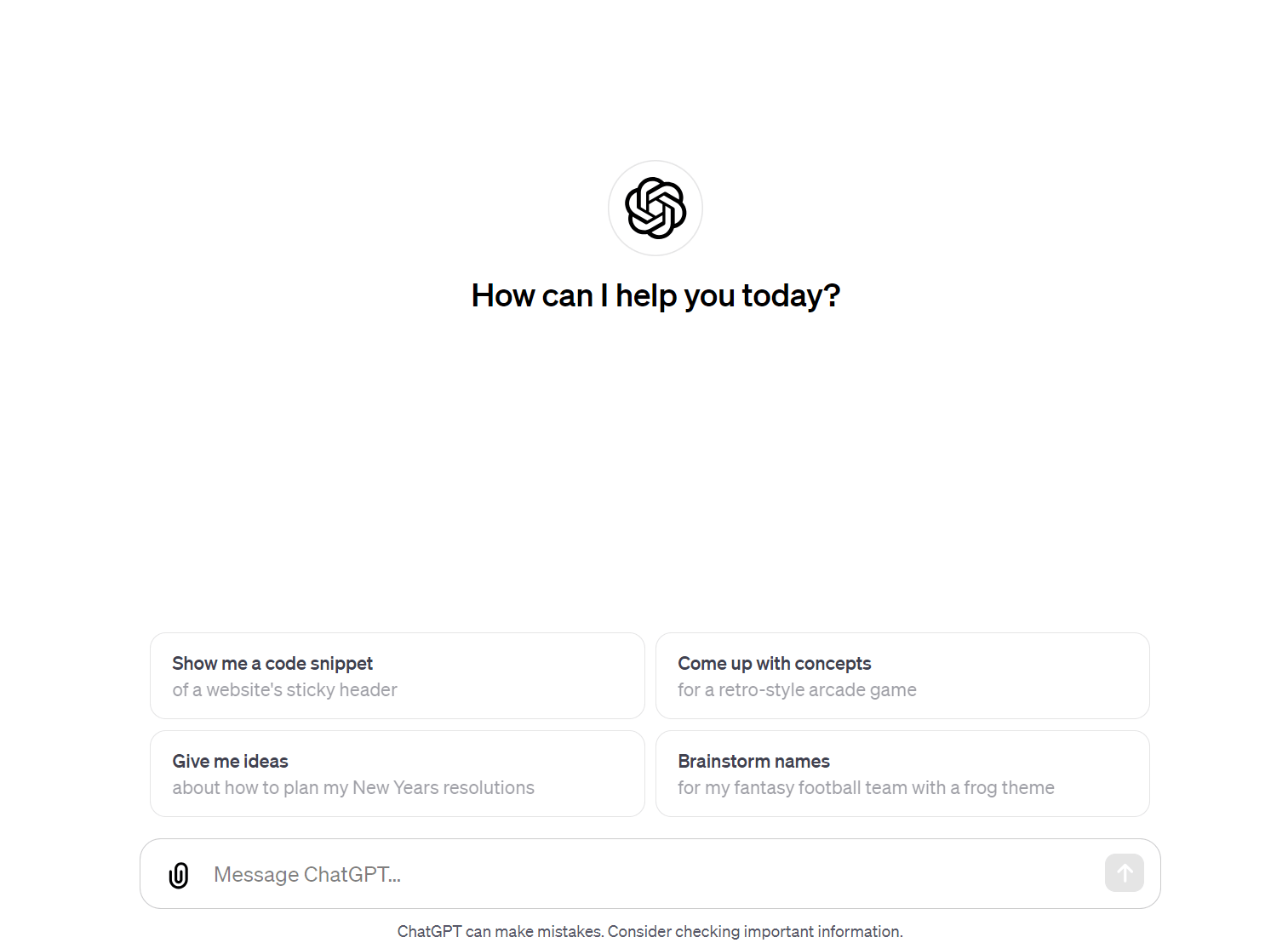

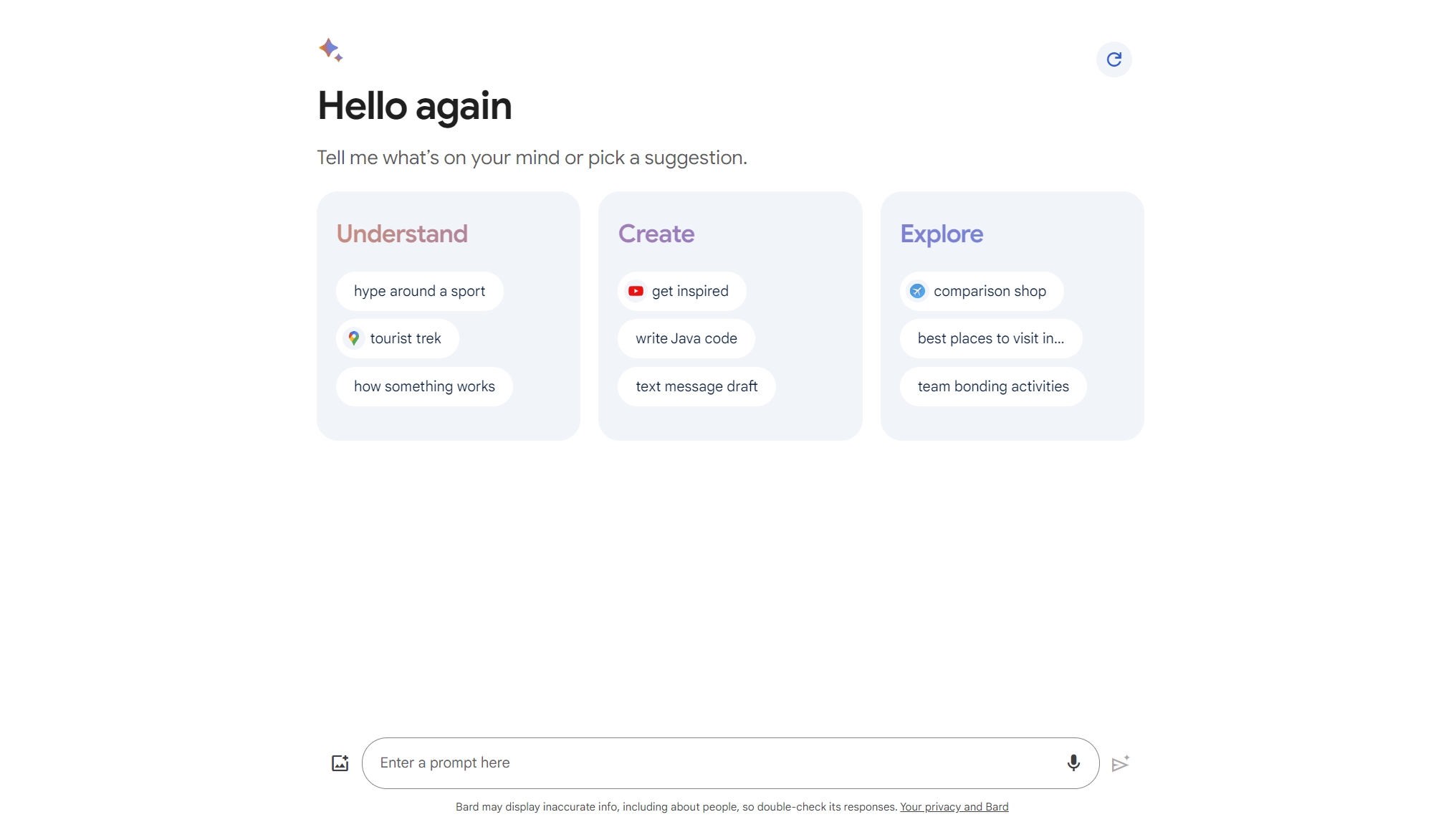

1. Standalone Sites

AI apps like ChatGPT and Bard function as standalone websites, featuring a central input bar for user queries. This layout largely borrows from established web and mobile UI/UX designs, reflecting a familiar structure that users can navigate easily.

ChatGPT

Such GenAI UI implementations excel at providing a responsive interface that is ideal for reading and navigating extensive text, and they offer a focused, comprehensive search experience. However, this approach is a poor fit when you want to integrate GenAI into the larger context of a purpose-built application.

Bard

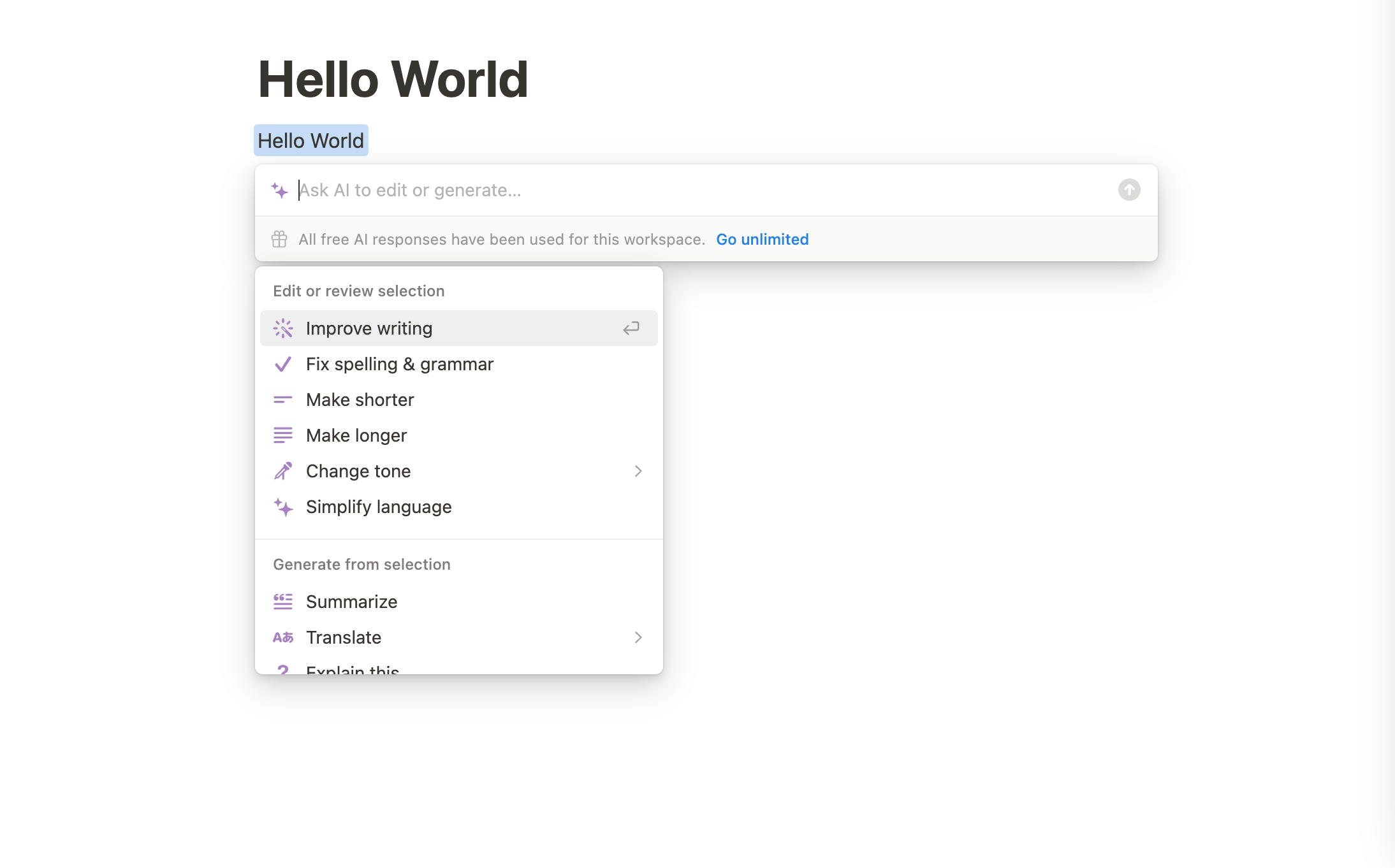

2. Taskbars

GenAI models are also being integrated as taskbars within websites, as seen with notion.so. These taskbars remain discreet until activated by user commands or shortcuts.

Notion

This approach doesn’t dominate the user interface but instead remains subtly integrated in a larger application context. This design choice ensures that the AI features don’t disrupt the user’s workflow or distract from the primary functions of your website.

3. Widgets

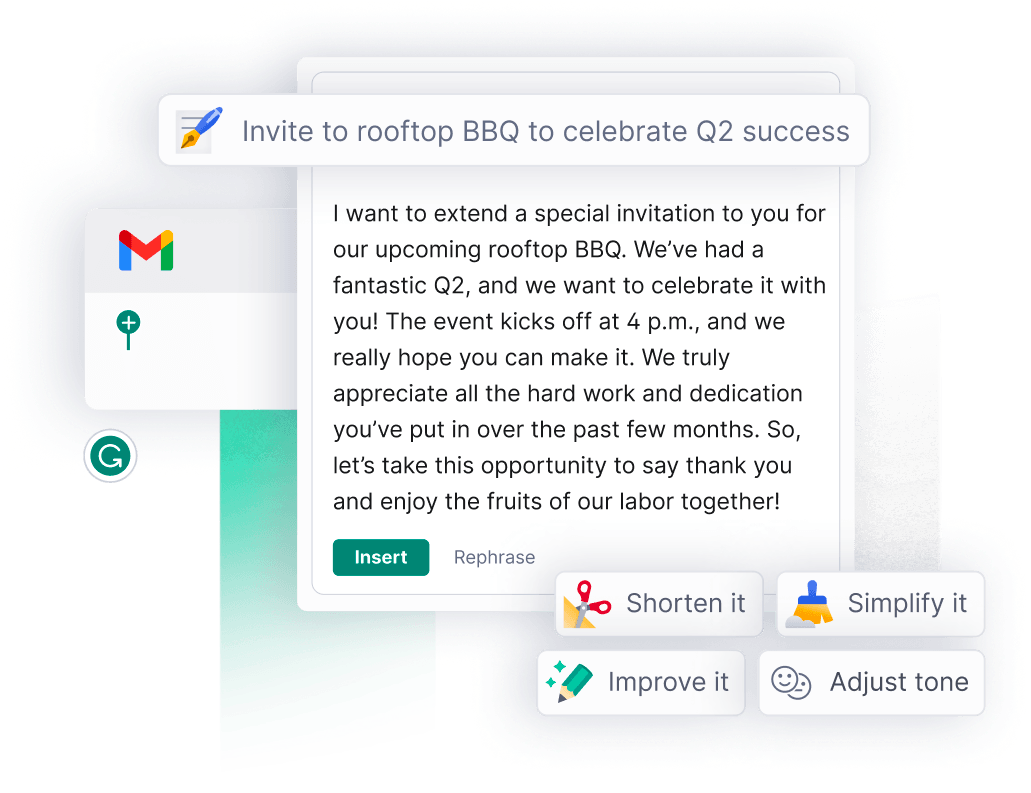

The use of widgets is an emerging trend, distinguishing AI LLMs from traditional chatbots.

GrammarlyGo exemplifies this, offering specialized AI-enhanced prompts and commands. Positioned for convenience, these widgets aim to be user-friendly, though they sometimes face limitations in space, especially with longer responses.

Grammarly

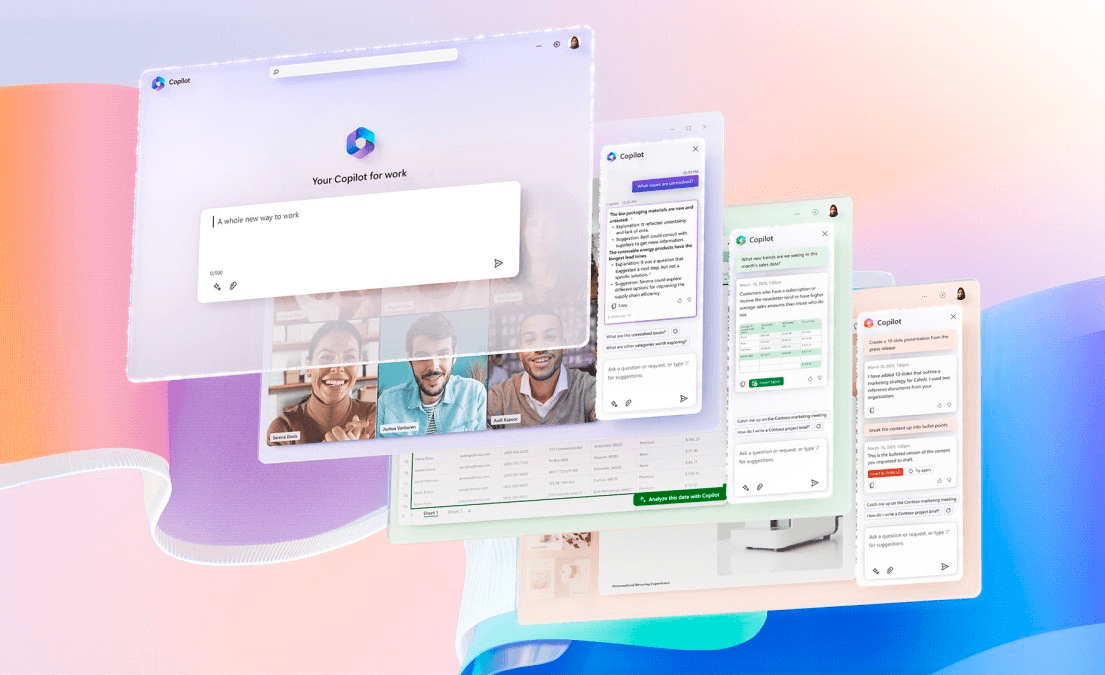

4. Combining It All: Widgets, Taskbars and Standalone Sites

Some AI applications are merging these approaches for a comprehensive UI/UX experience. A good example is Microsoft Copilot, which integrates a chat widget for generating prompts, a taskbar for specific commands and a landing page for content creation, encapsulating the benefits of each approach.

Copilot

As we navigate through these varied UI designs, it’s clear that the focus is on creating intuitive, efficient and versatile interfaces. These developments are steering us toward a future where AI is seamlessly woven into our digital interactions, enhancing both functionality and user engagement.

While the current state of UI/UX design for GenAI is functional, it often lacks the ease and fluidity needed for an optimal user experience. That friction is what we are committed to eliminating.

Our goal at Progress is simple yet profound: to reduce the clutter of visual interfaces and enable users to execute tasks through direct, intuitive AI commands, aligning digital experiences more closely with natural human behavior.

Introducing the Telerik and Kendo UI AI Prompt Component

The new AI Prompt component in our component libraries is designed to streamline the integration of your favorite AI services into applications built with Kendo UI for Angular, KendoReact, Kendo UI for Vue and Telerik UI for Blazor. Its main features are:

- Input Prompts & Prompt Suggestions:
  - Allows for input of custom queries and selection from predefined, suggested queries for the AI engine.
  - Customizable list of suggestions, enabling developers to tailor options to end-user needs.

- Configurable Actions Menu:
  - Contains predefined actions for AI interactions, fully customizable in terms of order, text, icons and hierarchy.
  - Facilitates direct access to specific AI functionalities, improving user efficiency.

- Query Results Display:
  - Designated area to display the results from AI interactions.
  - Configurable display settings (enable/disable) to accommodate various user preferences.
  - Focused on presenting text-based results for clear and concise feedback.

- Reprocessing and User Feedback Options:
  - Option for users to reprocess previous queries for smoother, iterative interactions.
  - Features to copy results to the clipboard and upvote/downvote AI responses, allowing users to provide feedback and easily use the information elsewhere.

These features aim to provide an efficient user interface for interacting with AI services, focusing on enhancing usability while offering developers flexibility in customization.
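To make the feature list a bit more concrete, here is a rough, library-agnostic sketch of the state such a component has to track: suggestions, a history of results, retries and ratings. All type and function names below (`PromptEntry`, `PromptPanelState`, `addEntry`, `retryEntry`, `rateEntry`) are invented for illustration and are not part of the Telerik or Kendo UI API.

```typescript
// Illustrative model only; these names are assumptions, not the component's real API.
type EntryRating = "positive" | "negative" | "unrated";

interface PromptEntry {
  id: number;
  prompt: string;        // what the user asked
  responseText: string;  // text-based result shown in the output area
  isRetry: boolean;      // true when produced by reprocessing an earlier prompt
  rating: EntryRating;   // upvote / downvote feedback
}

interface PromptPanelState {
  suggestions: string[];  // predefined prompts offered to the user
  entries: PromptEntry[]; // newest first
}

// Record a new result; returns a new state instead of mutating the old one.
function addEntry(
  panel: PromptPanelState,
  prompt: string,
  responseText: string,
  isRetry: boolean = false
): PromptPanelState {
  const entry: PromptEntry = {
    id: panel.entries.length + 1,
    prompt,
    responseText,
    isRetry,
    rating: "unrated",
  };
  return { suggestions: panel.suggestions, entries: [entry].concat(panel.entries) };
}

// Reprocess an earlier prompt: same prompt text, fresh response, flagged as a retry.
function retryEntry(panel: PromptPanelState, id: number, newResponseText: string): PromptPanelState {
  const original = panel.entries.filter((e) => e.id === id)[0];
  if (!original) {
    return panel; // unknown id: nothing to retry
  }
  return addEntry(panel, original.prompt, newResponseText, true);
}

// Store the user's upvote or downvote on a single entry.
function rateEntry(panel: PromptPanelState, id: number, rating: EntryRating): PromptPanelState {
  return {
    suggestions: panel.suggestions,
    entries: panel.entries.map((e) => (e.id === id ? { ...e, rating } : e)),
  };
}
```

Keeping every update as a function that returns a new state object is a design choice that tends to fit React state and Angular change detection alike, but the real components manage much of this for you.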

The snippets below show a basic setup for Blazor, Angular and React:

Blazor Code

    <TelerikAIPrompt OnPromptRequest="@HandlePromptRequest"
                     OnCommandExecute="@HandleCommandExecute"
                     Commands="@PromptCommands">
    </TelerikAIPrompt>

    @code {
        public List<AIPromptCommandDescriptor> PromptCommands { get; set; } = new List<AIPromptCommandDescriptor>()
        {
            new AIPromptCommandDescriptor() { Id = "1", Title = "Correct Spelling and grammar", Icon = nameof(SvgIcon.SpellChecker)},
            new AIPromptCommandDescriptor()
            {
                Id = "2",
                Title = "Change Tone",
                Icon = nameof(SvgIcon.TellAFriend),
                Children = new List<AIPromptCommandDescriptor>
                {
                    new AIPromptCommandDescriptor() { Id = "3", Title = "Professional" },
                    new AIPromptCommandDescriptor() { Id = "4", Title = "Conversational" },
                    new AIPromptCommandDescriptor() { Id = "5", Title = "Humorous" },
                    new AIPromptCommandDescriptor() { Id = "6", Title = "Empathic" },
                    new AIPromptCommandDescriptor() { Id = "7", Title = "Academic" },
                }
            },
        };

        public void HandlePromptRequest(AIPromptPromptRequestEventArgs args)
        {
            // execute call to LLM API
            // args.Output = responseMessage;
        }

        public void HandleCommandExecute(AIPromptCommandExecuteEventArgs args)
        {
            // execute command
            // args.Output = responseMessage;
        }
    }

Angular Code

    import { Component } from '@angular/core';
    import { PromptCommand, CommandExecuteEvent, PromptOutput, PromptRequestEvent } from '@progress/kendo-angular-conversational-ui';
    import { spellCheckerIcon, tellAFriendIcon } from '@progress/kendo-svg-icons';

    @Component({
        selector: 'my-app',
        template: `
            <kendo-aiprompt [style.width.px]="300"
                [(activeView)]="activeView"
                [promptOutputs]="promptOutputs"
                [promptCommands]="promptCommands"
                (promptRequest)="onPromptRequest($event)"
                (commandExecute)="onCommandExecute($event)"
            >
                <kendo-aiprompt-prompt-view></kendo-aiprompt-prompt-view>
                <kendo-aiprompt-output-view></kendo-aiprompt-output-view>
                <kendo-aiprompt-command-view></kendo-aiprompt-command-view>
            </kendo-aiprompt>
        `
    })
    export class AppComponent {
        public promptCommands: Array<PromptCommand> = [{
            id: 1,
            text: "Correct Spelling and grammar",
            svgIcon: spellCheckerIcon
        }, {
            id: 2,
            text: "Change Tone - Professional",
            svgIcon: tellAFriendIcon
        }, {
            id: 3,
            text: "Change Tone - Conversational",
            svgIcon: tellAFriendIcon
        }, {
            id: 4,
            text: "Change Tone - Humorous",
            svgIcon: tellAFriendIcon
        }, {
            id: 5,
            text: "Change Tone - Empathic",
            svgIcon: tellAFriendIcon
        }, {
            id: 6,
            text: "Change Tone - Academic",
            svgIcon: tellAFriendIcon
        }];

        public activeView: number = 0;
        public promptOutputs: Array<PromptOutput> = [];

        public onPromptRequest(ev: PromptRequestEvent): void {
            // execute call to LLM API
            // populate the outputs collection
            // switch to the output view
        }

        public onCommandExecute(ev: CommandExecuteEvent): void {
            // execute command
            // populate the outputs collection
            // switch to the output view
        }
    }
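The three commented steps in the handlers above (call the LLM, populate the outputs collection, switch to the output view) could look roughly like this in plain TypeScript. This is only a sketch under a few assumptions. `OutputItem`, `PromptViewState`, `LlmCall`, `withLlmResponse` and `handlePromptRequest` are hypothetical names rather than Kendo UI types, the LLM client is passed in as a function so you can plug in any service, and the output view is assumed to sit at index 1 because it is declared second in the template.

```typescript
// Hypothetical shapes for illustration; the real component types differ per framework.
interface OutputItem {
  id: number;
  prompt: string;
  text: string;
}

interface PromptViewState {
  activeView: number;
  outputs: OutputItem[];
}

// Assumption: views are ordered prompt (0), output (1), commands (2).
const OUTPUT_VIEW_INDEX = 1;

// Stand-in for a real LLM client: takes a prompt, resolves to the response text.
type LlmCall = (prompt: string) => Promise<string>;

// Steps 2 and 3: add the response to the outputs and switch to the output view.
function withLlmResponse(current: PromptViewState, prompt: string, text: string): PromptViewState {
  const item: OutputItem = { id: current.outputs.length + 1, prompt, text };
  return { activeView: OUTPUT_VIEW_INDEX, outputs: [item].concat(current.outputs) };
}

// Step 1 plus the two steps above; a failed call still lands in the output view.
async function handlePromptRequest(
  current: PromptViewState,
  prompt: string,
  callLlm: LlmCall
): Promise<PromptViewState> {
  try {
    const text = await callLlm(prompt);
    return withLlmResponse(current, prompt, text);
  } catch {
    return withLlmResponse(current, prompt, "The request failed. Please try again.");
  }
}
```

In a component you would assign the returned `outputs` and `activeView` to the bound properties (or to React state), and a command handler could reuse the same function by turning the selected command into a prompt first.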

React Code

    import React, { useState } from 'react';
    import { AIPrompt, AIPromptView, AIPromptOutputView, AIPromptCommandsView } from '@progress/kendo-react-conversational-ui';
    import { spellCheckerIcon, tellAFriendIcon } from '@progress/kendo-svg-icons';
    // promptView, outputView and commandsView (the toolbar item definitions) are assumed to be in scope;
    // check the KendoReact documentation for the exact export names in your version.

    const AIPromptComponent = () => {
        // State for managing active view and outputs
        const [activeView, setActiveView] = useState(0);
        const [outputs, setOutputs] = useState([]);

        // Handle change in active view
        const handleActiveViewChange = (newView) => {
            setActiveView(newView);
        };

        // Handle the prompt request
        const handleGenerate = (event) => {
            // execute call to LLM API
            // populate the outputs collection
            // switch to the output view
        };

        // Handle command execution
        const handleCommandExecute = (event) => {
            // execute command based on the event details
            // populate the outputs collection
            // switch to the output view
        };

        return (
            <AIPrompt
                activeView={activeView}
                onActiveViewChange={handleActiveViewChange}
                onPromptRequest={handleGenerate}
                onCommandExecute={handleCommandExecute}
                toolbarItems={[promptView, outputView, commandsView]}
            >
                <AIPromptView />
                <AIPromptOutputView outputs={outputs}/>
                <AIPromptCommandsView
                    commands={[{
                        id: '1',
                        title: "Correct Spelling and grammar",
                        icon: spellCheckerIcon as any
                    }, {
                        id: '2',
                        title: "Change Tone",
                        icon: tellAFriendIcon as any,
                        children: [
                            { id: "3", title: "Professional" },
                            { id: "4", title: "Conversational" },
                            { id: "5", title: "Humorous" },
                            { id: "6", title: "Empathic" },
                            { id: "7", title: "Academic" }
                        ]
                    }]}
                />
            </AIPrompt>
        );
    };

    export default AIPromptComponent;
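You may have noticed that the Blazor and React samples nest the tone options under a "Change Tone" parent, while the Angular sample lists each tone as its own flat command. If you want to keep a single nested definition and derive the flat list from it, a small helper along these lines might do. `CommandNode`, `FlatCommand` and `flattenCommands` are hypothetical names made up for this sketch and are not part of any Kendo UI package.

```typescript
// Illustrative shapes only; they mirror the id/title/children fields used in the samples.
interface CommandNode {
  id: string;
  title: string;
  children?: CommandNode[];
}

interface FlatCommand {
  id: string;
  text: string;
}

// Turn nested commands into a flat list, prefixing each child with its parent's title.
function flattenCommands(nodes: CommandNode[], parentTitle: string = ""): FlatCommand[] {
  const flat: FlatCommand[] = [];
  for (const node of nodes) {
    const text = parentTitle ? `${parentTitle} - ${node.title}` : node.title;
    if (node.children && node.children.length > 0) {
      // A parent only groups its children, so it is not emitted itself.
      flat.push(...flattenCommands(node.children, text));
    } else {
      flat.push({ id: node.id, text });
    }
  }
  return flat;
}

const nestedCommands: CommandNode[] = [
  { id: "1", title: "Correct Spelling and grammar" },
  {
    id: "2",
    title: "Change Tone",
    children: [
      { id: "3", title: "Professional" },
      { id: "4", title: "Conversational" },
    ],
  },
];

const flatCommands = flattenCommands(nestedCommands);
// flatCommands[1].text is "Change Tone - Professional"
```

The helper keeps the original child ids rather than renumbering them as the Angular sample does, so you would still need to map the result onto whatever command shape your framework expects, including icons.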

Conclusion

Progress is aiming for seamless technology interaction with the integration of LLMs in UI development. As AI continues to advance, we’re committed to adapting our UI components to stay at the forefront of digital interaction trends.

We’re eager to hear from you. Tell us what you would like to see from us regarding AI and UI components in the comments or in the Feedback Portal.

Lyubomir Atanasov

Lyubomir Atanasov is a Product Manager with a passion for software development, UI design, and design thinking. He excels in creating impactful and innovative software products by challenging the status quo, collaborating closely with customers, and embracing a spirit of experimentation. His dedication to delivering exceptional UI solutions makes him an invaluable asset, providing immense value through his expertise and innovative approach. Follow him on LinkedIn.