5 .NET Standard Features You Shouldn’t Miss


It’s amazing to see so much energy in the .NET community. Can you believe there’s a C# Advent Calendar? That means there are 25 all-new articles (including this one) for the month of December!

As the .NET ecosystem moves at record speed these days, it's easy to overlook some amazing things happening in this space. There are constantly new tools and features being released that can easily increase productivity. Let's take a look at a few features Microsoft recently shipped that help with testing, front-end development and cross-platform migrations.

InMemory Database Provider for Entity Framework Core

Entity Framework (EF) Core now targets .NET Standard 2.0, which means it can be used on any .NET implementation that supports .NET Standard 2.0, such as .NET Core 2.0 and .NET Framework 4.6.1. While EF Core boasts many new features, the InMemory database provider is one that shouldn't go unnoticed. This database provider allows Entity Framework Core to be used with an in-memory database and was designed for testing purposes.

The InMemory provider is useful when testing a service that performs operations using a DbContext to connect with a SQL Server database. Since the DbContext can be swapped with one using the InMemory database provider, we can avoid elaborate test setups, code modification or test doubles. Even though it is explicitly designed for testing, the InMemory provider also makes short work of demos, prototypes, and other non-production sample projects.

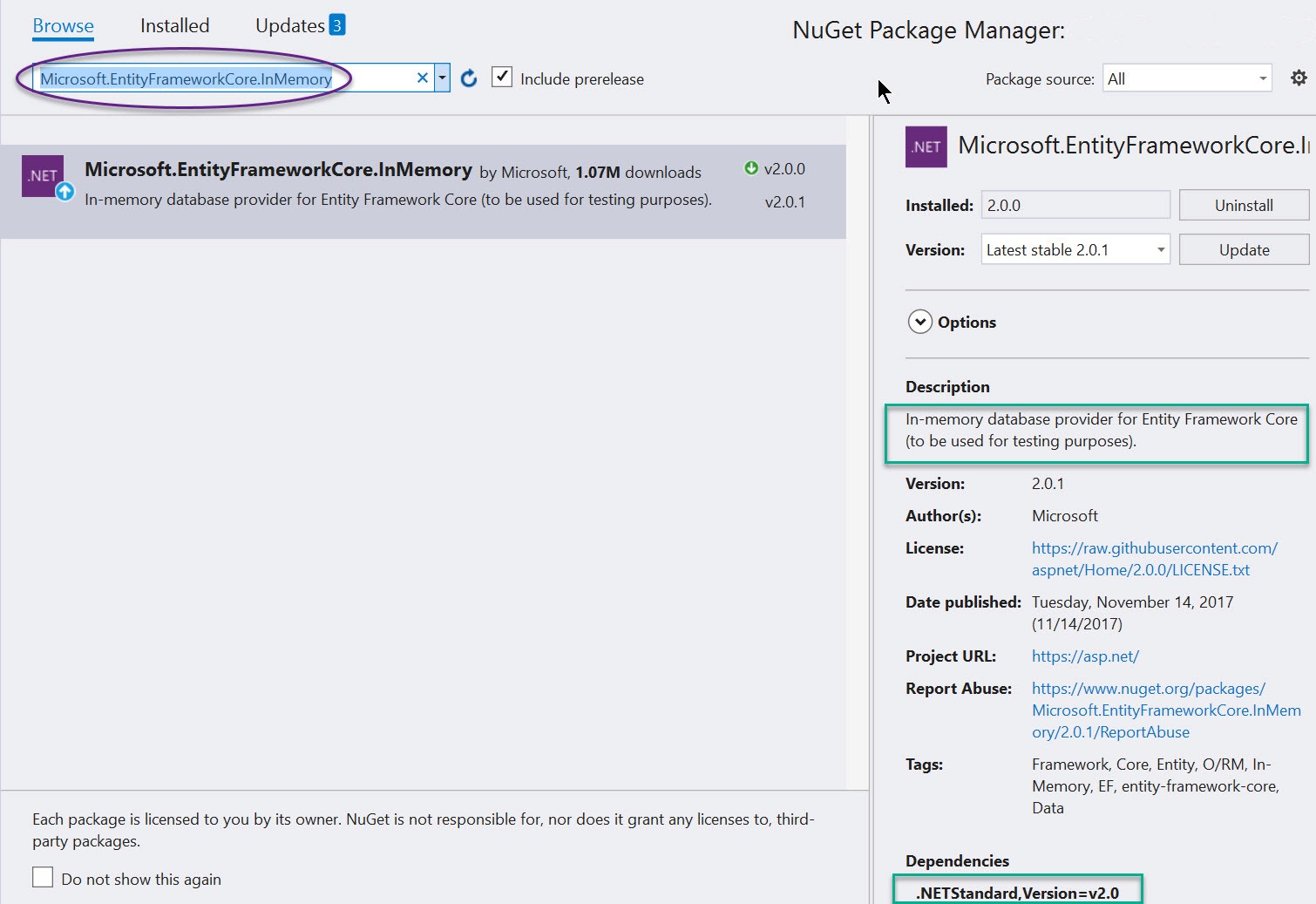

The InMemory provider can be added to a project using NuGet by installing the Microsoft.EntityFrameworkCore.InMemory package.

Package Manager Console:

PM> Install-Package Microsoft.EntityFrameworkCore.InMemory -Version 2.0.1

.NET Core CLI:

$ dotnet add package Microsoft.EntityFrameworkCore.InMemory

Visual Studio NuGet Package Manager: search for Microsoft.EntityFrameworkCore.InMemory on the Browse tab and click Install.

Once the InMemory provider is set up, we'll need to add a constructor to our DbContext that accepts a DbContextOptions<TContext> object. The DbContextOptions object will allow us to specify the InMemory provider via configuration, thus allowing us to swap the provider as needed.

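```csharp
using Microsoft.EntityFrameworkCore;

public class Blog
{
    public int BlogId { get; set; }   // primary key by convention
    public string Url { get; set; }
}

public class BloggingContext : DbContext
{
    public BloggingContext()
    { }

    // Lets callers (such as tests) choose the provider, e.g. the InMemory provider
    public BloggingContext(DbContextOptions<BloggingContext> options)
        : base(options)
    { }

    public DbSet<Blog> Blogs { get; set; }
}
```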
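To illustrate swapping providers through DbContextOptions, here is a minimal sketch of a hypothetical BloggingContextFactory that builds the same context for either SQL Server or the InMemory provider. The factory name and the database name "Blogging" are assumptions, and UseSqlServer requires the Microsoft.EntityFrameworkCore.SqlServer package in addition to the InMemory package.

```csharp
using Microsoft.EntityFrameworkCore;

// Hypothetical helper: builds a BloggingContext for either provider.
// UseSqlServer needs Microsoft.EntityFrameworkCore.SqlServer;
// UseInMemoryDatabase needs Microsoft.EntityFrameworkCore.InMemory.
public static class BloggingContextFactory
{
    public static BloggingContext Create(bool useInMemory, string connectionString = null)
    {
        var builder = new DbContextOptionsBuilder<BloggingContext>();

        if (useInMemory)
        {
            // Test/demo configuration: no database server required.
            builder.UseInMemoryDatabase(databaseName: "Blogging");
        }
        else
        {
            // Production configuration: a real SQL Server database.
            builder.UseSqlServer(connectionString);
        }

        // The context class is unchanged either way; it only sees the options.
        return new BloggingContext(builder.Options);
    }
}
```

In an ASP.NET Core app you would more typically register the context with services.AddDbContext, but the principle is the same: the provider is chosen when the options are built, not inside the context.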

The UseInMemoryDatabase extension method configures the context to connect to an in-memory database. Once the database connection is established, we can seed it with data and run our tests.

```csharp
using System.Linq;
using Microsoft.EntityFrameworkCore;
using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]
public class BlogServiceTests
{
    [TestMethod]
    public void Find_searches_url()
    {
        var options = new DbContextOptionsBuilder<BloggingContext>()
            .UseInMemoryDatabase(databaseName: "Find_searches_url")
            .Options;

        // Insert seed data into the database using one instance of the context
        using (var context = new BloggingContext(options))
        {
            context.Blogs.Add(new Blog { Url = "http://sample.com/cats" });
            context.Blogs.Add(new Blog { Url = "http://sample.com/catfish" });
            context.Blogs.Add(new Blog { Url = "http://sample.com/dogs" });
            context.SaveChanges();
        }

        // Use a clean instance of the context to run the test
        using (var context = new BloggingContext(options))
        {
            var service = new BlogService(context);
            var result = service.Find("cat");
            Assert.AreEqual(2, result.Count());
        }
    }
}
```
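The test above exercises a BlogService class that isn't shown. Here is a minimal sketch modeled on the sample in the Entity Framework Core testing documentation; it assumes BloggingContext exposes a DbSet&lt;Blog&gt; Blogs property and that Blog has a Url property, as the test implies.

```csharp
using System.Collections.Generic;
using System.Linq;

// Sketch of the service under test; it only depends on BloggingContext,
// so it neither knows nor cares which EF provider is configured.
public class BlogService
{
    private readonly BloggingContext _context;

    public BlogService(BloggingContext context)
    {
        _context = context;
    }

    // Returns every blog whose URL contains the search term, ordered by URL.
    public IEnumerable<Blog> Find(string term)
    {
        return _context.Blogs
            .Where(b => b.Url.Contains(term))
            .OrderBy(b => b.Url)
            .ToList();
    }
}
```

Because the query runs through LINQ against the context, the same code executes against SQL Server in production and against the InMemory provider in the test.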

The InMemory provider is easy to set up and offers low friction for testing business logic that uses EF. Additionally, since the database lives in memory, the data does not persist once the application restarts, providing a clean slate for each test run or demo. Despite being for "testing purposes only", it's extremely useful for creating and sharing demo code, as no actual SQL Server instance is required, yet you can still use the basic functionality of EF.

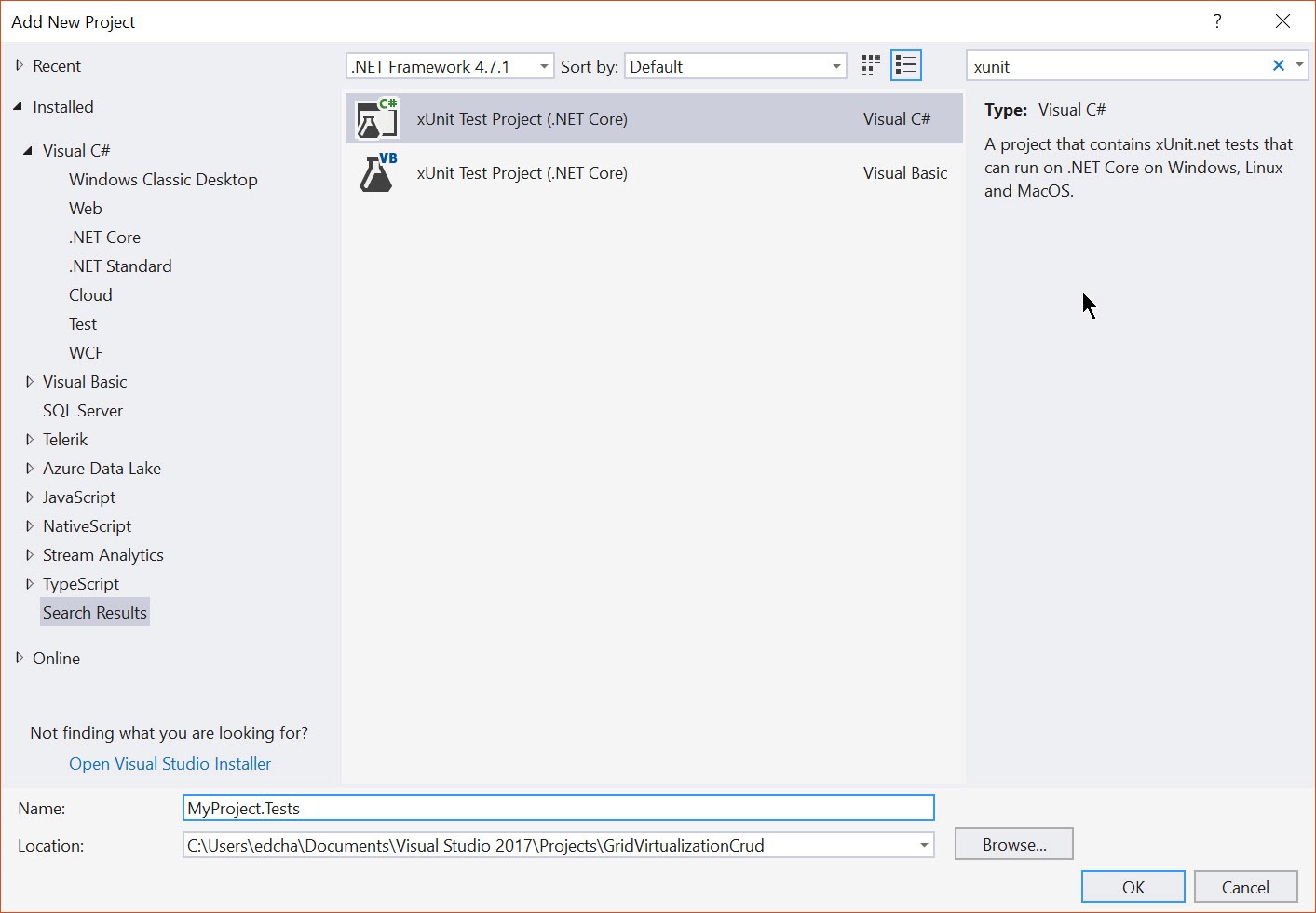

xUnit Test Projects

With over 10 million downloads according to NuGet.org, xUnit is quite a popular unit testing framework for .NET. If you're writing a .NET Core 2.0 application, then take advantage of the xUnit project template. The xUnit project template is included with .NET Core 2.0 to easily kick-start unit test projects from the .NET Core CLI or Visual Studio. While setting up xUnit has always been as simple as adding a few package references, the new project template reduces the process to a single click (or command).

.NET Core CLI:

$ dotnet new xunit

From Visual Studio: File > New (or Add) > Project, then choose xUnit Test Project (.NET Core).

The new project is initialized with all the necessary dependencies. In addition, it works seamlessly with the Visual Studio 2017 Test Explorer and supports Live Unit Testing in Visual Studio 2017 Enterprise Edition.
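To give a feel for what goes into such a project, here is a small sketch of an xUnit test class. The Calculator class is hypothetical and included only so the example compiles; the file the template actually generates is a simpler placeholder.

```csharp
using Xunit;

// Hypothetical class under test.
public static class Calculator
{
    public static int Add(int a, int b) => a + b;
}

public class CalculatorTests
{
    // [Fact] marks a test that takes no arguments.
    [Fact]
    public void Add_returns_sum()
    {
        Assert.Equal(4, Calculator.Add(2, 2));
    }

    // [Theory] runs the test once for each [InlineData] row.
    [Theory]
    [InlineData(1, 1, 2)]
    [InlineData(-1, 1, 0)]
    public void Add_handles_each_case(int a, int b, int expected)
    {
        Assert.Equal(expected, Calculator.Add(a, b));
    }
}
```

Running dotnet test from the project folder, or opening Test Explorer in Visual Studio, discovers all three test cases (one Fact plus two Theory rows).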

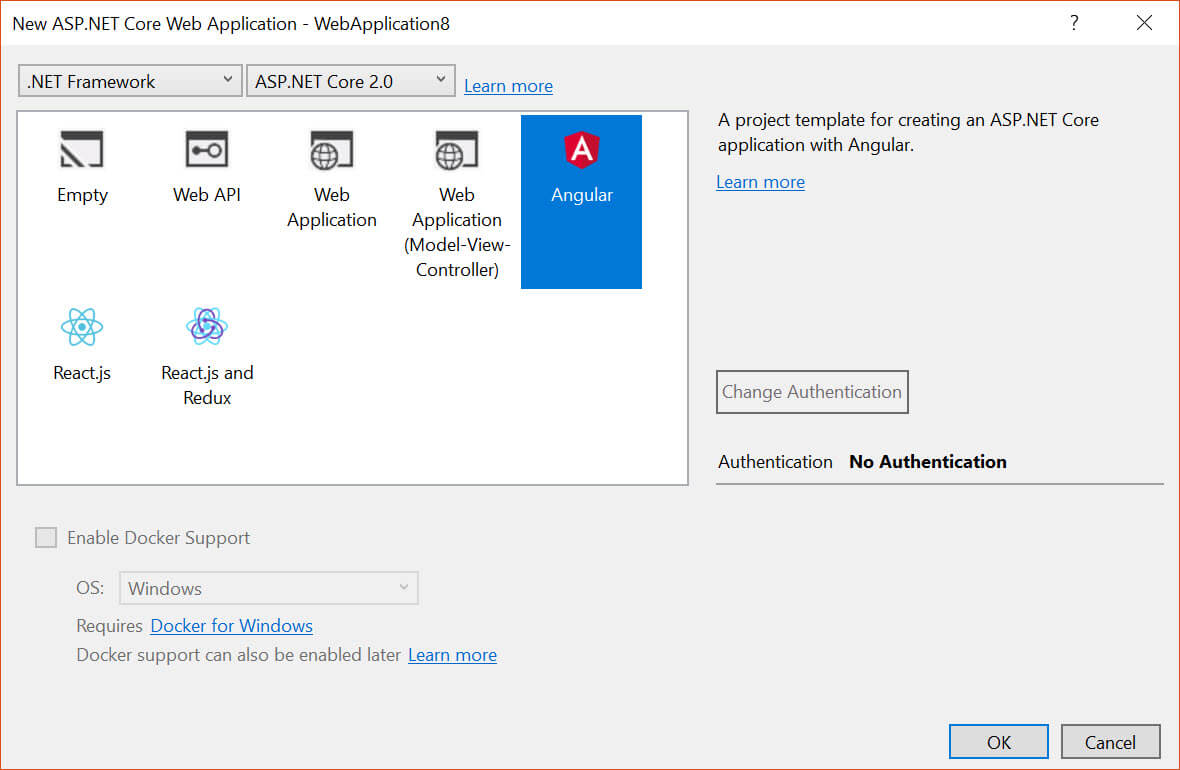

Angular & React Templates

With the 2.0 release of .NET Standard and .NET Core, new project templates were added for JavaScript Single Page Applications, or SPAs. These new project templates use a library called JavaScript Services, a set of technologies for ASP.NET Core developers built by the ASP.NET team. It provides infrastructure that you'll find useful if you use Angular, React, Knockout or a similar framework on the client. If you build your client-side resources using Webpack, or otherwise want to execute JavaScript on the server at runtime, the library supports those tasks as well. JavaScript Services is the underlying core functionality for the Angular and React project templates.

The Angular and React templates are quick-start solutions that include Webpack, ASP.NET Core and TypeScript. The templates are configured to work out of the box, so developers who are less experienced with these technologies can jump right in and start working. They are also a great way to bootstrap an application without building a complex tech stack by hand. JavaScript Services is responsible for this ease of use: Webpack abstractions are built into the templates, which reduces the learning curve.

Since the templates are included in the ASP.NET Core 2.0 release, no additional installation steps are required.

Using the templates is as straightforward as running a .NET Core CLI command or clicking File > New > Project in Visual Studio 2017.

.NET Core CLI, where the template name is angular, react or reactredux:

$ dotnet new angular

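From Visual Studio 2017: File > New > Project > ASP.NET Core Web Application, then choose the Angular, React.js, or React.js and Redux template.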

What we’re getting from the template is a highly opinionated setup where many of the nuances required to initialize a project are planned out for us. Webpack is configured to handle vendor dependencies and server-side rendering. The setup also includes processes that kick off the actual tooling, so Webpack is initialized from within the ASP.NET application at startup. In addition, Hot Module Replacement (HMR) is enabled through the application's middleware, further automating the development process.
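The middleware wiring described above lives in the template's Startup class. As a rough, trimmed sketch, assuming the ASP.NET Core 2.0 JavaScript Services APIs (UseWebpackDevMiddleware and MapSpaFallbackRoute from the Microsoft.AspNetCore.SpaServices package), the development-only Webpack middleware and the SPA fallback route might be registered like this. The route names and the Home controller are assumptions; compare against the Startup.cs your template actually generates.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.SpaServices.Webpack;
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddMvc();
    }

    public void Configure(IApplicationBuilder app, IHostingEnvironment env)
    {
        if (env.IsDevelopment())
        {
            // Runs Webpack inside the ASP.NET Core process and pushes
            // changed modules to the browser via Hot Module Replacement.
            app.UseWebpackDevMiddleware(new WebpackDevMiddlewareOptions
            {
                HotModuleReplacement = true
            });
        }

        app.UseStaticFiles();

        app.UseMvc(routes =>
        {
            routes.MapRoute(
                name: "default",
                template: "{controller=Home}/{action=Index}/{id?}");

            // Client-side routes fall back to the page hosting the SPA.
            routes.MapSpaFallbackRoute(
                name: "spa-fallback",
                defaults: new { controller = "Home", action = "Index" });
        });
    }
}
```

The fallback route sends unmatched URLs that don't refer to a file to Home/Index, so the Angular or React router can handle them on the client.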

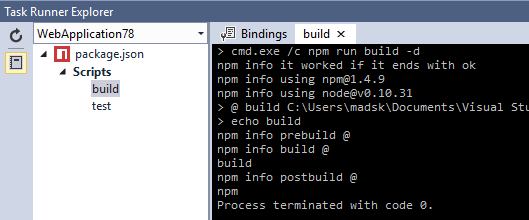

npm Task Runner

When using the Angular and React templates, a lot of time will be spent on the command line with npm. Since many of us enjoy working from Visual Studio, it can be disruptive to keep switching to a command line window to perform tasks. This is where the npm Task Runner extension comes in handy: it adds support for npm scripts defined in package.json directly to Visual Studio's Task Runner Explorer, including full support for Yarn.

It's a must-have for any developer working with npm from Visual Studio, so grab it from the Visual Studio Marketplace.

Windows Compatibility Pack for .NET Core

.NET Core 2.0 is a milestone release for backward compatibility. With .NET Core and .NET Standard 2.0, there's far less need to worry about legacy code. If there's one thing Microsoft understands well, it's that existing applications need a low-friction upgrade path, and several aspects of .NET Core make it well suited for migrating legacy code. Because .NET Standard 2.0 is at near API parity with .NET Framework 4.6.1, applications can make use of APIs they already know. In addition, .NET Core includes a compatibility shim that allows projects to reference existing .NET Framework NuGet packages and projects. This means most packages on NuGet today already work with .NET Core 2.0 without being recompiled, as long as they only use APIs available in .NET Standard 2.0.

Because .NET Core is cross-platform, some APIs that are only available on Windows were left out of it. For example, Linux does not have the Windows Registry, so Microsoft.Win32.Registry isn't available in .NET Core. This can pose a problem when migrating applications from .NET Framework to .NET Core. To overcome this issue, Microsoft has released the Windows Compatibility Pack for .NET Core (currently in preview).

The Windows Compatibility Pack provides access to an additional 20,000 APIs missing from .NET Core, bridging the gap by allowing those APIs to be used with .NET Core when the application is running on Windows. Having the ability to migrate existing applications to the next generation gives .NET developers the freedom to write future-proof code and move the business forward with minimal risk. Microsoft suggests the following migration steps to transition smoothly to other platforms.

- Migrate to ASP.NET Core (while still targeting the .NET Framework)

- Migrate to .NET Core (while staying on Windows)

- Migrate to Linux

- Migrate to Azure

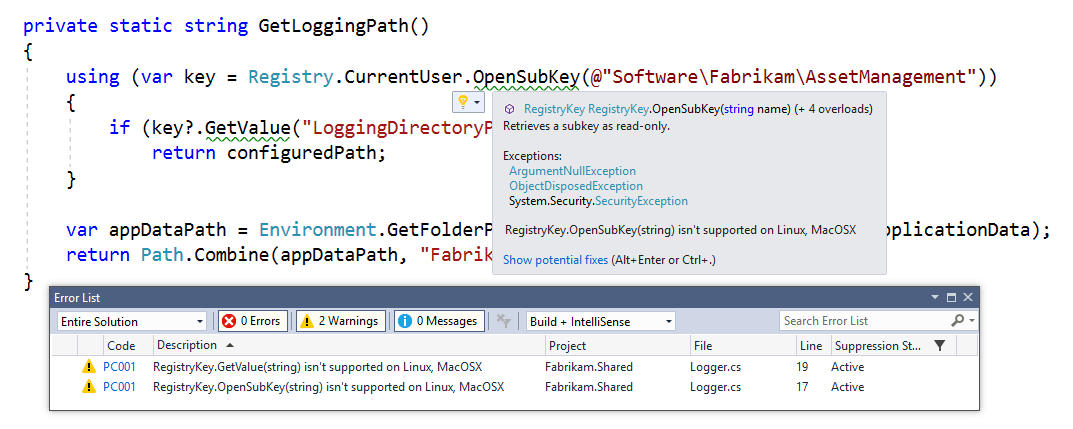

API Analyzer

If you plan on porting an application to Linux, it's important to be aware that not all APIs are supported equally on all platforms. When using .NET Core with the Windows Compatibility Pack, it's possible to call an API from an application running on Linux that isn't supported there, and the result is the dreaded PlatformNotSupportedException. This can make development a bit tedious, requiring frequent trips to the documentation to check that a particular API is available across platforms.

To make things much easier, Microsoft has released the API Analyzer, a tool that performs these checks automatically. The API Analyzer is a Roslyn-based analyzer that flags usages of Windows-only APIs when you’re targeting .NET Core and .NET Standard.

With the API Analyzer, warning messages let us know when an API isn't supported, for example: RegistryKey.GetValue(string) isn't supported on Linux, MacOSX.

When a warning is encountered, we can now take action against it by removing the feature, replacing it with one that is cross-platform or providing an alternate code path for each operating system.
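As a sketch of the last option, an alternate code path per operating system, the following reads a setting from the registry on Windows and from an environment variable elsewhere. The PlatformSettings class, the registry key and the SAMPLEAPP_ prefix are hypothetical, and the sketch assumes the Microsoft.Windows.Compatibility package (or Microsoft.Win32.Registry) is referenced so the registry APIs compile on .NET Core.

```csharp
using System;
using System.Runtime.InteropServices;
using Microsoft.Win32;

public static class PlatformSettings
{
    // Hypothetical registry location, used only for illustration.
    private const string SubKey = @"Software\Contoso\SampleApp";

    public static string GetSetting(string name)
    {
        if (RuntimeInformation.IsOSPlatform(OSPlatform.Windows))
        {
            // Windows-only path: the registry is never touched on Linux or macOS,
            // so no PlatformNotSupportedException is thrown there.
            using (RegistryKey key = Registry.CurrentUser.OpenSubKey(SubKey))
            {
                // OpenSubKey returns null when the key does not exist.
                return key?.GetValue(name) as string;
            }
        }

        // Cross-platform path: read an environment variable such as SAMPLEAPP_THEME.
        return Environment.GetEnvironmentVariable("SAMPLEAPP_" + name.ToUpperInvariant());
    }
}
```

The runtime check keeps the Windows-only call behind a guard, which is exactly the kind of usage the analyzer asks you to review.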

When developing for a cross-platform and multi-device world, tools like the API Analyzer are not only time savers but absolute necessities.

The State of .NET

The aforementioned features are just a glimpse into a much bigger picture of how .NET Standard is shaping the ecosystem. If you would like to learn more about the new capabilities of .NET, including fundamental improvements and standardization efforts that bring forth the best ideas for modern .NET development, check out our latest whitepaper, The State of .NET in 2018. You can also hear more about these features directly from Maira Wenzel of the .NET Standard documentation team on the latest episode of the Eat Sleep Code podcast.

Ed Charbeneau
