The Right Way to Adopt New Technology


Why are even experienced software engineers drawn to shiny new technology like moths to a flame? A personal account of learning the hard way: You have to balance your curiosity with your business’s goals.

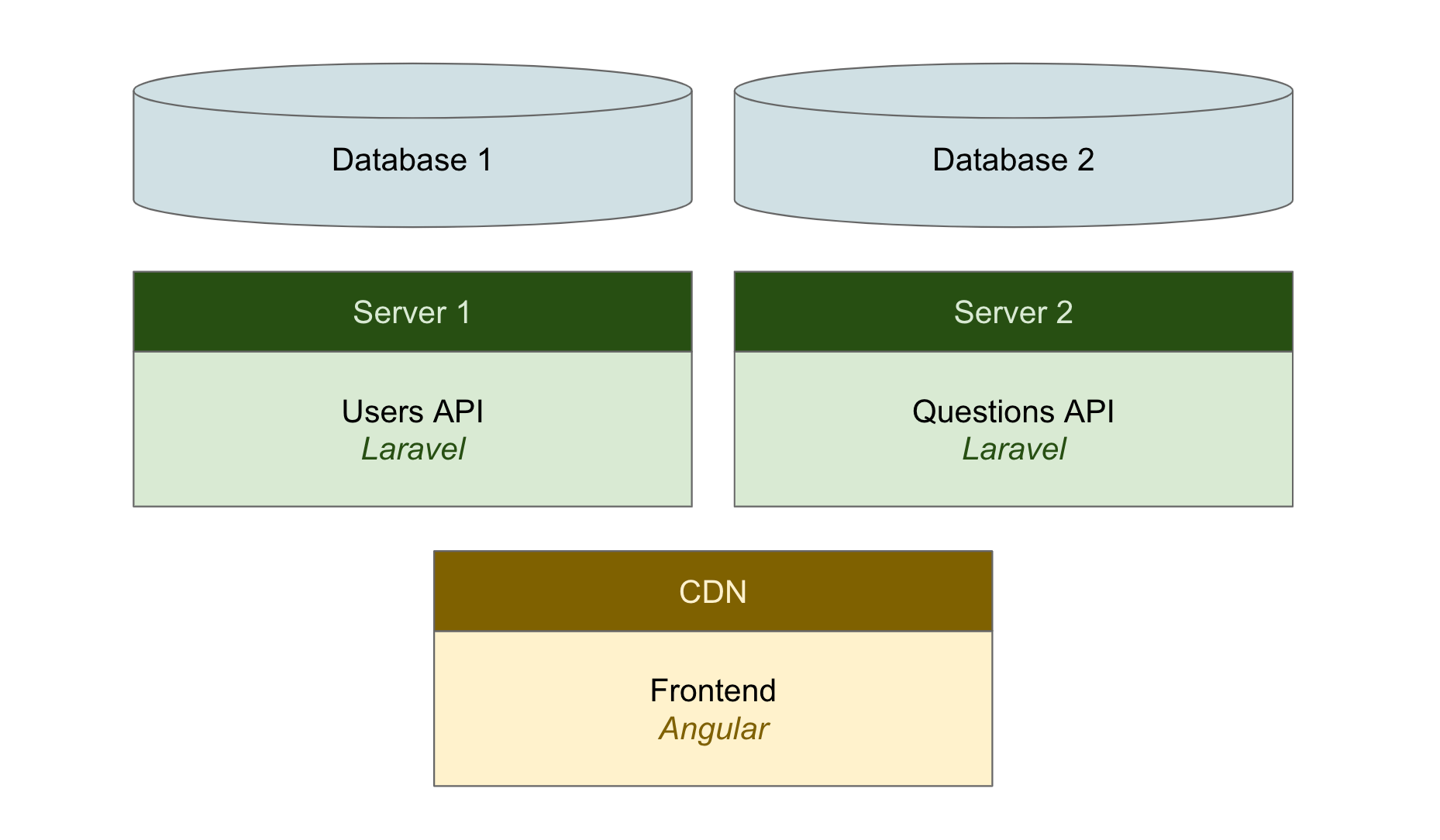

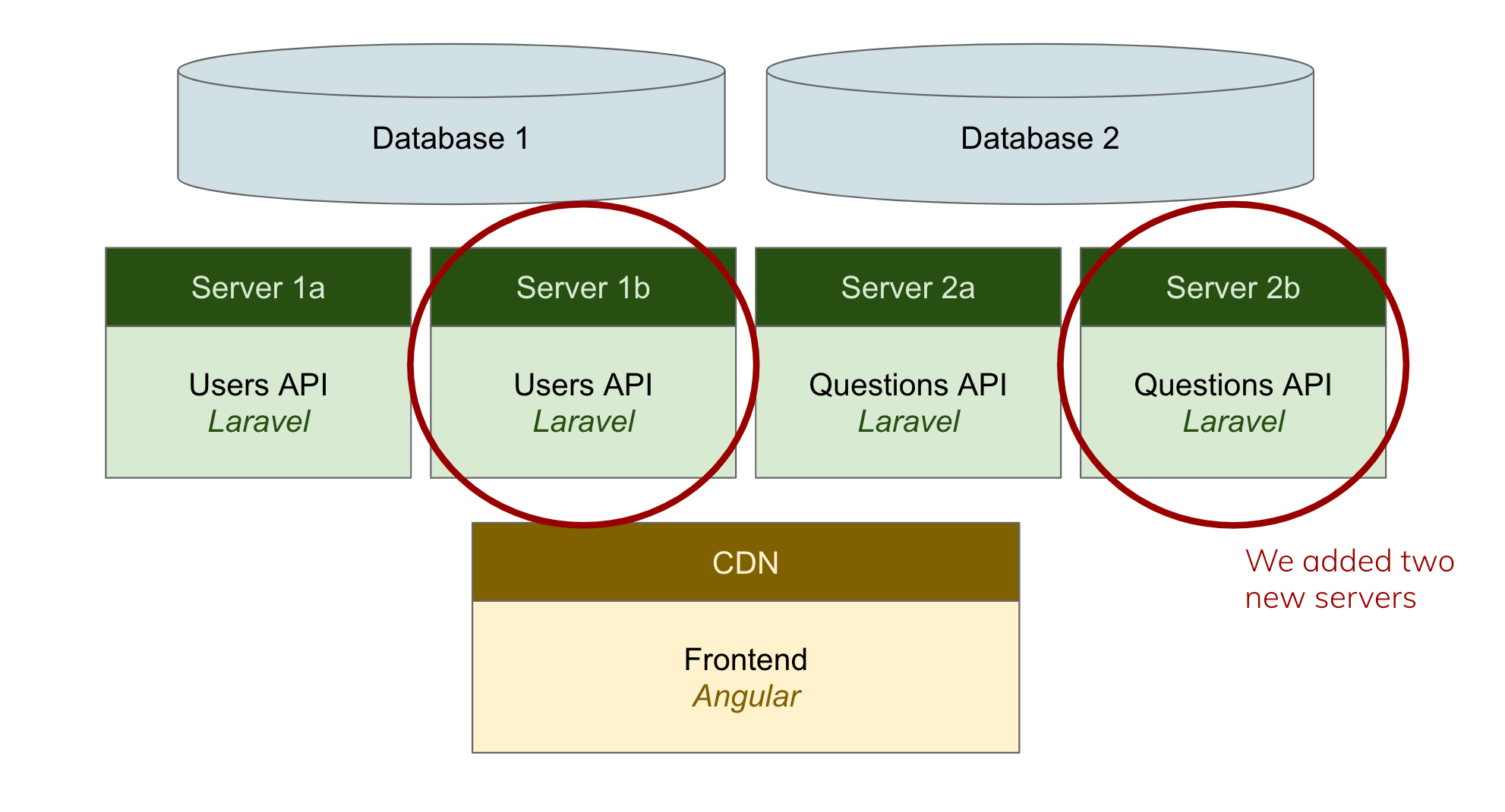

In 2015, I was leading a team of engineers building a web application for college students. Because deals with new schools were closed in May, we had three months to get ready for the surge of traffic every August.

We only had a few thousand users during our first year, so nobody was worried about scaling. We built the app in PHP with an Angular frontend and a MySQL database.

Our application architecture at the end of Year 1


As we prepared to triple our user base in Year 2, we started wondering how well our application would scale. I started learning about all the latest tools and hired an experienced DevOps engineer, and together we ramped up a load testing plan.

After two and a half months of messing around with Docker, Azure Service Fabric and a handful of cutting-edge tools, we realized that we weren’t going to hit our August deadline. We backed up and thought through the problem again. I started asking some of my mentors for advice, and I remember a moment when one of them called me out:

“You don’t need all that complicated tooling!” he told me. “Just throw another server on it.”

Why is New Technology so Appealing?

Like many engineers, I leaped at the opportunity to take advantage of all the coolest new tools. After months of wasted effort, I finally realized that the solution was simple, and we already had the tools we needed. We horizontally scaled out our APIs and vertically scaled our database, which took about two weeks.

Our application architecture at the beginning of Year 2
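The "just throw another server on it" advice can be sanity-checked with back-of-the-envelope arithmetic. The sketch below is only an illustration: the function name `api_servers_needed`, the 50% utilization target and every traffic figure are assumptions made up for the example, not measurements from the application described here.

```python
import math


def api_servers_needed(peak_rps: float, rps_per_server: float,
                       headroom: float = 0.5) -> int:
    """Estimate how many identical API servers a peak load requires.

    headroom is the fraction of each server's measured capacity we plan
    to use at peak (0.5 means run each server at 50% of its limit).
    """
    if peak_rps < 0 or rps_per_server <= 0 or not 0 < headroom <= 1:
        raise ValueError("inputs must be positive and headroom in (0, 1]")
    usable_rps = rps_per_server * headroom
    # Always keep at least one server, even with no traffic.
    return max(1, math.ceil(peak_rps / usable_rps))


# Hypothetical figures: tripling peak traffic from 40 to 120 requests/second
# on servers that each handle about 100 requests/second under load testing.
year_1 = api_servers_needed(peak_rps=40, rps_per_server=100)
year_2 = api_servers_needed(peak_rps=120, rps_per_server=100)
print(year_1, year_2)
```

With numbers in this range, tripling traffic means going from one API server to a handful behind a load balancer, which is a far smaller change than adopting a new orchestration platform. Real planning would use per-server throughput from the load tests rather than guessed values.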


This was obviously the right choice in hindsight, but why wasn’t it obvious at the beginning? Why are even experienced software engineers drawn to shiny new technology like moths to a flame?

1. New Technology Promises to Solve Old Problems

Managing lots of servers is hard. It’s always been hard. It eventually got easier when we moved to the cloud, and now Kubernetes promises to continue making it easier. New technology promises to solve problems faster, more efficiently or more flexibly than all the “boring old stuff.” If you only read the marketing material, you might think that it doesn’t even have any tradeoffs.

2. We Get Attention for Using the “Latest and Greatest”

All the articles I read in 2015 talked about how great Docker was going to be. They insisted that it would replace VPSes in just a few years. Companies that were early adopters got a lot of positive press for doing so. I wanted that kind of attention too.

3. Job Candidates Flock to New Technology

Unfortunately, the Hacker News–fueled hype cycle makes engineers think they have to be working in the latest technology to stay relevant. This seems especially true for entry-level developers; I can’t tell you how many recent bootcamp graduates have asked me if we use X or Y new framework. I even had one try to sell me on moving our entire relational database to a blockchain.

4. We Want to Be Cool Too

“It’s fun to dive in and ‘modernize all the things’—and certainly you can learn a lot in the process (perhaps at the expense of the business).” - David LeBlanc

I didn’t care about padding my resume, but I remember thinking, “This will make a great story for a conference talk.” I cringe at that statement now because trying and failing to implement Docker at an early-stage startup in 2015 is probably my biggest management failure to date.

The Risk in Adopting Too Early

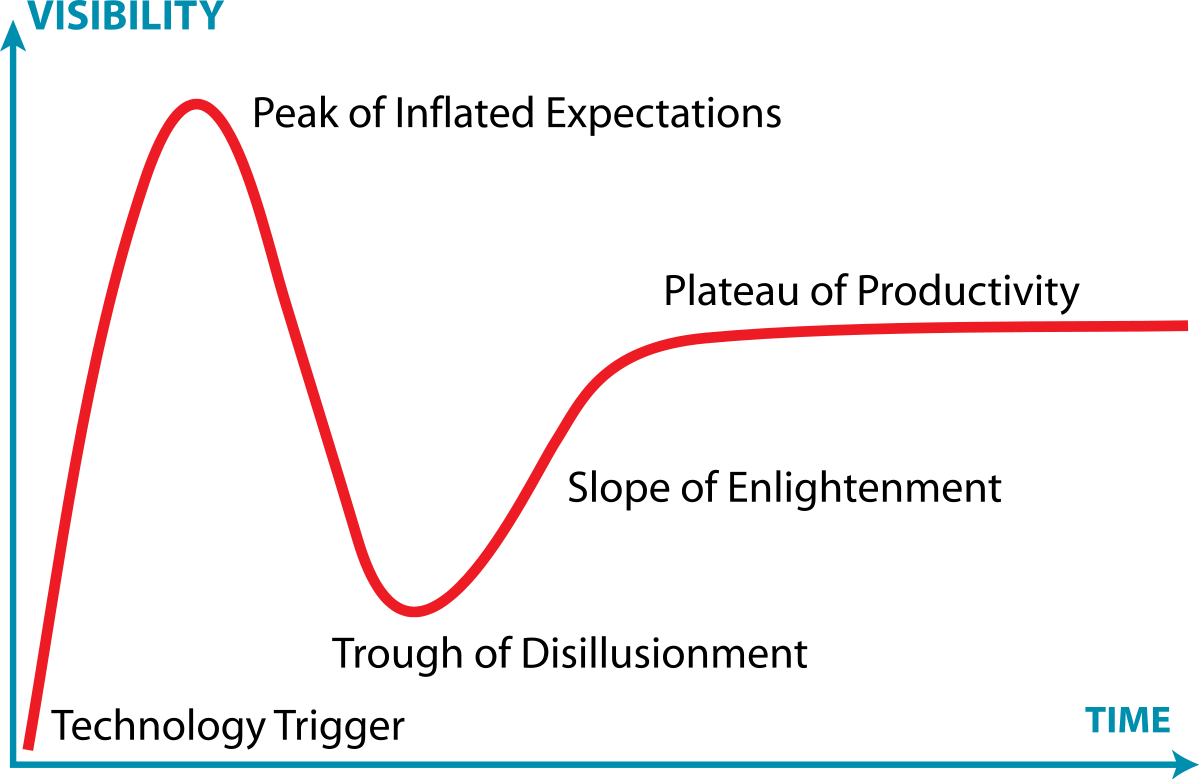

A couple of years ago, I discovered the technology hype cycle:

“The hype cycle postulates a period of marketing hyperbole and adoption of a great new thing—until reality asserts itself and makes it obvious that the new thing is not quite as magical as advertised. The ‘new thing’ then falls out of favor and is then discarded entirely or languishes until a sufficient knowledge base for its successful use evolves.” - Dick Dowdell

Technology hype cycle


Many engineers make the mistake of adopting technology during its initial peak, when it’s trendiest and most talked about. The problem is that immature technology has new, unknown failure modes that established solutions don’t.

Software engineering teams will waste a lot of time hunting down subtle bugs, finding undocumented edge cases and rewriting code to fit with the new technology. That’s what happened to us when we tried to adopt Docker six years ago. We didn’t have the resources to fight through all the undocumented features and options, plus the API kept changing with each version.

Even if these problems don’t dissuade you, early adopters run the risk that the company behind the technology shuts down entirely. I remember several friends who jumped on RethinkDB early, only to be disappointed when the company behind it shut down in 2016. While it has come back as a community-maintained project, it never feels good to have your application’s database in limbo.

Technology Adoption Tips

So if new technology adds so much unnecessary risk, why aren’t we all running a 1990s version of Java? How can we keep from falling so far behind that there’s no upgrade path? When we start a new project, shouldn’t we use the latest tools?

As with any interesting problem, the answer is, “It depends.”

I’ve started to develop some rules of thumb for adopting new technology on software engineering teams. Feel free to take these and modify them to your organization or build up your own set of rules.

1. Give People Time to Experiment

I’m a firm believer in giving employees some time to learn new things on the job. This gives them a creative outlet, keeps them engaged and allows you to spike things that would never get prioritized by the business. If an engineer spends their learning time proving that a new tool can be used in our application, I’ll give it strong consideration.

“New technology needs to be validated before used on a product … You have to produce results. If you don’t, you set a product on the path to the death.” - Andrew Orsich

2. Have a Default Tech Stack

One of the crimes of microservices was that they encouraged companies to build different parts of their application in different programming languages. While experienced engineers may enjoy switching languages every week, it adds cognitive overhead and makes it harder to onboard new developers. You will also see silos appear based on which programmers want to work in which languages. Pick one tech stack as your default and only broaden out when you truly need to.

3. Keep Your Core Reliable

When you choose to experiment with new technology, consider limiting your bets to less critical features first. It’s hard to adopt a new, cutting-edge database when you built a platform based on SQL, but it’s not that hard to try out a new UI library on a temporary marketing site. Once you’ve proven the new technology in less mission-critical roles, you might decide to adopt it across your core application.

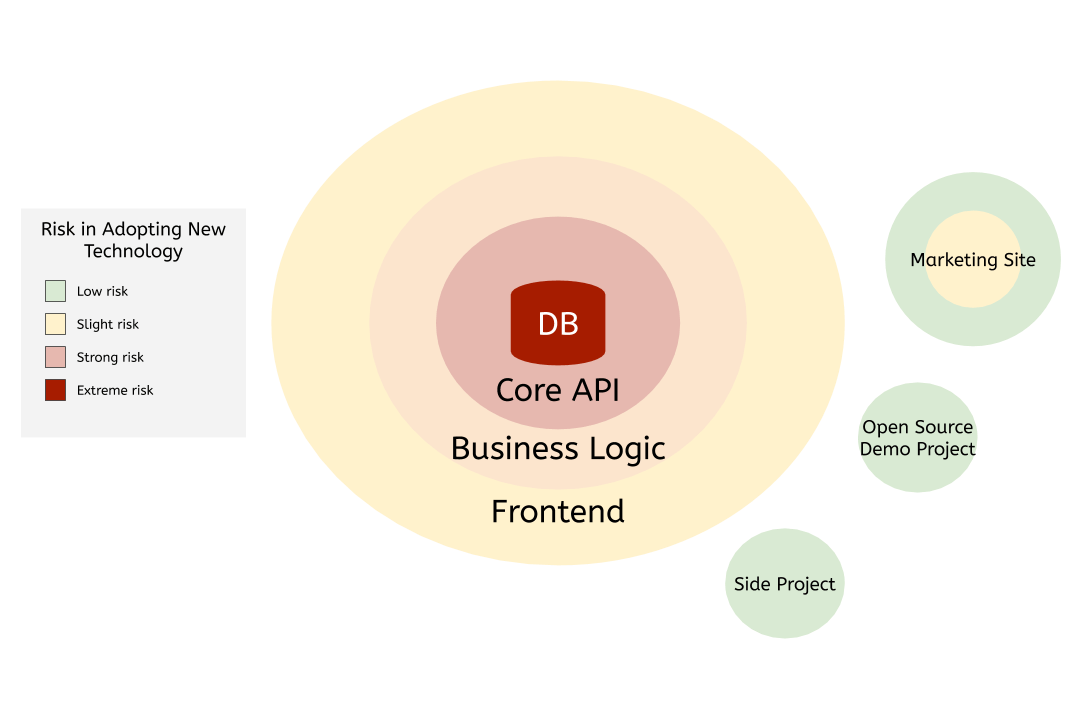

Risk level in adopting new technology throughout your application


4. Remember the Business Goals

The best engineers I’ve worked with always kept the “why” in mind. They cut corners on parts of the application that were of low business value and spent weeks perfecting core data models. As a manager or team lead, you must remember why the business needs this technology. If some new tool enters the market, you’ll have to decide how much business value it adds and what the cost of adoption might be.

Conclusion

New technology isn’t bad. I love experimenting with new frameworks and programming languages, but as a leader, you have to balance your curiosity with your business’s goals. It’s easy to get caught up in the hype surrounding unproven new tools, so you should develop standards that help you decide when to try something new. If you’ve built a set of rules for your organization, I’d love to hear about them. Reach out on Twitter to continue the conversation.

Karl Hughes

Karl Hughes is a software engineer, startup CTO, and writer. He's the founder of Draft.dev.