The Future of Hosting with Edge Computing


“Edge computing” is running software closer to the end user. Let’s look at some reasons to consider edge hosting, some use cases for it and some of the challenges involved.

As the cost of computing power drops and more powerful devices are running all around us, the idea of edge computing has gotten a lot of attention. “Edge computing” is a blanket term for running software closer to the end user. Browser-based web applications, mobile apps, IoT devices and content delivery networks are all forms of edge computing.

The question of where we should put our computing power has swung back and forth for decades. In the days of mainframe computing, terminals were generally simple input/output devices that relied on the mainframe to process their requests. By the 1980s, personal computers were widely available, so centralized mainframes started to lose favor except in particular applications.

Low-powered terminals never went away entirely, and faster local networks brought them back into fashion in the 1990s as “thin clients.” By the early 2000s, the internet had pushed more computing power back to central servers.

Today, many web applications rely on a single central server and database to relay data to users, but as the demand for data and faster response times increases, the pendulum is swinging back toward edge computing.

Edge Hosting and CDNs

In the past ten years, many companies with large web applications have moved to decentralized hosting. Using load balancers, read replicas, and content delivery networks (CDNs), companies are now able to put their servers closer to end users.

As CDNs matured, developers started to wonder if this distributed infrastructure could be repurposed to host generalized application workloads. Several companies are now offering edge-hosting services built on CDN-like infrastructure, including Fly, Cloudflare, and Amazon.

Why Host on the Edge?

Migrating to a new hosting model is no small feat, but edge hosting provides several advantages over the traditional central server model.

Latency

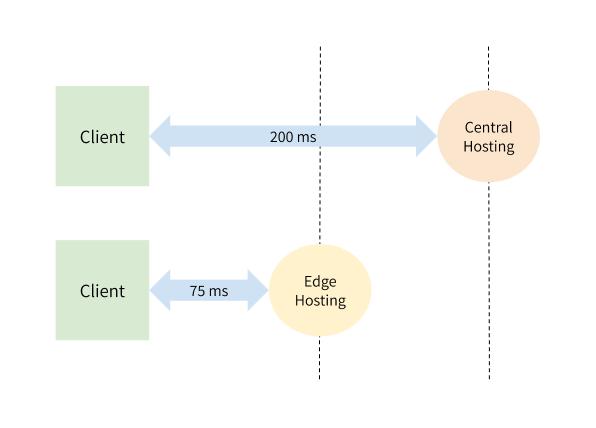

While developers can spend a lot of time optimizing their code’s performance, physics ultimately limits the speed at which data can travel across a network. “By moving computing workloads closer to where an application’s users are we can, effectively, overcome the limitations imposed by the speed of light,” Matthew Prince, the CEO of Cloudflare, pointed out in a blog post earlier this year.

If you need users to interact with your servers but want to decrease latency, edge hosting is one of the best options available. For users who are far from your central data center, an edge-hosted application will almost always respond faster than one served from a single region.
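To see why distance matters so much, here’s a back-of-the-envelope calculation of the floor that physics puts on round-trip time. It’s a minimal sketch that assumes signals in optical fiber travel at roughly 200,000 km/s along a straight path; the 6,000 km and 100 km distances are hypothetical, and real routing, queuing, and connection handshakes only add to these numbers.

```python
# Minimal sketch: the lower bound on round-trip time from propagation alone.
# Assumption: light in optical fiber travels at roughly 200,000 km/s
# (about two-thirds of its speed in a vacuum) along a straight-line path.
FIBER_SPEED_KM_PER_S = 200_000


def min_rtt_ms(distance_km: float) -> float:
    """Return the theoretical minimum round-trip time in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


# Hypothetical distances: a user far from a central region vs. a nearby edge node.
central = min_rtt_ms(6_000)
edge = min_rtt_ms(100)
print(f"central: {central:.1f} ms, edge: {edge:.1f} ms")
```

That works out to about 60 ms versus 1 ms before your code does any work at all. A new HTTPS connection needs several round trips before the first byte of the response arrives, so that floor is paid more than once per request.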

Security

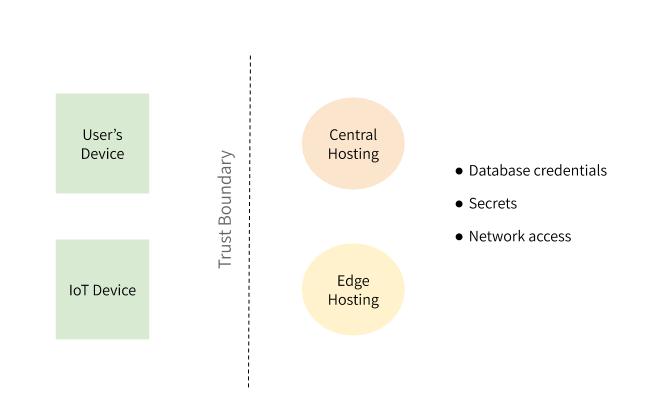

If your goal is to minimize latency, then pushing all the processing to the client might seem like the logical choice. The problem is that once you’ve passed data to an end-user, you have no control over how they use it.

“There’s a trust boundary here,” says Kurt Mackey, CEO of Fly. “Edge hosting is on the side of the boundary that you trust with your secrets and your shared state, and your IoT devices and users are on the other side of this trust boundary.”

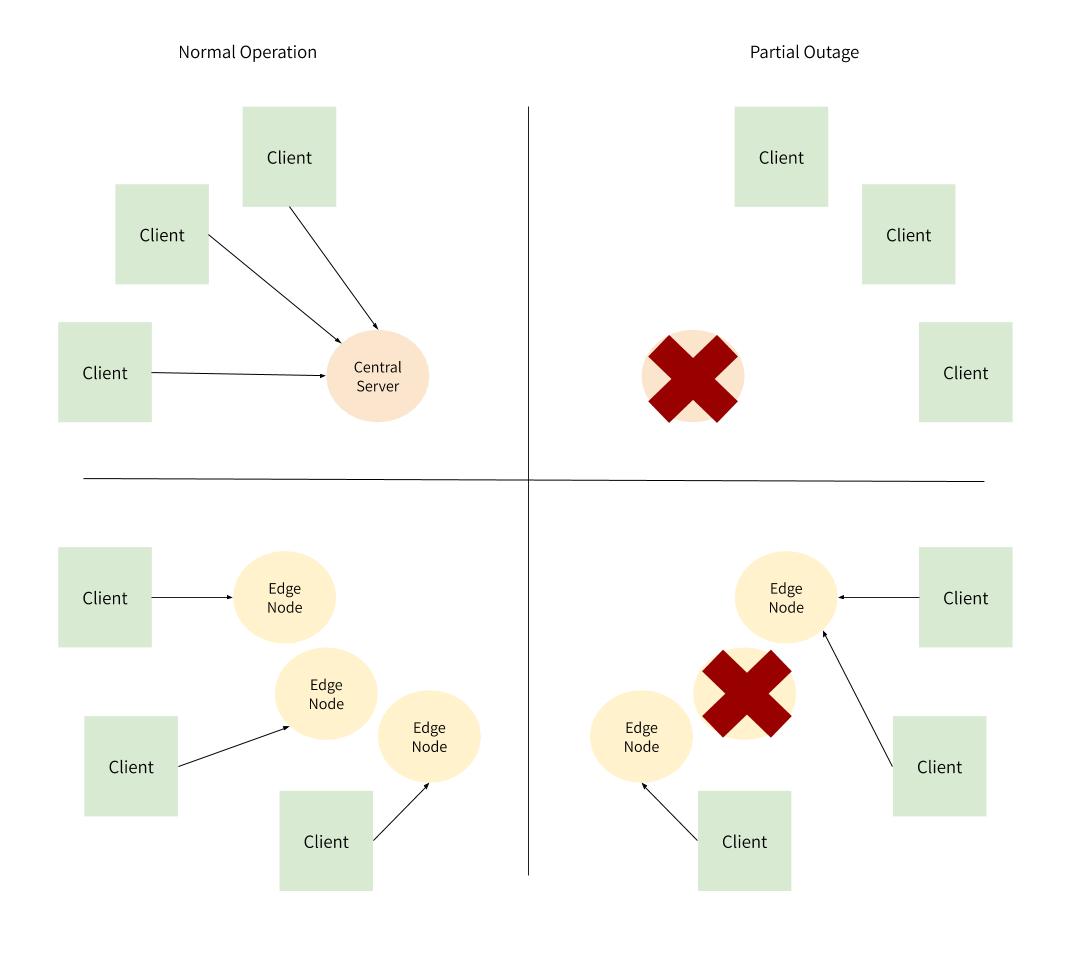
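One way to picture that trust boundary: the edge node holds a secret and signs any data it hands to the client, so it can later detect whether the client altered that data. This is a minimal sketch using Python’s standard `hmac` module; the secret and the token format are hypothetical placeholders.

```python
import hashlib
import hmac

# Hypothetical secret that lives only on edge nodes (the trusted side).
# In practice it would come from a secrets store, never from source code.
EDGE_SECRET = b"replace-with-a-real-secret"


def sign(payload: bytes) -> str:
    """Edge side: compute an HMAC-SHA256 tag for data sent to the client."""
    return hmac.new(EDGE_SECRET, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, signature: str) -> bool:
    """Edge side: check that a payload returned by the client is unmodified."""
    expected = sign(payload)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, signature)


token = b'{"user_id": 42, "role": "viewer"}'
sig = sign(token)
print(verify(token, sig))                                 # True
print(verify(b'{"user_id": 42, "role": "admin"}', sig))  # False
```

The client can read the token but can’t forge a valid signature for a modified one, because the secret never crosses to its side of the boundary.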

Redundancy

Finally, edge-hosted applications can provide a level of resiliency not possible in single-node applications. While the internet has gotten pretty reliable, it’s impossible to control for every variable. In 2018, the web application I was running was taken down when lightning struck one of Microsoft’s data centers. The only part of our infrastructure that worked was the globally distributed CDN.
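A distributed deployment only helps if traffic actually moves elsewhere when one location fails. Here’s a minimal failover sketch; the region names and the `fake_fetch` function are hypothetical stand-ins for real network calls.

```python
from typing import Callable, Sequence


def fetch_with_failover(regions: Sequence[str], fetch: Callable[[str], str]) -> str:
    """Try each region in order of preference and return the first success."""
    errors = []
    for region in regions:
        try:
            return fetch(region)
        except ConnectionError as exc:  # a network failure means "try the next node"
            errors.append(f"{region}: {exc}")
    raise ConnectionError("all regions failed: " + "; ".join(errors))


# Hypothetical fetch: the preferred region is down, the next one answers.
def fake_fetch(region: str) -> str:
    if region == "us-south":
        raise ConnectionError("data center offline")
    return f"response from {region}"


print(fetch_with_failover(["us-south", "us-east", "eu-west"], fake_fetch))
```

Managed edge platforms may handle this rerouting at the network layer instead, but the idea is the same: an ordered list of locations and a rule for when to give up on one.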

Use Cases for Edge Hosting

While increased speed, security, and redundancy benefit most web applications, some applications make an especially compelling case for edge computing.

CDNs, which serve static assets from the node closest to the user, have been used by online publishers and ecommerce companies for over two decades. These industries are driven by speed because every second they shave off their response time means more eyeballs and fewer abandoned shopping carts.

As more companies embrace remote work, the demand for peer-to-peer video, audio and text communication tools has been increasing. These applications need to reduce latency, so they use edge nodes to connect users by the closest possible path and make the experience better. The online gaming, sports broadcasting and gambling industries also use edge hosting to improve delivery and create a seamless experience for users.
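Connecting users by the closest path starts with picking a nearby node. The sketch below uses great-circle distance as a rough proxy. The node list and coordinates are hypothetical, and production networks typically rely on anycast routing or measured latency rather than raw geography.

```python
import math

# Hypothetical edge locations: name -> (latitude, longitude) in degrees.
EDGE_NODES = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}


def haversine_km(a, b):
    """Great-circle distance between two (lat, lon) points, in kilometers."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_node(user):
    """Return the name of the edge node closest to the user's coordinates."""
    return min(EDGE_NODES, key=lambda name: haversine_km(user, EDGE_NODES[name]))


print(nearest_node((48.86, 2.35)))  # a user in Paris -> frankfurt
```

For a video call, each participant would connect to their own nearest node, and the nodes would relay traffic between themselves.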

Speed may be the obvious reason to adopt edge hosting, but it’s not the only one. If you have to deal with any geography-specific compliance issues, you’ll appreciate the ability to keep your data in the same region as your user.

“I predict the real killer feature of edge computing over the next three years will have to do with the relatively unsexy but foundationally important: regulatory compliance,” says Matthew Prince. Edge hosting will allow you to segment traffic such that European users’ data is kept in European data centers and US-based traffic stays in the US.
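In code, that kind of segmentation can be as simple as a policy lookup before any data is written. This is a minimal sketch that assumes the user’s country code is already known (for example, from a geolocation lookup); the region names and the short country list are hypothetical, and a real policy would come from your legal requirements rather than a hard-coded table.

```python
# Hypothetical, deliberately incomplete list of EU country codes.
EU_COUNTRIES = {"DE", "FR", "IE", "NL", "ES", "IT"}

# Hypothetical data center names for each jurisdiction.
REGION_FOR_JURISDICTION = {
    "EU": "eu-central",
    "US": "us-east",
}


def storage_region(country_code: str) -> str:
    """Pick where a user's data may be stored, based on their country."""
    code = country_code.upper()
    if code in EU_COUNTRIES:
        return REGION_FOR_JURISDICTION["EU"]
    if code == "US":
        return REGION_FOR_JURISDICTION["US"]
    # Fail closed: refuse to guess rather than store data somewhere non-compliant.
    raise ValueError(f"no storage policy defined for {code!r}")


print(storage_region("fr"))  # eu-central
```

Failing closed for unknown countries is a design choice: it surfaces gaps in the policy instead of silently defaulting to a region that may not be allowed.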

Microservices can also be a good fit for edge hosting. API gateways like Kong can be deployed to the edge to improve performance and security for your microservices. Similarly, data transformation operations are often a good fit for edge hosting.
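As an example of a transformation that suits the edge, the sketch below trims an upstream JSON response down to the fields a client needs, so less data crosses the last mile. It’s plain Python, not how Kong or any other gateway is configured; the field names and payload are hypothetical.

```python
import json

# Hypothetical list of fields a mobile client actually uses.
MOBILE_FIELDS = ("id", "name", "price")


def slim_response(upstream_body: str, fields=MOBILE_FIELDS) -> str:
    """Drop fields the client won't use before the response leaves the edge."""
    items = json.loads(upstream_body)
    slimmed = [{k: item[k] for k in fields if k in item} for item in items]
    # Compact separators remove the whitespace json.dumps adds by default.
    return json.dumps(slimmed, separators=(",", ":"))


upstream = json.dumps([
    {"id": 1, "name": "Lamp", "price": 25, "warehouse_notes": "aisle 4", "supplier_id": 991},
])
print(slim_response(upstream))
```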

Finally, the edge might be the new home for machine learning. Dinesh Chandrasekhar, Head of Product Marketing at Cloudera, points out that, “Powerful computing devices are coming to the edge, all of which come equipped with GPUs that pave the way for ML and AI to move close to the edge.”

Kurt Mackey has seen the same trend when talking to Fly’s customers: “Someone came to me asking if they could run TensorFlow on the edge because they wanted to run fraud and bot detection.” Processing data closer to users can improve response times and decrease bandwidth requirements for data-intensive applications.

Challenges in Adopting Edge Hosting

Edge hosting sounds great, but it’s not a panacea. Companies that are ready to adopt it will still have to solve several problems that have long been settled in traditional single-server hosting models. Some edge-hosting providers may offer solutions to these challenges, but you’ll have to compare the options to determine which is best for your use case.

Application State

Almost every web application needs some way to store data, and those hosted on the edge are no exception. “It’s interesting how quickly developers realize they need databases that also run globally,” says Kurt Mackey. “I’ve been shocked by how much people ask for that.”

Distributed databases are improving, but they’re still relatively new and often have limitations that developers need to be prepared for. CockroachDB and YugabyteDB are two SQL-compatible databases designed to work in a distributed environment.

Depending on your application, you might also be able to shard your data or use read-only replicas to access data faster without making as many calls to your central data store.
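A common pattern with read replicas is to send writes to a single primary and reads to whichever replica is closest. Here’s a minimal sketch of that routing decision; the `Router` class, region names, and connection strings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Router:
    """Hypothetical router: writes go to one primary, reads to a local replica."""
    primary: str
    replicas: dict  # edge region -> replica connection string

    def dsn_for(self, region: str, is_write: bool) -> str:
        if is_write:
            return self.primary  # all writes funnel to a single source of truth
        # Fall back to the primary if this region has no replica yet.
        return self.replicas.get(region, self.primary)


router = Router(
    primary="postgres://primary.us-east.internal/app",
    replicas={"eu-west": "postgres://replica.eu-west.internal/app"},
)
print(router.dsn_for("eu-west", is_write=False))  # local replica
print(router.dsn_for("eu-west", is_write=True))   # primary
```

Replicas typically lag the primary slightly, so a user who reads immediately after writing may not see their own change. Routing that user’s reads to the primary for a short window is one common workaround.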

Logging, Visibility, and Metrics

Tracing requests through a globally distributed network also provides a unique set of challenges. “Companies can not have a dedicated admin to monitor and service each and every Edge location,” says Roopak Parikh, CTO of Platform9. “These operator limitations … mandate that Edge applications be not just very low on computing footprint but also on technical IT overhead.”

Kurt Mackey says that visibility is one reason people choose Fly.io over custom-built edge-hosting infrastructure. “One of the hard parts about running a global app is visibility, metrics, and understanding what’s happening,” he says. “That’s the stuff that’s really lacking when you roll your own solution.”
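If you do roll your own, one baseline practice is to tag every log line with the node’s region and a trace ID that travels with the request, so a central log store can stitch together a single request’s path across locations. Standards such as W3C Trace Context define a header for carrying that ID. This is a minimal sketch; the `NODE_REGION` value and field names are hypothetical.

```python
import json
import time
import uuid

# Hypothetical: each edge node learns its own region name at startup.
NODE_REGION = "ams"


def log_event(message, trace_id=None, **fields):
    """Emit one JSON log line tagged with this node's region and a trace ID."""
    record = {
        "ts": time.time(),
        "region": NODE_REGION,
        # Reuse the incoming trace ID if there is one, otherwise start a new trace.
        "trace_id": trace_id or uuid.uuid4().hex,
        "msg": message,
        **fields,
    }
    line = json.dumps(record)
    print(line)
    return line


trace = uuid.uuid4().hex
log_event("cache miss", trace_id=trace, path="/products")
log_event("origin fetch", trace_id=trace, status=200)
```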

Networking

While network security and access are always a central concern for system administrators, they become especially challenging in an edge-hosted environment. With dozens (possibly hundreds) of nodes, the rules about internal and external network access can get messy. Some edge-hosting providers include solutions for this, but if you’ve created your own network, it could take a lot of effort to maintain.
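One way to keep those rules manageable is to express them once, as code, and run the same check on every node. This minimal sketch uses Python’s standard `ipaddress` module; the internal ranges and the `/admin` rule are hypothetical, and the source address should come from the platform’s connection info rather than a client-supplied header.

```python
import ipaddress

# Hypothetical private ranges used for node-to-node traffic.
INTERNAL_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("fd00::/8"),
]


def is_internal(source_ip: str) -> bool:
    """True if the address falls inside one of the internal ranges."""
    addr = ipaddress.ip_address(source_ip)
    # An IPv4 address is never "in" an IPv6 network (and vice versa).
    return any(addr in net for net in INTERNAL_NETWORKS)


def allow(path: str, source_ip: str) -> bool:
    """Admin endpoints are reachable only from the internal network."""
    if path.startswith("/admin"):
        return is_internal(source_ip)
    return True


print(allow("/admin/users", "10.1.2.3"))     # True
print(allow("/admin/users", "203.0.113.5"))  # False
```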

Cold Starts

Some edge-hosting platforms require “warm-up” time before your application can handle its first request. Cold starts are a problem on most serverless hosting platforms, but they’re even worse when your serverless platform is distributed across a wide range of nodes.

Matthew Prince points out that, “edge computing platforms can actually make the cold start problem worse because they spread the computing workload across more servers in more locations. As a result, it’s less likely that code will be ‘warm’ on any particular server when a request arrives.”

If your application is continuously serving traffic across all its nodes, you might be able to mitigate this challenge, but it’s worth noting.
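Prince’s point can be made concrete with a simple model. Assume requests arrive at random (a Poisson process), are split evenly across nodes, and that an instance stays warm for a fixed time after each request; then the chance a request lands on a warm instance falls as the same traffic is spread over more nodes. The traffic rate and keep-warm window below are made-up numbers, and real platforms have their own eviction rules.

```python
import math


def warm_probability(total_rps: float, nodes: int, keep_warm_s: float) -> float:
    """Chance that a request finds an already-warm instance on its node.

    Assumes Poisson arrivals split evenly across nodes, and that an instance
    stays warm for keep_warm_s seconds after each request it serves.
    """
    per_node_rps = total_rps / nodes
    # With Poisson arrivals, the gap since the node's previous request is
    # exponentially distributed, so P(gap < keep_warm_s) = 1 - exp(-rate * t).
    return 1 - math.exp(-per_node_rps * keep_warm_s)


for nodes in (1, 10, 200):
    p = warm_probability(total_rps=1.0, nodes=nodes, keep_warm_s=60)
    print(f"{nodes:>3} nodes: {p:.1%} of requests hit a warm instance")
```

With these hypothetical numbers, the warm share drops from essentially 100% on one node to roughly 26% across 200 nodes. The same formula shows why steady traffic helps: raising `total_rps` pushes every node back toward warm.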

Cost

Globally distributed hosting means more servers to pay for and maintain. While each server may be smaller, edge hosting can be costly if you reserve dedicated resources in every location. Some edge-hosting providers may lower your cost by giving you shared resources or spinning down idle nodes, but this comes with performance tradeoffs, such as the cold starts described above. You’ll have to decide how much the improved latency and security are worth for your application.

Maintaining Consistency

Keeping dozens of servers around the world in sync can be challenging, even with a good process and automated tools.

“It’s almost impossible to manage all these deployments if they don’t share a secure control plane via automation, management and orchestration,” says Nick Barcet, Senior Director of Technology Strategy at Red Hat. “You’ll want to manage your entire infrastructure in the same way and create an environment where you can consistently develop an application once and deploy it anywhere.”
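A small piece of what that automation does is detect drift: comparing the configuration each node reports against the one you intended to deploy. This is a minimal sketch, not Red Hat’s or any other vendor’s tooling; the node names and config keys are hypothetical.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """Stable hash of a config, so key order doesn't change the result."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def find_drift(desired: dict, reported: dict) -> list:
    """Return the nodes whose running config differs from the desired one."""
    want = config_fingerprint(desired)
    return sorted(node for node, cfg in reported.items()
                  if config_fingerprint(cfg) != want)


desired = {"image": "app:1.4.2", "replicas": 2}
reported = {
    "fra": {"replicas": 2, "image": "app:1.4.2"},  # same content, different key order
    "sin": {"image": "app:1.4.1", "replicas": 2},  # stale deploy
}
print(find_drift(desired, reported))  # ['sin']
```

An orchestration layer would then redeploy to the drifted nodes, which is the “develop once and deploy anywhere” loop Barcet describes.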

Conclusion

Edge hosting opens up a world of new possibilities for distributed applications. Running code closer to your users can improve its performance and resiliency without sacrificing security. While some forms of edge hosting have been around for decades, the growing number of options available helps solve some of the problems that have prevented widespread adoption to date.

Whether you build your own network of edge nodes or use a managed provider, you’re likely to see more of this paradigm in the coming decade.

Karl Hughes

Karl Hughes is a software engineer, startup CTO, and writer. He's the founder of Draft.dev.