Progress Goes To Google I/O

This week, Progress is at Google I/O in the human form of Tara Manicsic and yours truly. Google I/O is a big conference that takes place at the Shoreline Amphitheatre in Mountain View. At my first I/O, back when it was held at the Moscone Center in San Francisco, Sergey Brin had skydivers wearing Google Glass jump out of an airship and land on the roof of the Moscone Center. The years since have been quite a bit more tame, but we’re holding out for something exciting this year.

We started our day hunting for bagels, which were in short supply. Once we found them, we briefly contemplated opening a black market, but then Tara decided she needed a band-aid, so we ditched our criminal plans and headed to medical.

After that, we made our way to our keynote seats and waited for the games to begin. It wasn’t long before they cued the incredibly loud countdown intro, and we were off.

Machine Learning, Machine Learning, Machine Learning

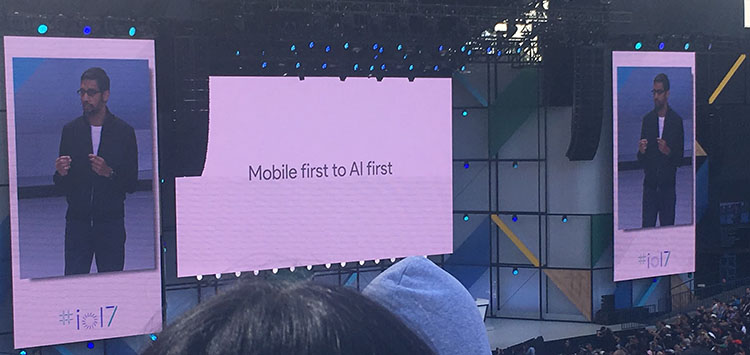

Machine Learning / AI occupied nearly the entire Microsoft Build keynote last week, and it ate up a significant portion of the Google I/O keynote as well. Google has begun to bake its AI into everything it makes. From Android to Gmail to Google Home, its Machine Learning investment is showing up in just about everything the company does. In fact, Sundar Pichai prominently displayed a slide declaring that Google is moving from a mobile-first company to an AI-first company.

Drink every time someone says "machine learning" #io17

— Andrew Betts (@triblondon) May 17, 2017

Google Home

Google has been hard at work improving Google Home. I didn’t realize this, but Google Home now recognizes individual voices, so it can give each person in a household a personalized experience. I wish Alexa could do this too. Right now my wife and I share an Alexa login, so when she adds things to her shopping list, she has to log in as me to see them.

Google Home now has a 4.7% error rate on natural language processing, down from its original 8%.

You can now make phone calls with Google Home, too. What’s really neat is that you can call any landline anywhere in the USA for free. I’m finally starting to see that Grand Central / Google Voice acquisition paying off!

Google Assistant

Vision is another area Google has been working on heavily, and there is plenty of Machine Learning to be found here too. Today they announced something called “Google Lens”, a feature that can take actions based on objects in the real world using Machine Learning (drink). The example they gave was using Google Lens to identify a flower in real time and get more information about it. Another example would be pointing your phone at a restaurant while standing outside and seeing the menu / Yelp reviews in real time.

Google Assistant is also getting far more conversational. Once you start a conversation about a topic, you don’t have to restate that context when you ask follow-up questions. For instance, you could use Google Translate to translate a menu written in another language. Let's say it pulls back a food item you have never heard of. Instead of having to say “What does [ITEM] look like?”, you can just say “What does it look like?”, and Google Assistant knows what “it” refers to.

Google’s AI Data Centers

Google talked a bit about the actual hardware behind its AI platform as well. Machine Learning (drink) is very computationally intensive, and it takes a lot of computing power to run. Google had already built something called “Tensor Processing Units”, or TPUs, which are much faster than traditional CPUs for machine learning workloads. Today they announced a newer generation of AI processing unit called “Cloud TPUs”. These are optimized for both training and inference, the two fundamental pieces of Machine Learning (drink).

Google Photos

Google Photos got a big spot in the keynote today. As of today, Google Photos can recognize people, events and emotions in photos. It can also pick out the best shot from a group of similar pictures, clean up grainy photos, and even remove unwanted obstructions from an image.

A big emphasis was put on sharing in Google Photos today, with several new ways to share photos announced. One of those was shared libraries. I thought Photos already had this feature, but apparently not. Photos can also share pictures with specific people and automatically save them to those people’s Google Photos accounts. This is nice, since the pictures I want are often on my wife’s phone, or vice versa. This service makes sure that the pictures we want to share are always available on either device.

Lastly, you can now order physical photo books from Google Photos. This feature has been in Apple Photos / iPhoto for some time. However, I find myself drawn more and more to Google Photos because of the unlimited storage and ease of sharing.

YouTube

Some new things are coming to YouTube, including 360-degree video in the living room and something I totally don’t understand called Super Chat. Basically, you pay to have your chat message highlighted and pinned to the top. It feels like a good way to destroy the authentic connection between stars and fans by making fans pay to chat with the creators, but I’m not really in the YouTube demographic anyway.

Superchat is the most distopian thing I've heard of this week.

And that's saying something. #IO17 pic.twitter.com/GSF986lcoU

— Robert McGregor (@ID_R_McGregor) May 17, 2017

VR And AR

VR is big this year, and now there is a big push on AR as well. Phones are being updated to support Daydream, Google’s VR platform for phones with an accompanying headset, and Google is releasing standalone headsets as well.

In case you’re wondering what Daydream even is, it’s kind of like Google Cardboard, except it has much higher performance with faster rendering times, and the new standalone headsets track movement in six degrees of freedom, so you can move forward, backward, and up and down in space. This is supposed to cut down on the nausea that Cardboard causes. That is good news, because I can hardly use Cardboard anymore without getting really motion sick.

Special thanks to JM Yujuico, who was sitting across from me while I was writing this post and was kind enough to explain Daydream to me. He works for a VR company called Vicarious, which you should definitely check out.

Augmented Reality (AR) can now help you find things at Lowe’s. This is especially exciting for me, considering I’ve spent at least two combined years of my life wandering through Lowe’s looking for various items. Why is it so hard to find someone who works there to help you? If Google could help me find a sales associate at a hardware store, that would be truly amazing.

Google Jobs And Closing

They closed the keynote talking about Google for Jobs. Similar to the way Google surfaces flight results, it can now surface job listings right in Google search. It does this by partnering with Monster, CareerBuilder, LinkedIn and others to pull their listings into the search results page.

There were a lot of other items in the keynote that we didn’t cover here. It’s impressive to see the emphasis on Machine Learning (drink) at these big tech keynotes. Clearly we have entered a new era of software. Progress also recently acquired DataRPM, which uses Machine Learning (yes, one more) in industrial environments to predict equipment failure and optimize maintenance. Stay tuned to see how Progress begins to weave this AI story into all of its products.

Machine Learning is definitely the future. Go ahead and drink again if you’ve got any drink left.

Burke Holland

Burke Holland is a web developer living in Nashville, TN and was the Director of Developer Relations at Progress. He enjoys working with and meeting developers who are building mobile apps with jQuery / HTML5 and loves to hack on social APIs. Burke worked for Progress as a Developer Advocate focusing on Kendo UI.