Next Level Report Engine Explained

In this blog post I will continue the series of articles dedicated to Telerik Reporting, a complete .NET embedded reporting tool for web and desktop applications. The purpose of the series is to provide a more technical view of the specifics of our product. This in-depth knowledge will benefit report creators who want to make sure they’re using the full potential of the reporting engine, achieving high performance and problem-free integration of Telerik Reporting in their applications.

Reporting Engine Overview

This article assumes you already have some experience with Telerik Reporting, but if you’re a first-time user or want to revisit the main points, I can recommend a few articles before continuing with this one. For a quick hands-on exercise on how to create a report in a few steps, I would suggest the QuickStart section in our documentation. For a more detailed look, you can continue with the excellent series of articles by Eric Rohler, dedicated to the Desktop and Web Report Designers, and with the previous post in our series, “Best Practices for Data Retrieval in Telerik Reporting,” where I talk about the right way to prepare and pass data to a Telerik Report.

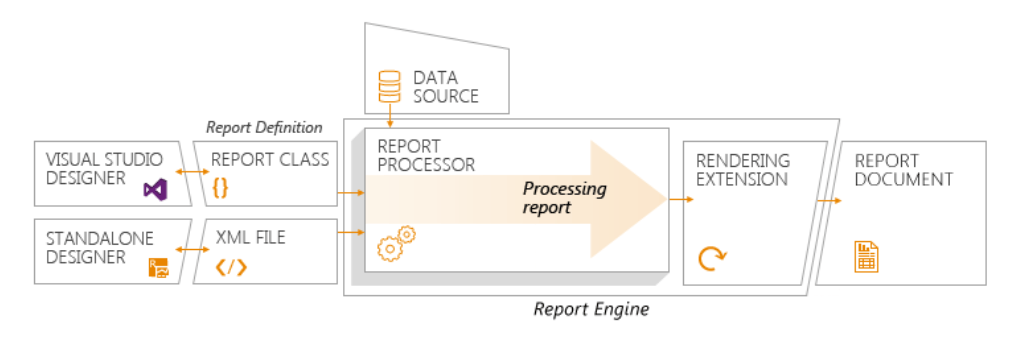

If you have already gone through the suggested reads, maybe you’ve noticed that they follow the first steps of the report lifecycle shown in the image: creating the report definition and feeding the data.

But how exactly does that report definition, created as a bunch of static controls and some rules in the report designer, go live and produce thousands of pages in a PDF or DOCX file? How does the data from the database get divided in groups and aggregated to calculate the SUM or COUNT expressions? When are the Bindings applied? These and other questions will be answered below.

Definition and Data

To illustrate the processing workflow better, I will use a very simple report definition that displays all the countries in the world, grouped by the first letter of their name. The report employs a WebServiceDataSource to fetch the data from a REST service using the URL https://restcountries.eu/rest/v2/all?fields=name. As the URL suggests, the JSON data returned from the remote REST service will contain only the name of each country. To have the data ordered alphabetically, I have added a sorting rule to the report. Performance-wise, it would have been better to get the data already ordered from the REST service, but since it lacks such an option, the sorting is done in the report.

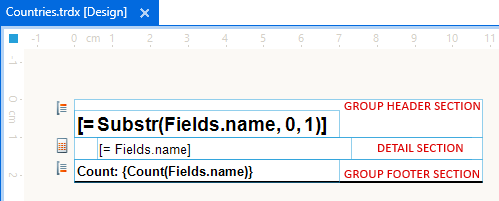

The report also has one report group with a grouping expression set to:

    = Substr(Fields.name, 0, 1)

The group footer contains a TextBox with an aggregate expression that displays the number of countries in each group:

    =Count: {Count(Fields.name)}

The overall look of the report on the designer surface is shown below:

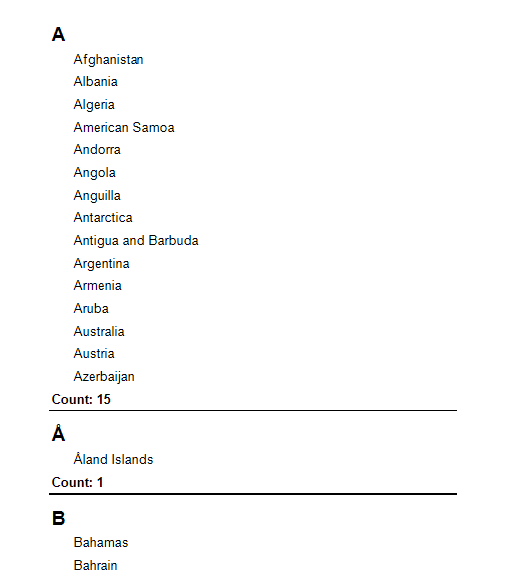

The previewed report shows the alphabetically ordered list of countries, grouped by their first letter:

You can download the report definition from here: Countries.trdp.

Processing the Report

The report items in the report definition are described in a hierarchical tree structure, where the root node is the report and its first-level descendants are the report sections. The report sections contain items that may have other descendants (like Panel), or represent leaf nodes (TextBox, Shape, PictureBox, etc.). The hierarchical structure also holds the additional rules like Styling, Conditional Formatting or Bindings that may be applied on each item.
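To make the tree structure more tangible, here is a minimal sketch of how the sample report's hierarchy could be modeled and traversed. The `DefinitionNode` and `DefinitionTree` types are hypothetical stand-ins invented for illustration. They are not the Telerik.Reporting classes, and the node names are only labels.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of the sample report; the node names are illustrative only.
var report = new DefinitionNode("Report",
    new DefinitionNode("GroupHeaderSection", new DefinitionNode("TextBox: first letter")),
    new DefinitionNode("DetailSection", new DefinitionNode("TextBox: country name")),
    new DefinitionNode("GroupFooterSection", new DefinitionNode("TextBox: count")));

foreach (string line in DefinitionTree.Describe(report))
    Console.WriteLine(line);

// A stand-in for a report item: a name plus its child items (none for leaf nodes).
sealed class DefinitionNode
{
    public string Name { get; }
    public IReadOnlyList<DefinitionNode> Children { get; }

    public DefinitionNode(string name, params DefinitionNode[] children)
    {
        Name = name;
        Children = children;
    }
}

static class DefinitionTree
{
    // Depth-first traversal that returns one indented line per node.
    public static IEnumerable<string> Describe(DefinitionNode node, int depth = 0)
    {
        yield return new string(' ', depth * 2) + node.Name;
        foreach (DefinitionNode child in node.Children)
            foreach (string line in Describe(child, depth + 1))
                yield return line;
    }
}
```

The same depth-first order (parent first, then its children) is the order in which the rest of this article walks through the report.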

Once we have configured the report definition, it’s time to process the report. Actually the processing stage and the subsequent rendering stage are closely related, and from the user’s point of view they are represented by a common operation. For example, when rendering a report programmatically using the ReportProcessor, we call the method RenderReport(), which initiates the two-stage operation of processing and rendering in the selected document format. The report viewers also call the same method internally to trigger the process of creating pages and showing them to the user. Still, we’ll examine the processing and rendering stages separately so you’ll have a better idea what happens under the hood.

Processing Overview

The type of each node in the report definition is represented by a class from the Telerik.Reporting namespace, e.g. Telerik.Reporting.Report, Telerik.Reporting.TextBox, etc. Every “definition” class has a corresponding class from the Telerik.Reporting.Processing namespace. These “processing” classes contain runtime information used during the processing and rendering stages. A single instance of a definition class—for example, a Telerik.Reporting.TextBox—may be represented by multiple processing instances of Telerik.Reporting.Processing.TextBox.

I understand this may sound a bit confusing, so let’s see how it works by example. We’ll track the processing of our sample report step-by-step.

The processing stage starts by reading the report definition hierarchy and consecutively traversing its descendant nodes. Since the root of the hierarchy is the Report node, the engine starts processing it by creating an instance of Telerik.Reporting.Processing.Report class—the so-called “processing” instance or processing item.

On the next step, the engine raises the ItemDataBinding event, which can be useful if we want to access the report items programmatically.

After that, the engine goes through the Conditional Formatting rules and Bindings (in that order) and applies them, if any. These rules are used to modify the “processing” instance before binding it to data and performing the subsequent operations.

Modifying the Current Processing Instance

In earlier versions of Telerik Reporting the handler of the ItemDataBinding event was the appropriate place to put code that alters the current processing item. But in the recent editions of our product, we strongly advise against programmatic modification and suggest using the Conditional Formatting and/or Bindings.

Using code to modify the processing item can be error-prone and is not guaranteed to work in every scenario. One of the most common mistakes is to use that event to change the “definition” instance from which the current processing item originates. This approach, although discouraged, worked in the past, but the recent engine optimizations do not allow such modifications to be applied, and this behavior still causes confusion among users who upgrade their projects to a recent version of our product. Declaring all the modification rules in the report definition fits most user scenarios and is a reliable way to change the report items at runtime.

It is also important to know the difference between Conditional Formatting rules and Bindings, although they may seem to serve the same purpose. Conditional Formatting rules change only the Style properties of the processing item, while Bindings can change almost any of the processing item’s properties.

For example, let’s have a TextBox whose font style needs to be changed to bold under some circumstances. Effectively this means that some of the TextBox’s processing instances will have their Style.Font.Bold set to True. We can do that with a Binding that has Property Path set to Style.Font.Bold and Expression set to True, and it would work. However, it would be far from optimal, because the Property Path parts will be resolved using reflection against the processing item’s properties. Also, the Expression value will be converted using the type converter inferred from the target property type. All these are expensive operations and might cause a serious performance hit. It would be much better to use a Conditional Formatting rule, which creates a new Style object where Font.Bold is set to True and adds it to the current TextBox’s style resolution chain with higher priority.
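To show why a Binding costs more than it seems, here is a rough sketch of the two steps mentioned above: resolving a property path by reflection and converting the value with a type converter. The `ToyTextBox`, `ToyStyle` and `ToyFont` classes and the `BindingSketch.Apply` helper are hypothetical. This is not the engine's actual code, only an illustration of the kind of work done per processing instance.

```csharp
using System;
using System.ComponentModel;
using System.Reflection;

var textBox = new ToyTextBox();

// Emulates a Binding with Property Path "Style.Font.Bold" and an expression result of "True".
BindingSketch.Apply(textBox, "Style.Font.Bold", "True");
Console.WriteLine(textBox.Style.Font.Bold);

// Toy stand-ins for a processing item; these are not the Telerik classes.
class ToyFont { public bool Bold { get; set; } }
class ToyStyle { public ToyFont Font { get; } = new ToyFont(); }
class ToyTextBox { public ToyStyle Style { get; } = new ToyStyle(); }

static class BindingSketch
{
    public static void Apply(object target, string propertyPath, object value)
    {
        string[] parts = propertyPath.Split('.');

        // Walk every part except the last one by reflection to reach the owning object.
        for (int i = 0; i < parts.Length - 1; i++)
        {
            PropertyInfo step = target.GetType().GetProperty(parts[i])
                ?? throw new ArgumentException("Unknown property: " + parts[i]);
            target = step.GetValue(target);
        }

        string leafName = parts[parts.Length - 1];
        PropertyInfo leaf = target.GetType().GetProperty(leafName)
            ?? throw new ArgumentException("Unknown property: " + leafName);

        // Convert the value with the converter inferred from the target property type.
        TypeConverter converter = TypeDescriptor.GetConverter(leaf.PropertyType);
        object converted = value is string text
            ? converter.ConvertFromInvariantString(text)
            : value;

        leaf.SetValue(target, converted);
    }
}
```

Running these lookups once is cheap. Running them for every detail row of a large data set is where the cost adds up.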

Binding to Data

Let’s get back to the processing of our report. After checking for modifications that need to be applied, the report can be bound to data. In our example, the report has its data source set to WebServiceDataSource, which points to a remote REST service. The engine calls the routine that downloads the data as a collection of simple data objects.

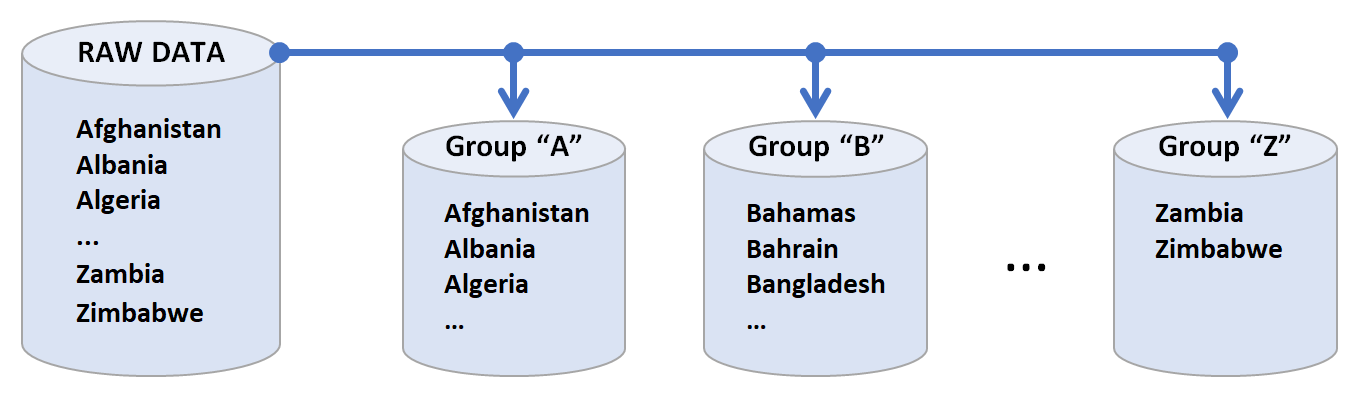

While iterating over that collection, the engine populates the multidimensional result set that will be persisted as the report’s data set. This multidimensional result set is a hierarchical structure that organizes the raw data into groups, following the model of the report groups in our report definition. The grouping expression is executed against each data object and the result determines in which group the object will go.
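The grouping step can be sketched in a few lines. This is a simplified, single-level illustration, assuming plain strings instead of data objects. The `GroupingSketch` class is hypothetical, and the real result set is a richer, multidimensional structure.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

string[] names = { "Bulgaria", "Austria", "Belgium", "Albania", "Canada" };

foreach (var group in GroupingSketch.GroupByFirstLetter(names))
    Console.WriteLine($"{group.Key}: {string.Join(", ", group.Value)} (Count: {group.Value.Count})");

static class GroupingSketch
{
    // Sorts the names, then puts each one in the group chosen by the
    // equivalent of the grouping expression Substr(Fields.name, 0, 1).
    public static SortedDictionary<string, List<string>> GroupByFirstLetter(IEnumerable<string> names)
    {
        var groups = new SortedDictionary<string, List<string>>(StringComparer.Ordinal);

        foreach (string name in names.OrderBy(n => n, StringComparer.Ordinal))
        {
            string key = name.Substring(0, 1);
            if (!groups.TryGetValue(key, out List<string> members))
            {
                members = new List<string>();
                groups[key] = members;
            }
            members.Add(name);
        }

        return groups;
    }
}
```

Each entry in the dictionary plays the role of one group instance: its key is the result of the grouping expression, and its list is the data subset the group's children will see.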

After populating the multidimensional result set, the report starts recursively processing its children. The processing routine begins iterating through the data members of the grouped data and assigns each portion of that grouped data as the data context of the current group instance. This means that the group and its children will only have access to this portion of the raw data. For the first group instance, the expressions used within the group scope will operate only on this data subset: the countries starting with “A”. After assigning the data context, the engine continues the recursive processing of the report group’s children, which in this scenario are three sections: group header, detail and group footer.

The processing of the report group header is performed the same way as processing any other item—initially the engine checks if there are any modifications defined in Conditional Formatting and Bindings. Since the group header is not a DataItem, i.e. it does not have its own DataSource, the engine continues the recursive processing with its children. Here the group header has only one child—a TextBox that shows the first letter of the first group member.

During the TextBox processing, the expression for its Value property is being evaluated against its data context. Since the TextBox is in the scope of the report group and the report group’s data context is currently set to Group “A”, the result will be a single “A” and this value will be stored in the Value property of the processing TextBox. With that, the processing of the TextBox concludes, the execution pops out of the recursive algorithm and gets back to the group header processing. Since the group header doesn’t have any more children, the processing algorithm continues with the detail section and its children: in our scenario—a single TextBox showing the current country name.

The processing operations of the detail section and its TextBox repeat for all 15 members in the current data context, so when Group “A” finishes its processing, the engine will have created 34 new processing items: the group header section and its TextBox, 15 detail sections and their corresponding TextBoxes, and finally a group footer section containing a single TextBox that shows the count of the members in this group.
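The arithmetic behind that number is easy to check with a small simulation. The sketch below only records a label for every processing item that would be created for one group. The `ProcessingSketch` class and the labels are hypothetical and do not reflect the engine's internal types.

```csharp
using System;
using System.Collections.Generic;

List<string> items = ProcessingSketch.ProcessGroup(memberCount: 15);
Console.WriteLine(items.Count);

static class ProcessingSketch
{
    // Returns one label per processing item created for a single group instance.
    public static List<string> ProcessGroup(int memberCount)
    {
        var items = new List<string>();

        // Group header: one section and its single TextBox.
        items.Add("GroupHeaderSection");
        items.Add("GroupHeaderSection/TextBox");

        // Detail: the same definition pair yields a new processing pair per data member.
        for (int i = 0; i < memberCount; i++)
        {
            items.Add($"DetailSection[{i}]");
            items.Add($"DetailSection[{i}]/TextBox");
        }

        // Group footer: one section and its single TextBox.
        items.Add("GroupFooterSection");
        items.Add("GroupFooterSection/TextBox");

        return items;
    }
}
```

For 15 members this gives 2 + 2 × 15 + 2 = 34 items, even though the definition contains only three sections and three TextBoxes.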

After completing each processing cycle, the engine raises the ItemDataBound event. Similarly to the ItemDataBinding event, this one can be used to observe the current state of the processing element (e.g., the already calculated Value of a processing TextBox), but modifying it is not recommended. The processing cycle repeats for every group instance in the grouped data until all the groups are processed.

Processing Performance

Once the processing stage is completed, the engine writes the elapsed time to the Trace output stream and commences the next stage—rendering.

But before looking at it, let me tell you how to use the measured processing time as a performance indicator.

As you can see, the processing stage doesn’t include a lot of CPU-intensive operations like drawing bitmaps or measuring text using the graphics subsystem. The most “expensive” operations here should be the data retrieval and the construction of the multidimensional result set. So if you notice that the processing stage takes a suspiciously long time to complete, it’s a clear indication that the report needs to be optimized.
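To read the measured time, the application has to listen to the Trace output. A minimal sketch using the standard System.Diagnostics API is shown below. The `TraceCapture` helper is hypothetical, and the message text is invented for the demo, since the exact wording of the engine's trace messages is not covered here.

```csharp
#define TRACE
using System;
using System.Diagnostics;
using System.IO;

// In a real application the delegate would wrap the report rendering call.
// The message below is an invented stand-in for what the engine writes.
string output = TraceCapture.Run(() => Trace.WriteLine("Processing done in 00:00:01.234"));
Console.Write(output);

static class TraceCapture
{
    // Runs the action and returns everything written to Trace while it ran.
    public static string Run(Action action)
    {
        var buffer = new StringWriter();
        var listener = new TextWriterTraceListener(buffer);
        Trace.Listeners.Add(listener);
        try
        {
            action();
            Trace.Flush();
        }
        finally
        {
            Trace.Listeners.Remove(listener);
        }
        return buffer.ToString();
    }
}
```

Writing to a file works the same way: pass a file path to the `TextWriterTraceListener` constructor instead of a `StringWriter`.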

A possible reason for poor performance is the use of too many Bindings on an item placed in the detail section. In this case the reflection code will be executed for each processing item instance created for every object in the data set. Another cause can be extremely large expressions, or user functions that get called for every data record and contain non-optimized code. Significant memory consumption, on the other hand, is expected during the processing stage, because the whole hierarchy of created processing items, the so-called “processing tree”, is kept in memory.

Rendering the Report

During the rendering operation, the engine calculates the positions of all the already-processed report items and writes their graphical representation (text, image, set of graphical primitives, etc.) to the output stream according to the selected rendering extension. Depending on that extension, the engine also chooses which paging algorithm to use. Some rendering extensions, like HTML5Interactive or IMAGEInteractive, use logical pages; others, like PDF and IMAGEPrintPreview, use physical ones; and some, like XLSX, use no paging at all. The main difference is that logical (also called interactive) paging produces pages that may be taller than the page height selected while designing the report. Logical paging uses so-called “soft page breaks”, where the report contents are optimized for previewing on screen. Physical paging splits the report contents so they fit exactly on the designed page size.

Setting up the Prerequisites

Let’s trace the process of rendering our report to a PDF file. The first important thing the engine determines is whether the expressions in the document include PageCount. If any of the page sections or watermarks contains this expression, the engine starts a pre-flight report rendering whose only goal is to count the pages in the report. This may seem oddly complicated, but here’s why it makes sense. When you add an expression like:

    Page {PageNumber}/{PageCount}

to a page section, the engine needs to know the total number of pages in order to evaluate it correctly. The only way of determining the page count is to perform a “lightweight” rendering that calculates the positions of all the items in the report and determines how many pages the report contents will span. It’s a “lightweight” rendering because it involves only measurement logic and doesn’t actually write any content to the output stream.
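The two-pass idea can be sketched as follows. This is a deliberately simplified model, assuming items that are stacked vertically and never split across pages. The `PageCountSketch` class is hypothetical and is not the engine's algorithm.

```csharp
using System;
using System.Collections.Generic;

double[] itemHeights = { 4, 4, 4, 4, 4 }; // item heights in cm
foreach (string footer in PageCountSketch.RenderFooters(itemHeights, pageHeight: 10))
    Console.WriteLine(footer);

static class PageCountSketch
{
    // Pass 1 (pre-flight): measurement only. Counts the pages without writing any output.
    public static int CountPages(IEnumerable<double> itemHeights, double pageHeight)
    {
        int pages = 1;
        double used = 0;
        foreach (double height in itemHeights)
        {
            // The item does not fit in the remaining space: start a new page.
            if (used > 0 && used + height > pageHeight)
            {
                pages++;
                used = 0;
            }
            used += height;
        }
        return pages;
    }

    // Pass 2: the footers can be evaluated only after the total is known.
    public static List<string> RenderFooters(IEnumerable<double> itemHeights, double pageHeight)
    {
        int pageCount = CountPages(itemHeights, pageHeight);
        var footers = new List<string>();
        for (int pageNumber = 1; pageNumber <= pageCount; pageNumber++)
            footers.Add($"Page {pageNumber}/{pageCount}");
        return footers;
    }
}
```

With five 4 cm items on a 10 cm page, two items fit per page, so the sketch reports three pages and the footers read Page 1/3, Page 2/3 and Page 3/3.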

Measurement and Arrangement

I’ve mentioned the terms “measurements” and “position calculations” a couple of times without explaining them, but since this is one of the most important routines in the report rendering, I would like to go into some detail.

Measuring and arranging differ between the processing classes, but the goal is common: to determine the exact bounds of the item in logical page coordinates. Calculating the items’ position in logical coordinates means that the report page size is not taken into account, because the paging hasn’t occurred yet. Instead, all of the items are considered placed on an endless canvas with top-left coordinates set to 0, 0. For simplicity, most calculations are performed in relative coordinates (relative to their parent item), but at a later stage they will be converted to absolute coordinates.

The measurement logic varies between the different processing items. When measuring a TextBox, we use GDI+ methods to calculate the space that its text will occupy. When measuring a PictureBox, we transform its image according to the report definition and determine what physical size (cm, mm, inch, etc.) it would have. The measurement is done recursively through the processing tree and every container item measures the bounds of its children. This way every container “knows” if it needs to grow in order to accommodate all of its children, or shrink in order to preserve space.

While the measurements determine the size of the items, their location is calculated by a separate routine called “arrangement”. This logic takes care to preserve the report layout as set in the designer. A very common example is shown in the image below:

![6-arranging-textboxes Designed layout has two boxes: [=Fields.LoremIpsum] and [=Fields.Translation]. Rendered document has two similar boxes with text that runs a full paragraph, the first in Latin, the second in English.](https://d585tldpucybw.cloudfront.net/sfimages/default-source/blogs/2021/2021-03/6-arranging-textboxes.png)

Here we have two TextBoxes stacked on top of each other. The top one’s text needs to be displayed on a few lines when rendered. If their positions remained the same, their contents would overlap. The arrangement logic “pushes” the second TextBox down so they stay the way they’re designed. The same logic is applied when an item, e.g. a CrossTab, grows horizontally and the items on its right side need to be pushed sideways.
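The “push down” behavior can be illustrated with a small sketch. It assumes a single column of items ordered by their designed top coordinate, which is far simpler than a real layout. The `Box` class and the `ArrangeSketch` helper are hypothetical.

```csharp
using System;
using System.Collections.Generic;

var boxes = new List<Box>
{
    new Box { Name = "loremIpsum",  Top = 0.0, DesignedHeight = 1.0, MeasuredHeight = 3.0 },
    new Box { Name = "translation", Top = 1.0, DesignedHeight = 1.0, MeasuredHeight = 2.5 },
};

ArrangeSketch.ArrangeVertically(boxes);

foreach (Box box in boxes)
    Console.WriteLine($"{box.Name}: top = {box.Top}, height = {box.MeasuredHeight}");

// A toy item: its designed position and size, plus the height found by measuring.
class Box
{
    public string Name { get; set; }
    public double Top { get; set; }
    public double DesignedHeight { get; set; }
    public double MeasuredHeight { get; set; }
}

static class ArrangeSketch
{
    // Shifts every item by the accumulated growth of the items above it,
    // so the designed gaps between consecutive items are preserved.
    public static void ArrangeVertically(IList<Box> boxes)
    {
        double shift = 0;
        foreach (Box box in boxes)
        {
            box.Top += shift;
            shift += box.MeasuredHeight - box.DesignedHeight;
        }
    }
}
```

Here the first box grows from 1 to 3 units, so the second one moves from top 1 to top 3 and still starts exactly where the first one ends, as in the designed layout.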

Paging

After all the items are measured and arranged, it’s time to place them on the pages. As I explained above, some rendering formats render their contents using logical paging on a single logical page, and others, on true physical pages. Let’s see how it would look if our example is rendered to PDF, which is a physical page rendering format.

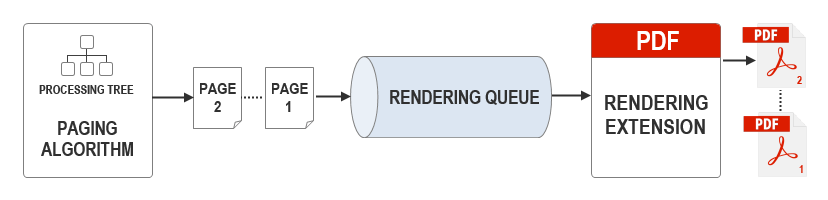

The engine generates a single page: a data structure that will contain one or more elements of the processing tree. The logic starts enumerating the processing elements in the hierarchy, creates their lightweight paging representations called “paging elements” and adds them to the page’s item collection. The process continues until it finds an element whose coordinates fall beyond the bounds of the page. That means the current page is already filled up and needs to be sent for rendering.
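A simplified version of this loop is sketched below. It assumes elements stacked in one column and always moves an element that does not fit to the next page as a whole, while the real engine can also split items. `PagingElement` and `PagingSketch` are hypothetical names, and the heights are arbitrary.

```csharp
using System;
using System.Collections.Generic;

var elements = new List<PagingElement> { new PagingElement("Group header A", 1.0) };
for (int i = 1; i <= 15; i++)
    elements.Add(new PagingElement($"Country {i}", 0.5));
elements.Add(new PagingElement("Group footer A", 1.0));

List<List<PagingElement>> pages = PagingSketch.Paginate(elements, pageHeight: 5.0);
for (int i = 0; i < pages.Count; i++)
    Console.WriteLine($"Page {i + 1}: {pages[i].Count} elements");

// A lightweight stand-in for a paging element: a label and a measured height.
sealed class PagingElement
{
    public string Name { get; }
    public double Height { get; }

    public PagingElement(string name, double height)
    {
        Name = name;
        Height = height;
    }
}

static class PagingSketch
{
    public static List<List<PagingElement>> Paginate(IEnumerable<PagingElement> elements, double pageHeight)
    {
        var pages = new List<List<PagingElement>>();
        var current = new List<PagingElement>();
        double used = 0;

        foreach (PagingElement element in elements)
        {
            // The element would fall beyond the page bounds, so the page is full.
            if (current.Count > 0 && used + element.Height > pageHeight)
            {
                pages.Add(current); // the engine would send the page for rendering here
                current = new List<PagingElement>();
                used = 0;
            }
            current.Add(element);
            used += element.Height;
        }

        if (current.Count > 0)
            pages.Add(current);
        return pages;
    }
}
```

With these numbers the header and the first eight countries fill the first page, and the remaining seven countries and the footer land on the second one.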

In previous versions of Telerik Reporting, the page preparation logic and page rendering logic were executed synchronously: when a page was prepared, the engine would start its rendering and wait until the rendering routine finished its job. We recognized this as an area for improvement, because the page preparation is usually faster than the actual rendering, so from R3 2020 onwards the rendering logic is executed asynchronously using the classic producer-consumer pattern. Instead of waiting for the rendering to end, the pagination logic starts preparing a new page once the previous one is declared finished. This is also where the complex logic for keeping items together kicks in: when an item spans more than one page, the engine goes through its definition and decides whether to move the whole element to the next page or to leave part of it on the current page and continue it on the following one.
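For readers less familiar with the producer-consumer pattern, here is a minimal sketch built on the standard `BlockingCollection<T>` class. The `PipelineSketch` class, the page numbers used as payload and the bounded queue size are assumptions made for the demo, and nothing here describes how the engine implements its queue.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

List<string> rendered = PipelineSketch.Run(pageCount: 3);
rendered.ForEach(Console.WriteLine);

static class PipelineSketch
{
    public static List<string> Run(int pageCount)
    {
        // Bounded queue (an assumption): pagination pauses if rendering falls too far behind.
        using var queue = new BlockingCollection<int>(boundedCapacity: 2);
        var rendered = new List<string>();

        // Consumer: renders pages as soon as they become available.
        Task consumer = Task.Run(() =>
        {
            foreach (int page in queue.GetConsumingEnumerable())
                rendered.Add($"rendered page {page}");
        });

        // Producer: prepares pages without waiting for the rendering of earlier ones.
        for (int page = 1; page <= pageCount; page++)
            queue.Add(page);

        // Signals that no more pages will come, which lets the consumer loop finish.
        queue.CompleteAdding();

        consumer.Wait();
        return rendered;
    }
}
```

Because the queue is first-in, first-out and there is a single consumer, the pages are rendered in the order in which they were prepared.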

Once a page is finished and added to the rendering queue, the paging algorithm creates a new page. It may be a new horizontal page, if the report contains elements that span horizontally across more than one page, or a new vertical page. The engine continues its enumeration of the processing hierarchy, adding the paging elements to the new page until it’s filled up, sends it for rendering, and repeats the routine until all the processing elements are enumerated. By this time the rendering process should certainly have started, so let’s see how it goes there.

Native Rendering

The workflow so far follows a common model—from the creation of the processing tree, through the measurements and arrangements and up to the paging routine—it is almost the same regardless of the rendering extension. The actual rendering algorithms, however, greatly differ from one another. They have a common input—the pages, added to the rendering queue by the paging algorithm—but their output could be a graphics file, an HTML page or a XAML writer.

Regardless of the selected output, the engine will always try to use the format’s native primitives when rendering. For example, when rendering a report containing a graph using the HTML rendering extension, the graph will be rendered as a piece of SVG markup rather than as a single bitmap image. This allows for better interactivity and high-quality rendering by the browser at any zoom level. However, rendering natively means that each rendering extension has different limitations imposed by the API we use. One such limitation is the rendering of tool tips. Some rendering formats, like HTML, support tool tips natively; others, like PDF, support them through text annotations; and some, like the image rendering formats, do not support tool tips at all. The limitations of each rendering extension are listed in a “design considerations” article in our documentation and should be examined before selecting a target rendering format for a report.

Let’s see how the actual PDF rendering of our report is performed. When the rendering process is started, the rendering extension creates an instance of a class called PdfDocument and sets its mandatory attributes like root structure, internal dictionaries, etc. When the pagination algorithm sends a page for rendering, the rendering routine picks it and starts traversing the elements placed on that page. Each element already has its page coordinates calculated and its text or other visual data evaluated.

The rendering extension keeps a collection of specific writer classes that are specialized in rendering a particular element type. So when an element needs to be written on the PDF page, the engine picks the appropriate writer based on the element type and calls its method that will write the element contents on the page. For example, the TextBox writer will start the writing process by configuring the text transformation matrix and open a new text object. Then it will obtain the current font used by the TextBox and make sure it exists in the PDF’s font dictionary. After that the writer will recalculate the coordinates to fit the current page, set the text position and output the text glyphs. If needed, the TextBox writer will also add text decorations like underline and strikethrough. Finally it will close the text object and restore the text transformation matrix. The procedure repeats for every paging element on the page until it is completely rendered using the native PDF primitives.
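The “one writer per element type” idea can be sketched with a dictionary lookup. Everything in this example is hypothetical: the element classes, the `WriterSketch` helper, and the output, which only imitates a few PDF content stream operators (BT/ET for a text object, Tf for the font, Td for the position, Tj for showing text) and is not a valid PDF page.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

var page = new List<PagingElement> { new TextElement("Austria"), new LineElement() };
Console.Write(WriterSketch.RenderPage(page));

abstract class PagingElement { }

sealed class TextElement : PagingElement
{
    public string Text { get; }
    public TextElement(string text) { Text = text; }
}

sealed class LineElement : PagingElement { }

static class WriterSketch
{
    // One writer per element type, looked up by the element's runtime type.
    private static readonly Dictionary<Type, Action<PagingElement, StringBuilder>> Writers =
        new Dictionary<Type, Action<PagingElement, StringBuilder>>
        {
            [typeof(TextElement)] = (element, output) =>
            {
                output.AppendLine("BT");                                    // open a text object
                output.AppendLine("/F1 10 Tf");                             // select font and size
                output.AppendLine("72 720 Td");                             // set the text position
                output.AppendLine($"({((TextElement)element).Text}) Tj");   // output the text
                output.AppendLine("ET");                                    // close the text object
            },
            [typeof(LineElement)] = (element, output) =>
                output.AppendLine("72 700 m 300 700 l S"),                  // stroke a straight line
        };

    public static string RenderPage(IEnumerable<PagingElement> elements)
    {
        var output = new StringBuilder();
        foreach (PagingElement element in elements)
        {
            if (!Writers.TryGetValue(element.GetType(), out var write))
                throw new NotSupportedException("No writer for " + element.GetType().Name);
            write(element, output);
        }
        return output.ToString();
    }
}
```

Adding support for a new element type then means registering one more writer, without touching the loop that walks the page.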

Control the Rendering Extension

Each rendering extension has settings that can be configured through a class called device information settings. These settings can be set programmatically or through the application configuration file—app.config for .NET Framework applications or appsettings.json for .NET Core apps.

When rendering a report programmatically with the ReportProcessor class, the device information settings are represented by a Hashtable instance. The keys in that hashtable are the names of the settings as listed in the corresponding documentation article. The value for each key must either be one of the values allowed for that key or match the expected value type. All the supported keys and their values are listed in the documentation article for each rendering extension.

For example, if we want to have all the reports rendered in PDF to comply with the PDF/A-1b standard, we have to add a configuration section in our app.config file like the one below:

    <Telerik.Reporting>
      <extensions>
        <render>
          <extension name="PDF">
            <parameters>
              <parameter name="ComplianceLevel" value="PDF/A-1b"/>
            </parameters>
          </extension>
        </render>
      </extensions>
    </Telerik.Reporting>

If we need to do it programmatically, we can initialize the device information settings while passing them to the Report Processor:

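    using System.Collections;

    // Load the report definition from a file.
    var reportSource = new Telerik.Reporting.UriReportSource() { Uri = "Countries.trdp" };

    // Device information settings: the keys are the setting names of the PDF rendering extension.
    var deviceInfo = new Hashtable() { { "ComplianceLevel", "PDF/A-1b" } };

    // Process and render the report to PDF in a single call.
    var result = new Telerik.Reporting.Processing.ReportProcessor().RenderReport("PDF", reportSource, deviceInfo);

The device info settings that are passed programmatically take precedence over the ones set in the configuration file.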

Rendering Performance

The time the engine needs to finish the rendering stage mostly depends on the structure of the report. Naturally, the more items the engine has to render, the slower it will go. In many cases the number of items in the report can be reduced without affecting the layout, and below I will provide a couple of examples.

A common mistake is to use separate TextBox items to form a sentence or just a line of text. Consider the following design:

![8-rendering-performance-design-mistake In four separate boxes: Recipient: , Mr. , [=Field.FirstName] , [=Fields.SecondName]](https://d585tldpucybw.cloudfront.net/sfimages/default-source/blogs/2021/2021-03/8-rendering-performance-design-mistake.png)

Here we have four TextBoxes that form the recipient title and names. All of them can be replaced with a single TextBox with the following expression:

    Recipient: Mr. {Fields.FirstName} {Fields.SecondName}

Besides reducing the number of TextBoxes to process and render, this approach will also improve the look of the rendered document, because the original design would leave gaps between the first and second names.

Another example of using redundant report items is a scenario where Shape items were used to draw borders between TextBoxes in an attempt to create a grid-like layout. Since the Shape items were placed in the details section, the processing tree was overburdened with an enormous amount of processing Shape elements that needed to be measured, arranged and rendered in the output document. All this could be avoided by simply using the Borders property of the TextBox elements—and that would even provide a better result.

Besides the number of report items, their positioning is also very important and can hurt performance if done incorrectly. The most common mistake is to allow a report item to grow sideways, thus causing an additional horizontal page to be created. The pagination engine will preserve this layout and will keep generating blank pages, although there are no report items on them. In our latest release, R1 2021, we introduced an improvement that skips blank pages by default, but they will still be generated, so it’s better to make sure the report items do not go outside the designed page size.

Similar to the processing stage, the engine keeps track of the elapsed time during the rendering stage and outputs it in the Trace stream once it’s done. This time measurement can be useful while fine-tuning a report and looking for the best performance.

Wrap-up

The rendering engine in Telerik Reporting is an elaborate set of rules and procedures. Although quite mature, it is constantly being improved and optimized in order to deliver a rich feature set and the best performance without sacrificing usability. Due to its complexity, it is not always straightforward to achieve the desired results, even for experienced users. With this article I believe I managed to describe in detail the main stages in the report lifecycle and answer the questions you may have had. I hope you enjoyed reading the article at least as much as I enjoyed writing it. In case I failed to explain some of the concepts well enough, or just skipped a particularly interesting subject, I would appreciate it if you'd drop a line or two in the comments below.

Ivan Hristov

Ivan Hristov has been a software developer in the Telerik Reporting division since 2013.

When not at work, he might be seen biking/skiing with his daughter, reading or discussing influential movies with friends. Feel free to reach out to him through LinkedIn.