How Our Telerik Teams Test Software: Chapter One

An Introduction

You’re reading the first post in a series intended to give you a behind-the-scenes look at how the Telerik teams, which are invested in Agile, DevOps and TDD development practices, go about ensuring product quality. We’ll share insights around the practices and tools our product development and web presence teams employ to confirm we ship high-quality software. We’ll go into detail so that you can learn not only the technical details of what we do but also why we do it. This will give you the tools to become testing experts.

Important note: After its completion, this series will be gathered up, updated/polished and published as an eBook. Interested? Register to receive the eBook by sending us an email at telerik.testing@telerik.com.

Chapter One: How We Test Our Own Test Automation Software: Test Studio

Test Studio ships three major releases per year, each followed by a Service Pack with new features and enhancements, as well as numerous minor releases in the form of internal builds with bug fixes and small feature improvements.

For major releases we define two important timeframes:

- Feature freeze: Three weeks before the release date, the development of new features freezes; QA ensures features are functional and pass the acceptance criteria

- Code freeze: Two weeks before the release date, developers fix only blocking and critical bugs that are related to the new features and logged by QA; check-ins of new code are not allowed

For service packs, we define one milestone:

- One week before the release date, we implement a code freeze, during which QA tests the product and verifies that it can be released

Telerik Test Studio Team’s Approach to Testing

- We use continuous integration to run unit tests on critical features (Licensing, for example) around the clock

- We run regression tests through UI automation to cover the UI and the functionality exposed through it, including E2E scenarios for each major feature

Note: A key approach is to prepare automation for new features before the release, so that daily automation runs can verify last-minute priority changes prior to release

- We do manual functional testing for new features, including random testing and exploratory testing for small features

- Code and test reviews for each check-in are a requirement

- The team creates Acceptance Criteria for features approved by PM, Development and QA

- The team runs browser compatibility tests for each browser and after the latest browser updates

- We maintain different environment setups to ensure all major customer scenarios are supported, and we run tests against them (OS, browsers, Visual Studio, CI tools, source control tools, TeamPulse, third-party integration tools, databases, etc.)

Automating the Testing of Test Studio

We exclusively use Telerik Test Studio to automate Test Studio, and by doing so we provide internal feedback on the product. In addition, for some specific scenarios, we use MS UI Automation calls in our .tstest files to simulate specific Windows user interactions that are then handled by Test Studio, such as showing a context menu in a browser during Test Studio recording. For some other custom scenarios, we call SoapUI web service tests from within Test Studio tests and analyze the output to cover end-to-end (E2E) cases. Key approaches we use for the automation include:

- Automation IDE

We use Visual Studio with the Telerik Test Studio plugin for the automation project, for both test and code maintenance. The project contains more than 30,000 lines of code, which calls for a sophisticated IDE such as Visual Studio, and our primary application under test is the Test Studio standalone product.

- Dedicated Automation Environments

- 2.1. We use dedicated test automation environments (Windows Server 2008 R2) that are configured identically in terms of installed software

- 2.2. We use a specific user account for automation (automation user) with a preconfigured local folder structure

- 2.3. We have configured the group policies of the automation environments NOT to lock the machine, so automation tests can run unobstructed

- 2.4. We connect remotely to the environments using MS Remote Desktop Connection and resize the remote connection window when running automation, so the active user session is not lost during automation execution


- Test Creation and Maintenance

- 3.1. We use Telerik Test Studio tests (.tstest) exclusively, relying in particular on WPF recording to create and maintain tests (Run To Here/From Here/Selected)

- 3.2. We have more than 12,000 unique elements in the Automation project; we leverage offline test step creation using our powerful Step Builder functionality

- 3.3. We use Coded Steps within .tstest files for more complex tasks (e.g. working within virtualizing containers or scrolling to a specific point), for repeated actions/verifications (performing the same task multiple times with different parameters) and for .NET-specific calls (working with system threads, IO or processes, for example)


- Code Reuse

Common tests, automation helper classes, and global constants and variables are heavily used and recommended by the Test Studio testing team. The rule of thumb: if the same chunk of test steps or code is used more than twice across the project, it should be moved to a common test or shared code.
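As an illustration of that rule of thumb, here is a minimal sketch of what a shared helper class and a constants class might look like. All names and values (`AutomationConstants`, `AutomationHelper`, `BuildUniqueName`, `Retry`) are hypothetical and are not taken from the actual Test Studio automation project.

```csharp
using System;
using System.Threading;

// Hypothetical shared constants; the real project's names and values are not shown in this post.
public static class AutomationConstants
{
    public const string DefaultTestListName = "SmokeList";
    public const int DefaultRetryCount = 3;
}

// Hypothetical helper class intended to be called from coded steps in many tests.
public static class AutomationHelper
{
    // Builds a name such as "SmokeList_ParentTestA" so each caller gets its own artifact.
    public static string BuildUniqueName(string baseName, string suffix)
    {
        if (string.IsNullOrWhiteSpace(baseName))
            throw new ArgumentException("Base name is required.", nameof(baseName));
        return string.IsNullOrWhiteSpace(suffix) ? baseName : baseName + "_" + suffix;
    }

    // Repeats a check until it passes or the attempts run out; returns whether it passed.
    public static bool Retry(Func<bool> check, int attempts, int delayMs)
    {
        for (int i = 0; i < attempts; i++)
        {
            if (check()) return true;
            Thread.Sleep(delayMs);
        }
        return false;
    }
}
```

A coded step could then call, for example, `AutomationHelper.BuildUniqueName(AutomationConstants.DefaultTestListName, "ParentTestA")` instead of repeating the string handling in every test.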

- 4.1. Automation Helper Classes with different methods and functions to be reused from coded steps

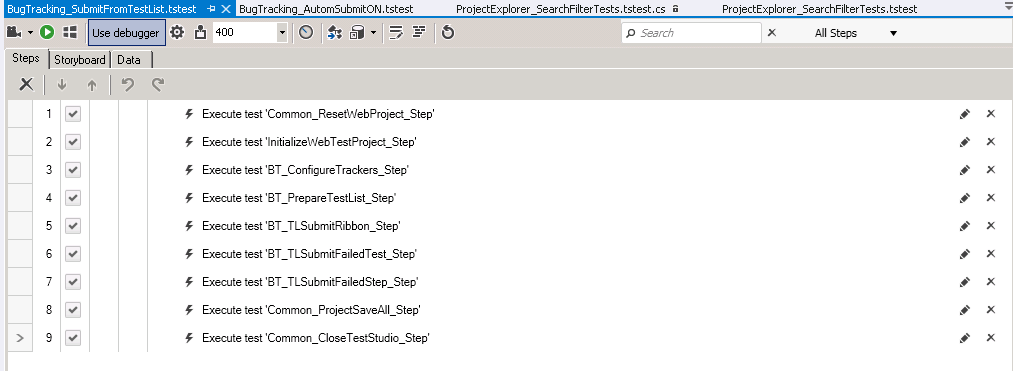

- 4.2. Common .tstest tests that are reused by multiple tests using Test As Step functionality: between 30% and 40% of all tests in the project are Common tests. Here is how a main test can look, using only common tests:


- Databinding

- 5.1. Databinding is used in various situations in tests to iterate over the same steps with different data. Another powerful use is with Common tests that execute the same steps but with different data taken from the parent test that calls them, using the InheritParentData property of the child test (e.g. a Common test creates a new Test List and the name of the list is different when called from different parent tests)

- 5.2. Another useful application of Databinding is to change a data value at runtime, when a test executes the same steps twice with different data in the same iteration. This can be achieved with a coded step like the following, placed before the new data is used:

    // change the TestName value of the first data row at runtime
    var row = (System.Data.DataRow)this.ExecutionContext.DataSource.Rows[0];
    row["TestName"] = "test2";

- 5.3. Another form of reuse applies to elements with the same find expressions and a varying TextContent value in their identification logic: using a DataBind Find Expression, such an element can be used in various steps in different tests


- Specifics Around Testing WPF/Silverlight—WaitForNoMotion Step Property

UI automation in general is not as fast as API or low level automation, since the tester has to ensure the UI is properly visualized and refreshed before a certain test step is executed. In Test Studio there is a Step property called WaitForNoMotion for WPF/SL elements that takes care of this. Appropriate usage of this property can save execution time without compromising test stability.

Example: when opening a new window, the first step (verification or action) should always have WaitForNoMotion=true to ensure the UI is fully loaded, which can take 1-2 seconds before the step executes. However, if this initial step is followed by, say, 20 other steps that check the UI or act on controls that don’t change in the current context, setting WaitForNoMotion=false for those steps removes the 1-2 second per-step delay, because we already know the UI is loaded. This saves some 10-20 seconds and accelerates execution. When this approach is applied to a large number of steps in big projects, the accumulated time savings can add up to an hour.

- Execute MS UI Automation Within Telerik Test Studio Test

There are cases when we want to simulate Windows user actions while the Telerik Test Studio engine is running to see if it behaves properly—for example simulating user actions while the Test Studio recorder is running to see if steps are recorded properly. We do this by defining helper classes with common methods using MS UI Automation:

    using System;
    using System.Windows.Automation;
    using System.Windows.Forms; // Cursor

    public static void ShowContextMenu(AutomationElement element, int inpos, int offset)
    {
        Assert.IsNotNull(element, "The input element is null - stop execution");
        var p = element.Current.BoundingRectangle;

        // move the cursor to the first position inside the element and wait
        System.Drawing.Point poi = new System.Drawing.Point(Convert.ToInt32(p.X) + inpos, Convert.ToInt32(p.Y + inpos));
        Cursor.Position = poi;
        System.Threading.Thread.Sleep(3000);

        // move the cursor to the second position and wait again
        poi = new System.Drawing.Point(Convert.ToInt32(p.X) + offset, Convert.ToInt32(p.Y + offset));
        Cursor.Position = poi;
        System.Threading.Thread.Sleep(2000);
    }

- Call External Processes Within Test Studio Test

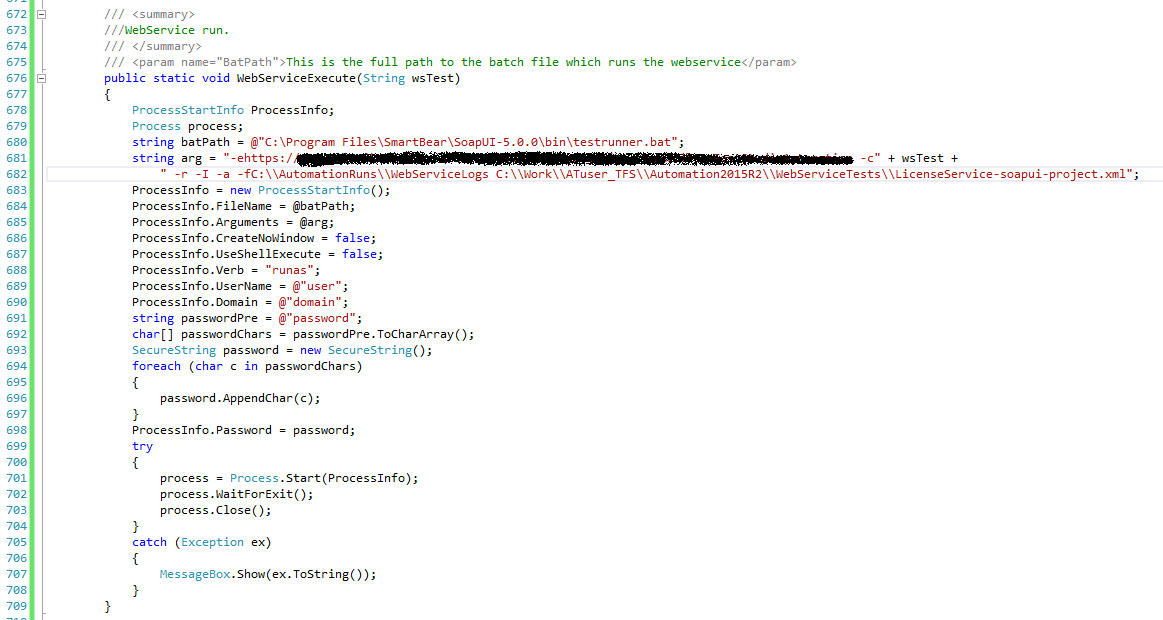

For certain E2E scenarios, we perform actions from within the UI that should update a database or send server API calls, using REST Web Services. For example, we unregister a machine key from the server from within the UI and check whether the UI reports success or failure. We then double-check by calling the server directly to verify that the result returned to the UI was correct; once we get the server’s response, we verify it in a coded step in the same test.

Another common use of REST Web Services is to restart, for example, specific Windows WCF services on a local or remote machine to ensure a clean, un-cached environment state.
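The UI-versus-server cross-check described above could look roughly like the sketch below. It is only an illustration under stated assumptions: the class and method names are hypothetical, the URL is supplied by the caller, and the convention that the server answers 404 for an unregistered key is invented for the example rather than taken from the real Telerik service.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

public static class ServerCrossCheck
{
    // Assumption: the hypothetical endpoint answers 404 Not Found once the key
    // is unregistered and 200 OK while it is still registered.
    public static bool IsUnregistered(HttpStatusCode serverStatus)
    {
        return serverStatus == HttpStatusCode.NotFound;
    }

    // True when the outcome shown in the UI agrees with what the server reports.
    public static bool UiMatchesServer(bool uiReportedSuccess, HttpStatusCode serverStatus)
    {
        return uiReportedSuccess == IsUnregistered(serverStatus);
    }

    // Asks the server directly for the key's state; the URL is supplied by the caller.
    public static async Task<HttpStatusCode> QueryKeyStatusAsync(HttpClient client, string keyUrl)
    {
        using (HttpResponseMessage response = await client.GetAsync(keyUrl))
        {
            return response.StatusCode;
        }
    }
}
```

In a coded step, the status could be fetched synchronously with `QueryKeyStatusAsync(client, url).GetAwaiter().GetResult()` and the result of `UiMatchesServer` asserted. Keeping the comparison in a pure method makes it easy to verify without a live server.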

- Overriding Telerik Test Studio Execution Extension Methods

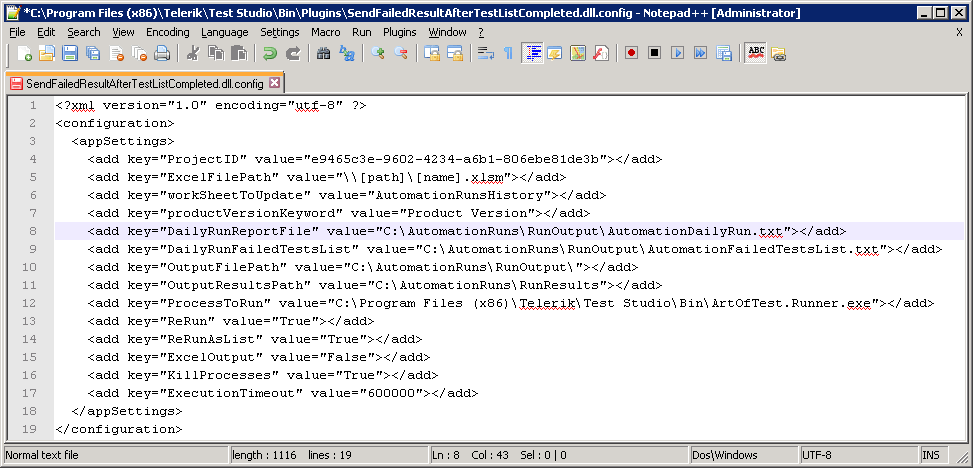

The Execution Extension is a very powerful option for customizing execution and plugging in to external APIs and sources for external tool integration, reporting, setup of pre- and post-conditions, etc.

- 9.1. We build our execution extension and deploy it in [Test Studio installation folder]\Bin\Plugins so it is triggered for each run. Because we only need the Automation project to be tracked and processed by it, we filter by projectID inside the extension, so its logic does not run for any other project during execution:

    // projVersion holds the ID of the Automation project (read from the configuration file)
    string projVersion;
    if (executionContext.Test.ProjectId.ToString() == projVersion)

- 9.2. We use an extension configuration file (.config) in which we have abstracted out certain variables, so we don’t need to rebuild and redeploy the extension every time we want to change a key parameter; we only change the value in the configuration file:

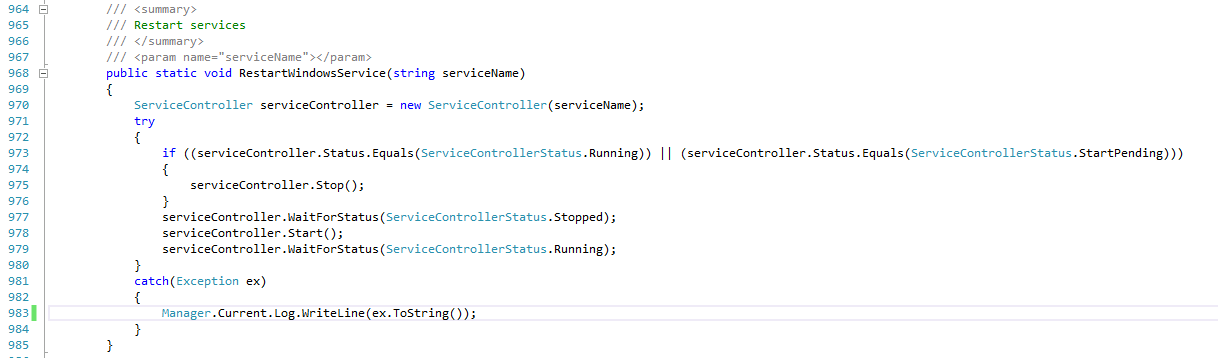

- 9.3. Ensuring clean, un-cached test conditions: Pre-conditions and post-conditions are crucial for reliable execution when unexpected events happen or execution stops before some external tasks are finalized. For such cases we use the OnBeforeTestStarted() method to kill all known processes that can affect the test execution:

    public void OnBeforeTestStarted(ExecutionContext executionContext, ArtOfTest.WebAii.Design.ProjectModel.Test test)
    {
        if (iskillenabled)
        {
            // kill known processes that could interfere with the test
            procsToKill = new string[] { "iexplore", "Chrome", "Safari", "firefox", "ExpenseItStandalone", "LogonScreen", "WerFault", "wcat", "WpfApplication1", "WpfApplication2", "WpfApplication3", "devenv", "excel", "slauncher", "vsjitdebugger", "plugin-container" };
            foreach (string pr in procsToKill)
            {
                KillProcessByName(pr);
            }

            // scheduling tests additionally get freshly restarted services
            if (executionContext.Test.Path.StartsWith("Scheduling"))
            {
                RestartWindowsService(@"Telerik Storage Service");
                RestartWindowsService(@"Telerik Scheduling Service");
            }
        }
    }

- 9.4. Test Execution timeout: In some cases, modal dialogs or other unexpected events make the execution “hang,” preventing it from proceeding with the rest of the test steps, and eventually the entire nightly automation run might fail. For such cases, we have implemented a Test Execution timeout approach, in which we use separate threads to monitor execution and forcibly stop hanging processes if the test doesn’t finish within the predefined timeout:

    System.Timers.Timer stopWatch;

    public void OnBeforeTestStarted(ExecutionContext executionContext, ArtOfTest.WebAii.Design.ProjectModel.Test test)
    {
        // start a watchdog timer before each test
        stopWatch = new System.Timers.Timer(timeout);
        stopWatch.Elapsed += StopWatchElapsed;
        stopWatch.Start();
    }

    private void StopWatchElapsed(object sender, System.Timers.ElapsedEventArgs e)
    {
        // timeout reached: kill processes that may be hanging
        procsToKill = new string[] { "iexplore", "Chrome", "Safari", "firefox", "ExpenseItStandalone", "LogonScreen", "WerFault", "wcat", "WpfApplication1", "WpfApplication2", "WpfApplication3", "devenv", "excel", "slauncher", "vsjitdebugger", "plugin-container" };
        foreach (string pr in procsToKill)
        {
            KillProcessByName(pr);
        }
    }

    public void OnAfterTestCompleted(ExecutionContext executionContext, TestResult result)
    {
        // test finished in time: stop the watchdog
        stopWatch.Stop();
    }

- 9.5. Re-run failed lists: We re-run all failed tests from a Test List immediately after it is executed, to check that each failure was not random. That way we can quickly filter out random failures and focus our efforts on real errors, which are more urgent; later we can investigate why the first failure happened and stabilize or adjust the automation test to make it more robust for future runs. We prepare the Test List at runtime:

    public void OnBeforeTestListStarted(TestList list)
    {
        // create product version specific variable
        outputDailiyFile = "DailyRun" + list.CurrentVersion.ToString();

        // read configuration file
        if (String.IsNullOrEmpty(projVersion)) ReadDLLConfiguration(outputDailiyFile);

        // execute this section only for the Automation project
        if (projVersion == list.ProjectId.ToString())
        {
            isCurrentProject = true;

            // prepare execution to re-run failed tests
            if (!rerun || list.TestListName.Contains("FailedReRun")) return;

            // create new TestList object
            failedTL = new TestList(list.TestListName.ToString() + "(FailedReRun)", "Automatic", ArtOfTest.WebAii.Design.TestListType.Automated);
            failedTL.ProjectId = list.ProjectId;
            failedTL.Id = list.Id;
            failedTL.Settings = list.Settings;
        }
    }

And we execute it at runtime as well:

    public void OnAfterTestListCompleted(RunResult result)
    {
        // only execute if re-run failed tests is selected
        if (!rerun || !isFailed) return;

        // get the list of failed tests for re-run
        var failedtestnames = result.TestResults.Where(x => x.Result == ArtOfTest.Common.Design.ResultType.Fail).ToList();
        if (rerunAsList)
        {
            foreach (TestResult tr in failedtestnames)
            {
                failedTL.Tests.Add(new TestInfo(tr.TestId.ToString(), tr.TestPath.ToString()));
            }
            failedTL.SaveToListFile(projRoot);
            StartAOTProcess(failedTL.TestListName.ToString() + ".aiilist", projRoot);
        }
        else
        {
            foreach (TestResult tr in failedtestnames)
            {
                StartAOTProcess(tr.TestPath.ToString(), @projRoot);
            }
        }
    }

- 9.6. Reporting: We use the OnAfterTestCompleted() and OnAfterTestListCompleted() methods to output the status result of the execution for each test to external files in several formats (.txt, .csv, .xls). These are integrated with our reporting systems, where we can quickly visualize results, generate our custom reports or debug automation run output
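The `KillProcessByName` helper called in 9.3 and 9.4 is not shown in this post. A minimal sketch of how such a helper might be written with the standard `System.Diagnostics.Process` API follows; the class name `ProcessCleanup`, the return value and the 5-second wait are assumptions made for the example, not the team's actual implementation.

```csharp
using System;
using System.Diagnostics;

public static class ProcessCleanup
{
    // Requests termination of every running process with the given name
    // (without the ".exe" suffix) and returns how many kill requests succeeded.
    public static int KillProcessByName(string processName)
    {
        int killed = 0;
        foreach (Process process in Process.GetProcessesByName(processName))
        {
            try
            {
                process.Kill();
                process.WaitForExit(5000); // wait up to 5 s for the process to exit
                killed++;
            }
            catch (Exception ex) when (ex is InvalidOperationException
                                    || ex is System.ComponentModel.Win32Exception
                                    || ex is NotSupportedException)
            {
                // The process already exited or cannot be terminated; continue with the rest.
            }
            finally
            {
                process.Dispose();
            }
        }
        return killed;
    }
}
```

Swallowing the per-process exceptions is a deliberate choice in this sketch: a cleanup step that runs before every test should not itself abort the run when a process has already exited.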

- Execution

We use PowerShell scripts to install and configure the product, run Test Lists using the ArtOfTestRunner command-line tool, prepare data for execution reports, configure certain pre- and post-conditions and send emails with execution results. We schedule these scripts with Windows Task Scheduler.

Did you find these tips helpful? Stay tuned for the next chapter and let us know your feedback. And don't forget to register to receive the eBook that will be put together when this series is complete.

If you are interested in trying the latest Test Studio, feel free to go and grab your free trial.

Daniel Djambov

Daniel was the QA Architect of the Telerik ALM and Testing division.