The Importance of Technical SEO in Website Design

Technical SEO plays a crucial role in website discoverability, ranking and user experience. Let’s look at nine factors that contribute to technical SEO and what web designers and developers can do to account for them during website development.

Everyone wants a writer who can optimize their content with keywords and a marketer who can get them high-authority backlinks. But what about web designers and developers? You play a crucial role in making sure the technical side of a website is optimized for better search performance.

In this post, we’re going to look at why you can’t afford to ignore technical SEO when building a website and nine technical factors that contribute to how well or poorly your sites will rank.

Why is Technical SEO So Important?

There are three main prongs of search engine optimization.

On-page SEO has to do with what we do to content. It needs to be:

- Readable

- Relevant

- Valuable

- Authoritative

- Keyword-optimized

- Well-structured

- Internally linked

If search engine bots can’t understand what the content is about, or readers can’t get any value from it, the page won’t rank well.

Off-page SEO has to do with off-site activities that boost the authority of a website.

In some cases, we have control over off-page SEO. For instance, Google Business Profiles that are well-reviewed by customers can benefit websites. These profiles pass traffic and authority to the connected website.

Off-page SEO isn’t always within our control, though. High-authority backlinks and positive reviews are highly coveted because of what they can do for a site’s ranking. However, unless you’re a well-established writer, entrepreneur or celebrity, they can be difficult to earn organically.

Technical SEO is all about the mechanics of a website and how they impact, first and foremost, the crawlability and indexing of your pages. Technical SEO factors also impact the actual performance and usability of a website.

A poorly executed technical SEO plan could mean that your website or some of its pages don’t show up in search results. If people can’t find your site, that’s a huge problem.

A lack of technical SEO could also mean that your pages rank, but when visitors open them, they have to wait 15 seconds or longer for the content to load. (Or, rather, they don’t wait and instead back out to the search results to find a faster site.)

It could also mean that the site is confusing to navigate, leads to too many 404 dead-ends or is difficult to use on a smartphone.

So it’s not just a search engine’s ability to crawl a site that can be negatively impacted. A lack of technical SEO or poor execution of it can create a poor user experience.

9 Technical SEO Factors You Should Be Paying Attention to

When you’re building a website, a technical SEO checklist will come in handy. You can use the following list to put yours together.

I’d also recommend using Google’s technical SEO tools—Google Search Console and PageSpeed Insights—once your site goes live. They’ll help you assess how successful the technical optimization was.

1. XML Sitemap

An XML sitemap is a file that typically lives at the root of a website. It’s not meant to be accessed by humans. It’s there so that search bots can find all of the URLs (and other content, like image files) on a website. It also helps search engines understand the overall structure and organization of the site.

Some content management systems will automatically generate an XML sitemap of a website. That’s helpful since the last thing you want to do is have to piece together an XML sitemap for a massive website or shop.
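If your CMS doesn’t generate one and you’d rather script it than use an online generator, the sitemap format is simple enough to build with Python’s standard library. The sketch below follows the sitemaps.org protocol; the URLs and `lastmod` dates are placeholders you’d swap for your real pages (pulled from your CMS or database, for instance).

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (UTF-8 bytes) for an iterable of (url, lastmod) pairs.

    lastmod is a W3C date string such as "2023-10-15".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, < and > for us
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Placeholder pages; swap in the real URLs from your site
pages = [
    ("https://yourdomain.com/", "2023-10-01"),
    ("https://yourdomain.com/blog/technical-seo/", "2023-10-15"),
]

print(build_sitemap(pages).decode("utf-8"))
```

Save the output as sitemap.xml at the site root. The protocol caps a single sitemap at 50,000 URLs (and 50 MB uncompressed), so larger sites split their URLs across several sitemaps and list them in a sitemap index file.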

You can also use XML-Sitemaps.com to generate one for you.

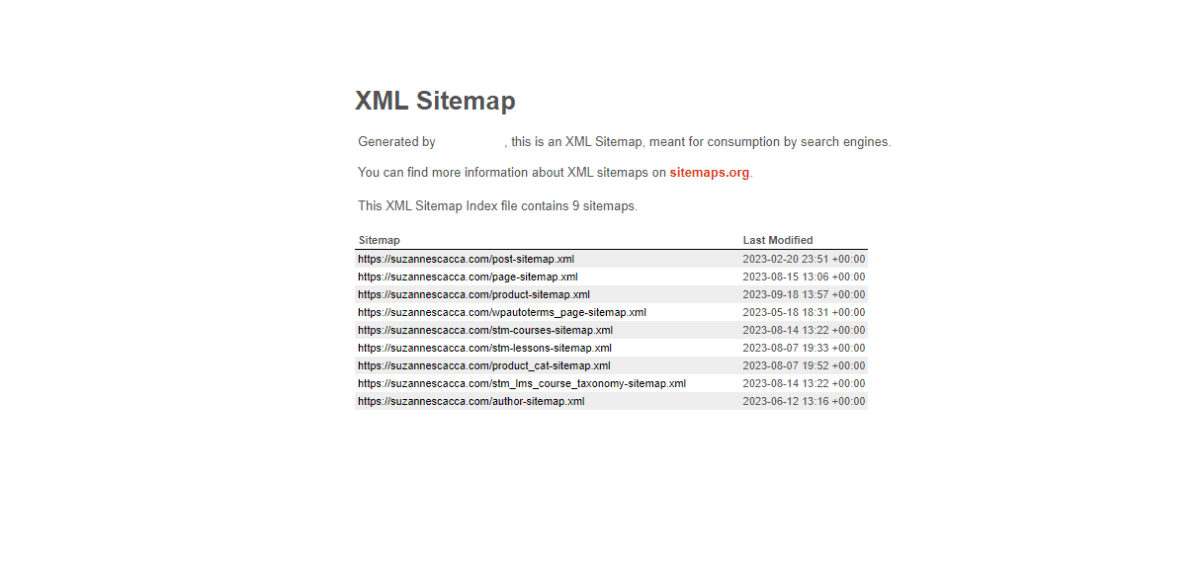

When it’s ready, it’ll look something like this:

This is the XML sitemap for my website. It organizes my content into separate sitemaps for pages, posts, products, courses and more. You can also add sitemaps for categories, tags, authors, etc.

Generating the XML sitemap is an important first step. You also need to submit it to the search engines.

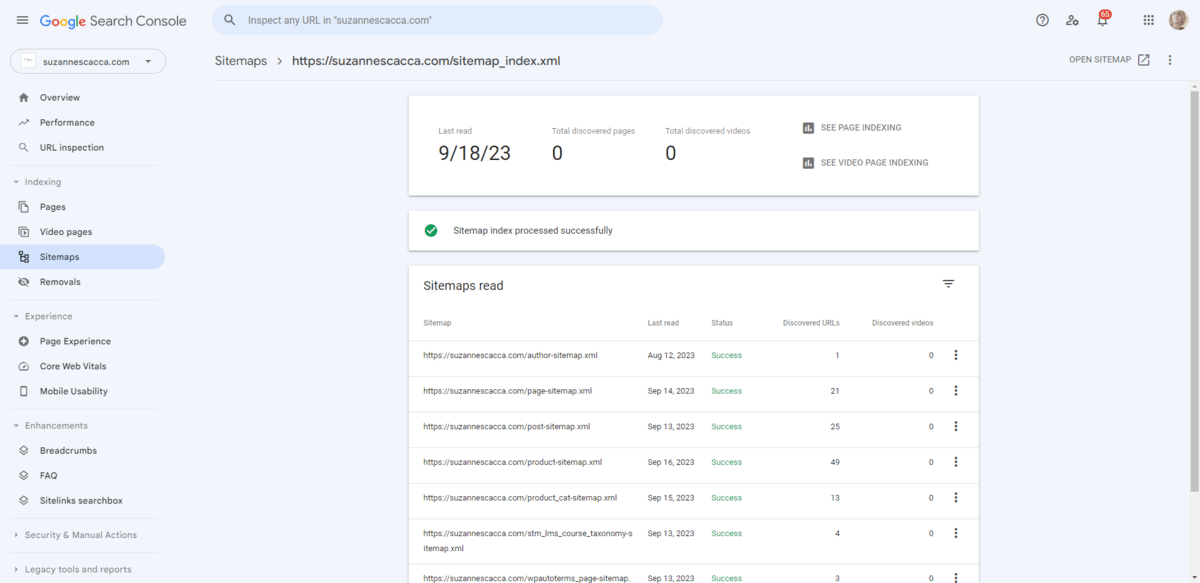

Start with the most popular search engines. For Google, you’ll submit the sitemap (all you need is the link to it) via Google Search Console. For Bing, you’ll use Bing Webmaster Tools.

Check in a few days later to ensure that your sitemap was successfully submitted. For instance, this is what it will look like in Google Search Console:

It should match the XML sitemap you generated exactly.

This doesn’t mean that every page on your site has now been crawled and indexed though. To see if Google has detected all of your content, refer to the Pages tab. This will tell you which pages are indexed and which ones aren’t.

In some cases, pages won’t be indexed because they’re old and deleted. In other cases, it’s because there’s something in your website blocking the search engines from indexing. We’ll cover some of those reasons next.

2. Robots.txt

The robots.txt file is another file that lives at your site’s root and is meant for search engines’ “eyes” only. It provides rules on which parts of a website search bots are allowed to crawl.

You probably won’t need to set up the robots.txt file yourself, as your CMS or web host will do it for you. However, you will have to write custom rules if you want to, for instance, block Google from crawling your site. In that case, you’d add a rule that looks like this:

```text
User-agent: Googlebot
Disallow: /
```

That’s not really a practical application of the robots.txt file unless your website is going to be under construction for a long time.

Instead, you’ll use it to keep search engines from poking around in folders and files related to the administration of your site. You can also use it to stay out of directories related to plugins or themes you’ve installed.
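To sanity-check rules like these before deploying them, you can feed a draft robots.txt to Python’s built-in `urllib.robotparser` and ask which URLs a given crawler may fetch. The paths below are hypothetical, modeled on a WordPress install (`/wp-admin/`, `/wp-content/plugins/`); substitute your own admin, plugin and theme directories.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules: keep every crawler out of admin and plugin folders
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-content/plugins/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for path in ["/", "/blog/technical-seo/", "/wp-admin/options.php", "/wp-content/plugins/cache/"]:
    verdict = "allowed" if parser.can_fetch("Googlebot", "https://yourdomain.com" + path) else "blocked"
    print(f"{path}: {verdict}")
```

Python’s parser implements basic prefix matching but not every extension Google supports (such as `*` and `$` wildcards in paths), so treat this as a quick local check and confirm the live file in Google Search Console.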

3. Head Tags

There are also ways to communicate with search engines about which individual pages to view, index or stay away from.

To do this, you’ll have to create a rule and insert it into the `<head>` of the page as a meta tag that looks like this:

```html
<meta name="robots" content="noindex">
```

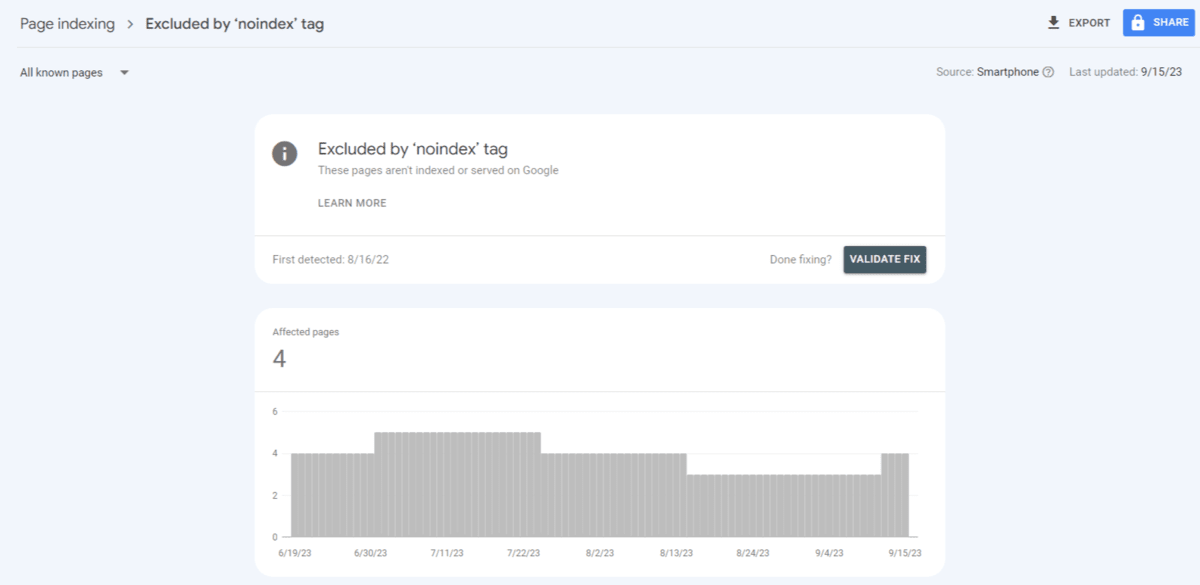

This will block all search engines from indexing the page that the tag is found on. If you go into Google Search Console, you should see your noindex pages under the Pages > Page indexing > Excluded by ‘noindex’ tag area.

You can see here that four of my pages are marked as noindex. If you scroll down, Google will tell you which pages are affected. If any of them don’t belong there, remove the noindex tag and then request indexing again.
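Stray noindex tags are easy to miss, especially when they’re left over from a staging site. As a rough pre-launch check, the sketch below scans a page’s HTML with Python’s standard `html.parser` and reports whether a robots meta tag contains `noindex`. The class and function names are hypothetical, and the check only covers the meta tag, not directives sent in HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; values keep their case
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindex(html: str) -> bool:
    """Return True if any robots meta tag on the page includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(is_noindex('<head><meta name="robots" content="noindex, nofollow"></head>'))
```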

You can also use `<head>` tags to mark up canonical content.

While it’s not ideal to have duplicate content on a website, there are some cases where it’s necessary—like when running A/B tests. A canonical tag is a way of telling the search engines which of the pages is the original copy and the one that should be indexed and ranked. Any duplicate content found on the site will then be ignored or deprioritized in search results.

To add this markup to the `<head>`, use a tag that looks like this:

```html
<link rel="canonical" href="https://yourdomain.com/main-page/" />
```

Duplicate content can get you in trouble with search engines as well as confuse your users, so this particular `<head>` tag will come in handy.
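If your templates generate canonical tags, one approach is to normalize every variant of a URL to a single form. The sketch below assumes a hypothetical set of rules (force HTTPS, lowercase the host, drop query strings and fragments, add a trailing slash) that you’d adapt to your own URL conventions.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str) -> str:
    """Hypothetical normalization: https, lowercase host, no query/fragment, trailing slash."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if not path.endswith("/"):
        path += "/"
    return urlunsplit(("https", parts.netloc.lower(), path, "", ""))


def canonical_tag(url: str) -> str:
    """Render the <link rel="canonical"> tag for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}" />'


print(canonical_tag("http://YourDomain.com/main-page?variant=b#pricing"))
# <link rel="canonical" href="https://yourdomain.com/main-page/" />
```

Dropping every query string is only safe when parameters never change the content (A/B variants or tracking codes, say); pagination or filter parameters may need to stay, and file URLs ending in `.pdf` shouldn’t get a trailing slash.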

4. Information Architecture

Search engines don’t just pay attention to the XML sitemaps that you create for them. They’re also going to examine your website’s architecture, which includes the navigation, footer links, internal link structure, breadcrumbs and pagination.

A website’s information architecture (IA) plays a critical role in the user experience. If visitors can’t take one look at your navigation and understand what your site is about and how to get around it, that’s a major problem.

The internal link structure can tell search engines and users a lot about the quality of your site as well.

Internal links help search engines discover new and related pages that they may not have had time to crawl or index yet, or that they had a hard time finding on their own or via the sitemap.

Internal links also enhance the user experience when used correctly.

They can help users find more information and relevant recommendations, ensuring that they get the most out of the website while they’re there. Internal links also help with discoverability, since the main navigation has limited space and might not contain every page, especially on a website with hundreds or thousands of pages.
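One way to see how well your internal links support discoverability is to treat the site as a graph and run a breadth-first search from the homepage. That gives each page’s click depth and exposes orphan pages that nothing links to. The link graph below is hypothetical; in practice you’d build it from a crawl of your own site.

```python
from collections import deque

# Hypothetical internal-link graph: each page maps to the pages it links to
LINKS = {
    "/": ["/products/", "/blog/"],
    "/products/": ["/products/baby-romper/"],
    "/blog/": ["/blog/technical-seo/"],
    "/blog/technical-seo/": ["/products/baby-romper/"],
    "/products/baby-romper/": [],
    "/old-landing-page/": ["/"],  # links out, but no page links to it
}


def click_depths(graph, start="/"):
    """Breadth-first search: minimum number of clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths(LINKS)
orphans = sorted(set(LINKS) - set(depths))
print("Click depths:", depths)
print("Orphan pages:", orphans)
```

Pages that sit many clicks deep, or that show up as orphans, are good candidates for new internal links.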

When building out your IA, focus on simplicity and organization. The naming conventions of the labels, pages and links should leave no room for interpretation. The path structure for each page should be descriptive and brief (e.g., /products/baby-romper/). And it should all be organized in a logical fashion.
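If your CMS lets you script URL paths, a small slug function keeps them descriptive and brief. This is a minimal sketch that assumes two-level `/section/page/` paths like the example above; the function names are illustrative.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a short, lowercase, hyphenated URL segment."""
    # Strip accents (é -> e), then keep only runs of letters and digits
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", ascii_title.lower())
    return "-".join(words)


def page_path(section: str, title: str) -> str:
    """Build a /section/page/ path from human-readable labels."""
    return f"/{slugify(section)}/{slugify(title)}/"


print(page_path("Products", "Baby Romper"))  # /products/baby-romper/
```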

5. Broken Pages

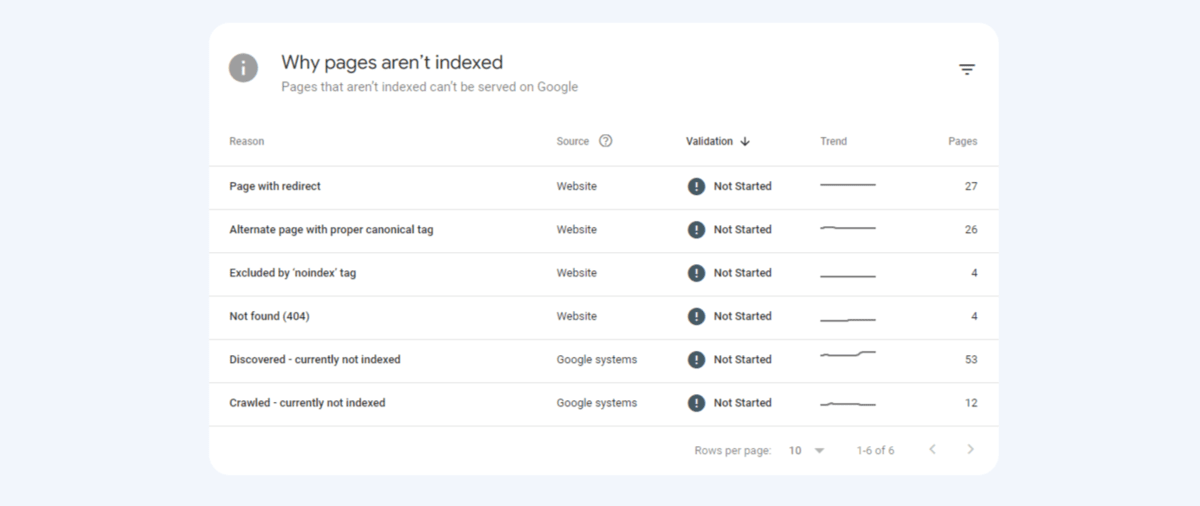

If you scroll down the Pages tab in Google Search Console, you’re going to find a list of reasons why certain pages aren’t indexed. Noindex and canonical tags are just two of the reasons you might find.

For example, my site has four other reasons listed:

- Page with redirect

- Not found (404)

- Discovered – currently not indexed

- Crawled – currently not indexed

We’re not going to worry about the last two here, as they mean Google knows about those pages but hasn’t indexed them (at least not yet). The first two, however, relate to “broken” pages.

Broken pages take users to a 404 page, which is one of the most common website errors.

If you or your team are doing cleanup on a website and deleting old, irrelevant content, people are going to encounter the 404 unless you have a suitable alternative cued up for it. But that doesn’t mean your team needs to write a new blog post about small business marketing to replace the one that was just deleted. It just means directing visitors looking for the old post to the main blog page, for instance.

While your users might not be able to avoid every 404 error, you can reduce the likelihood of one by setting up a 301 redirect for any renamed or deleted page. Even though Google won’t penalize your site for a 404, it will if it finds that large numbers of users abandon your site out of frustration because none of the links work.
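Most servers and CMSs handle redirects through their own configuration, but the logic is easy to sketch. The hypothetical redirect map below sends the deleted small business marketing post to the main blog page and follows chains to their final destination, so visitors get a single 301 instead of several hops. The paths and the `resolve` helper are illustrative, not any particular server’s API.

```python
# Hypothetical redirect map (old path -> new path) and the set of live pages
REDIRECTS = {
    "/blog/small-business-marketing/": "/blog/",
    "/shop/romper/": "/products/romper/",
    "/products/romper/": "/products/baby-romper/",
}
LIVE_PAGES = {"/", "/blog/", "/products/baby-romper/"}


def resolve(path, redirects=REDIRECTS, live=LIVE_PAGES, max_hops=10):
    """Return the (status, location) a server could send for a requested path."""
    if path in live:
        return 200, path
    target, hops = path, 0
    # Follow the chain to its end so the visitor gets one 301, not several in a row
    while target in redirects:
        target = redirects[target]
        hops += 1
        if hops > max_hops:
            raise ValueError(f"redirect loop starting at {path}")
    if target != path and target in live:
        return 301, target
    # No redirect, or the chain ends at a page that no longer exists
    return 404, None


for requested in ["/shop/romper/", "/blog/small-business-marketing/", "/no-such-page/"]:
    print(requested, resolve(requested))
```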

6. Responsiveness

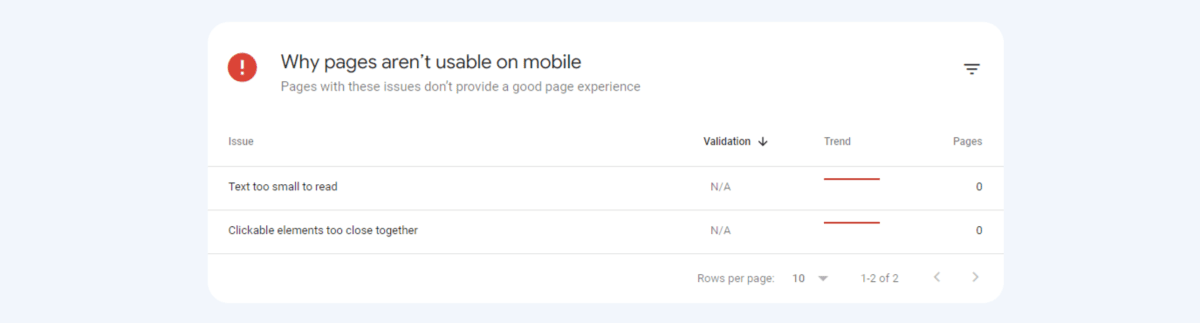

There is a tab called Mobile Usability in Google Search Console. However, this feature is set to retire at the end of 2023.

Google’s never really had a great tool for checking mobile responsiveness. Its mobile-friendly test tool is literally a “Yes” or “No” evaluation. So having this one built into Google Search Console was useful at times as it provided some direct feedback on mobile-related issues.

For instance, in the list of issues above, Google is telling me that there’s text that’s too small to read as well as clickable elements that are too close together.

Your best bet is to get yourself a reliable responsive checker. The one I use is the Mobile simulator Chrome extension. It doesn’t provide a list of mobile-related errors. Instead, it lets you view your site on dozens of devices. It takes some work, but it’ll at least help you identify and fix issues that appear on smaller screens.

7. Page Loading Speed

Webpage loading speeds don’t affect a search engine’s ability to index your site. However, they do seriously impact the user experience. So if your site loses visitors because they can’t stand to wait 15 seconds, 10 seconds or even 5 seconds for a page to load, your ranking will drop as a result. It’s all tied together.

There are lots of things you can do to ensure each page (or, at the very least, each of your most critical pages) loads in a few seconds or less. For example:

- Choose fast website hosting.

- Add a CDN.

- Resize and compress images.

- Implement lazy loading.

- Replace PNG and JPG image files with WebP.

- Embed videos from YouTube or Vimeo instead of uploading them to the server.

- Cache your content.

- Minify HTML, CSS and JavaScript.

- Combine CSS and JavaScript.

- Implement async loading.

- Clean up messy code.

- Reduce the number of redirects.

- Limit the number of plugins and API connections used.
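Caching is one item on this list that comes down to a few HTTP headers. The sketch below picks a `Cache-Control` value per request path, assuming a build step that adds a content hash to static file names (e.g. `app.3f9a1c2b.css`). Because a fingerprinted file’s contents never change under that name, it can be cached for a year; HTML gets `no-cache`, which lets browsers store it but makes them revalidate before reuse. The durations are illustrative, not recommendations from Google.

```python
import re

# Assumed naming convention: an 8+ character hex hash before the extension
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(css|js|webp|woff2)$")
STATIC_EXTENSIONS = (".css", ".js", ".webp", ".png", ".jpg", ".woff2")


def cache_control(path: str) -> str:
    """Choose a Cache-Control header value for a request path."""
    if FINGERPRINTED.search(path):
        # A changed file gets a new name, so the old one can be cached "forever"
        return "public, max-age=31536000, immutable"
    if path.endswith(STATIC_EXTENSIONS):
        # Un-hashed static files: cache for a day
        return "public, max-age=86400"
    # HTML and everything else: store, but revalidate before each reuse
    return "no-cache"


for p in ["/assets/app.3f9a1c2b.css", "/img/hero.webp", "/blog/technical-seo/"]:
    print(p, "->", cache_control(p))
```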

If you want to see how these optimizations impact websites, check out this post on how to create lightweight and faster mobile UIs.

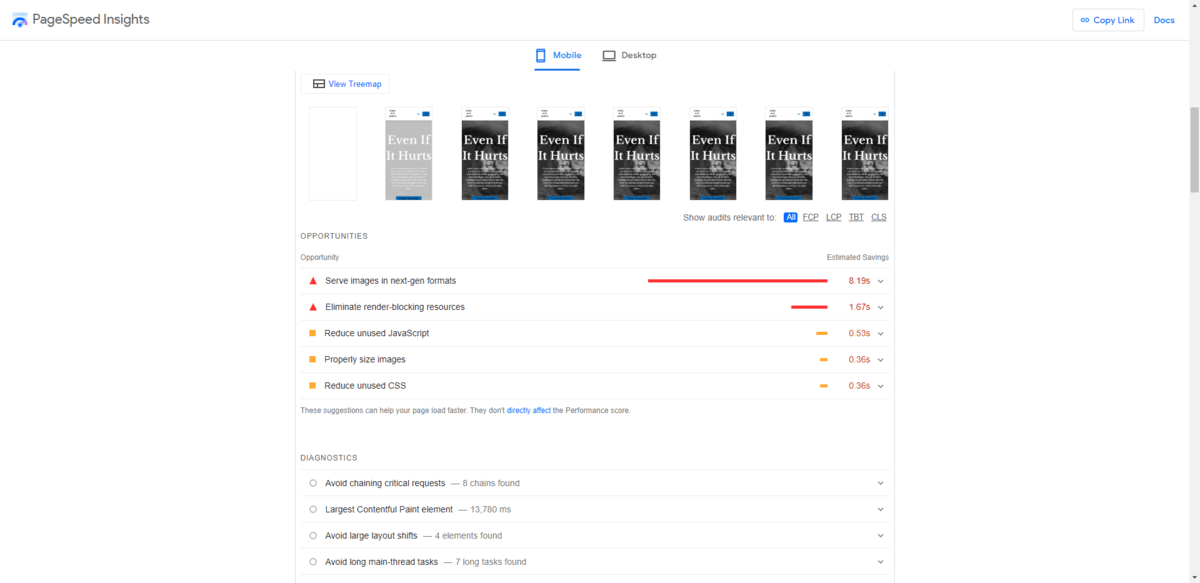

Google may have even more suggestions for you. To find out what your Performance score is and ways to improve it, use PageSpeed Insights.

At the top of this page, Google will break down how long it takes your homepage (or whatever page you’re evaluating) to load. It will tell you the:

- First Contentful Paint

- Largest Contentful Paint

- Total Blocking Time

- Cumulative Layout Shift

- Speed Index

It will even give you a visual demonstration of what the page looks like as it loads. Down below you’ll find “Opportunities” to improve your performance score.
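To make sense of these numbers, it helps to compare them against the thresholds Google publishes on web.dev. The sketch below rates four of the metrics using those thresholds as I understand them at the time of writing (FCP and LCP in seconds, TBT in milliseconds, CLS unitless); check the current documentation before relying on them, and note that PageSpeed Insights computes its Performance score with its own weighted scoring curves. The report values are made up.

```python
# (good_up_to, poor_above) per metric, based on web.dev guidance; verify before use
THRESHOLDS = {
    "FCP": (1.8, 3.0),    # seconds
    "LCP": (2.5, 4.0),    # seconds
    "TBT": (200, 600),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a measurement as good, needs improvement or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Made-up measurements for illustration
report = {"FCP": 1.6, "LCP": 3.2, "TBT": 750, "CLS": 0.05}
for metric, value in report.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```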

8. Security

This is another one of those areas where Google is vague on what to do to be in its good graces. If you look at Google Search Console, the Security issues section will either tell you that you have issues or don’t.

PageSpeed Insights briefly touches on the security matter under the Best Practices section. You’ll find a few notes related to security here:

- “Uses HTTPS”

- “Avoid deprecated APIs”

- “Ensure CSP (Content Security Policy) is effective against XSS attacks”

Google is basically looking for the bare minimum when it comes to security. However, if your site should happen to get breached and users are impacted, Google will take notice and respond in kind when it comes to ranking it.
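Two of those checks come down to response headers. The sketch below builds a Strict-Transport-Security header (which tells browsers to use HTTPS only) and a nonce-based Content-Security-Policy of the kind that CSP audit favors. It assumes pages are rendered server-side so a fresh nonce can be stamped onto each `<script>` tag per response; the function name and the one-year HSTS duration are illustrative.

```python
import secrets


def security_headers() -> tuple[dict[str, str], str]:
    """Return (headers, nonce) for one response; generate a new nonce every time."""
    nonce = secrets.token_urlsafe(16)  # random, URL-safe base64 value
    csp = "; ".join([
        # Only scripts carrying this nonce (and scripts they load) may run
        f"script-src 'nonce-{nonce}' 'strict-dynamic'",
        "object-src 'none'",
        "base-uri 'none'",
    ])
    headers = {
        # Browsers ignore HSTS on plain HTTP, so send it from the HTTPS site
        "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
        "Content-Security-Policy": csp,
    }
    return headers, nonce


headers, nonce = security_headers()
for name, value in headers.items():
    print(f"{name}: {value}")
print(f'<script nonce="{nonce}" src="/js/app.js"></script>')
```

A strict policy like this will block inline scripts and event handlers that lack the nonce, so test it on a staging copy first.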

This 22-point security checklist will help you cover your bases. Even if Google doesn’t look for or check these things off, you should for the sake of your users.

9. Accessibility

When Google pushed out its page experience update, accessibility became an important technical matter to pay attention to.

It makes sense when you think about it. We’ve come so far with web development that Google barely addresses something like mobile responsiveness anymore, because it’s a given. So what’s the next step? Making sure that all devices, browsers and humans have equal access to websites.

Although Google says that accessibility isn’t an official ranking factor, it’s no different from 404 pages or security breaches. Sure, Google might not derank your site or a page if it catches any of these issues. But if your users respond negatively to them, then it certainly will.

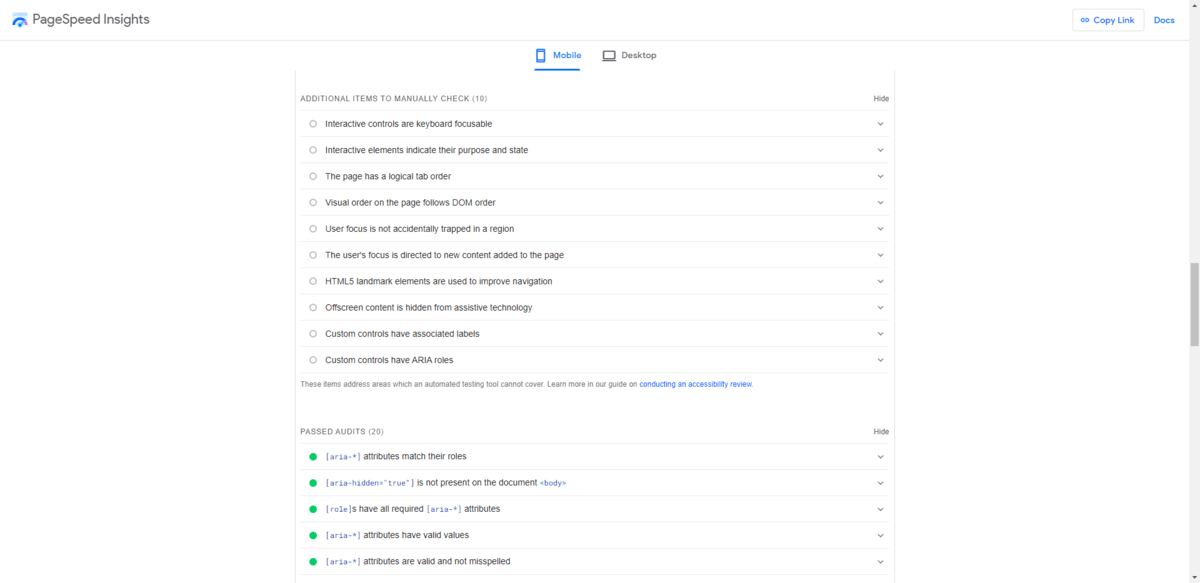

If you go into PageSpeed Insights and scroll down to your Accessibility score, you’ll find issues related to the following categories:

- Navigation

- Contrast

- Names and Labels

Google keeps track of other issues related to how your pages are marked up for screen reader technology and other assistive devices.

There’s a list of “Additional Items to Manually Check” as well as “Passed Audits.” These have to do with things like HTML markup, ARIA, interactive elements, user focus and more.

I would recommend generating your own accessibility checklist from everything you find here. There are other useful resources for creating accessible websites, like the W3C’s Web Accessibility Initiative (WAI), but Google’s checklist is a good place to get started.
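Contrast is the easiest of these categories to check programmatically. WCAG 2.x defines a contrast ratio based on relative luminance, and level AA requires at least 4.5:1 for body text and 3:1 for large text (18pt, or 14pt bold). The sketch below implements that formula in Python; the function names are illustrative.

```python
def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB color such as "#1a2b3c"."""
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo sRGB gamma encoding for each channel
    r, g, b = [c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4 for c in channels]
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    """(lighter + 0.05) / (darker + 0.05), ranging from 1:1 to 21:1."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG AA: 4.5:1 for body text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#767676", "#ffffff"), 2), passes_aa("#767676", "#ffffff"))
```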

Wrap-up

Technical SEO might not get as much buzz as on-page or off-page SEO. However, it’s critical to take care of it, as it can have serious impacts on your website. From making it difficult for search engines to crawl your content to creating an unpleasant experience for users, technical SEO factors have a wide range of effects. So if you’re not already addressing these matters in your web design process, it’s time to start.

Suzanne Scacca

A former project manager and web design agency manager, Suzanne Scacca now writes about the changing landscape of design, development and software.